From Persona to Personalization: A Survey on Role-Playing Language Agents

postJiangjie Chen, Xintao Wang, Rui Xu, Siyu Yuan, Yikai Zhang, Wei Shi, Jian Xie, Shuang Li, Ruihan Yang, Tinghui Zhu, Aili Chen, Nianqi Li, Lida Chen, Caiyu Hu, Siye Wu, Scott Ren, Ziquan Fu, Yanghua Xiao

Published: 2024-10-09

🔥 Key Takeaway:

The most powerful way to shape an AI persona isn’t by changing its training data or fine-tuning—it’s by dropping a tiny prompt hint about who it is; a single sentence can override billions of learned parameters, meaning prompt engineering beats model engineering when it comes to steering behavior.

🔮 TLDR

This survey reviews how AI language models can simulate different types of personas—demographic groups, well-known characters, and individual users—for applications like market research and product testing. It highlights that the most effective virtual audiences use personas grounded in real data (census, bios, user logs), and that models can be made more realistic through fine-tuning on relevant conversations or by dynamically providing context and memory of prior interactions. The paper notes that assigning personas improves the depth and diversity of responses, but warns that such simulations can sometimes amplify biases or generate unrealistic behaviors if personas are too simple or data is unbalanced. Accurate market simulations should capture holistic persona diversity (not just single attributes), use both static (pre-trained) and dynamic (prompted) conditioning, and evaluate outputs via both automated and human methods. Key risks include bias, hallucination, privacy leaks, and lack of true social intelligence compared to humans. For actionable improvements, the authors suggest: use detailed, multi-attribute persona profiles; regularly update agent memory with new interactions; incorporate methods to measure and reduce bias and hallucination; and consider ensemble querying or pass-at-k sampling for higher fidelity. The paper also includes a taxonomy of dozens of published datasets and systems (see page 12 and Table 4 on page 47) that can be used as reference points for benchmarking and improving AI audience simulators.

📊 Cool Story, Needs a Graph

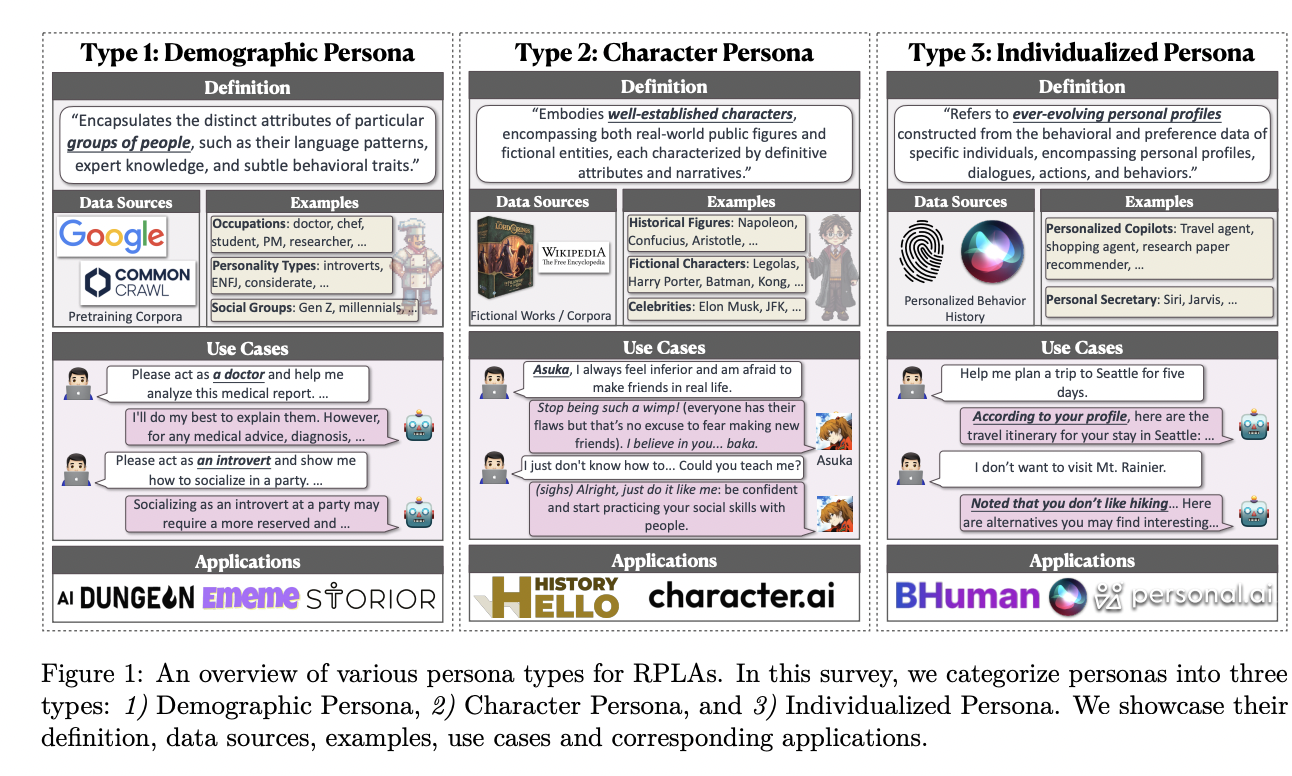

Figure 1: An overview of various persona types for RPLAs

Caption: Categorization and examples of AI personas used in Role-Playing Language Agents (RPLAs).

This figure outlines the three primary types of AI personas simulated by Role-Playing Language Agents: (1) Demographic Personas, based on group traits like occupation or personality type (e.g., doctor, introvert); (2) Character Personas, which simulate well-known real or fictional individuals (e.g., Elon Musk, Harry Potter); and (3) Individualized Personas, built from ongoing interactions and user data (e.g., personalized digital assistants). It shows how these personas differ in data sources, use cases, and construction methods. This is central to the paper's argument that AI personas must be differentiated by their data-driven origins and roles, as each type poses unique challenges for fidelity, safety, and application accuracy in synthetic settings."

⚔️ The Operators Edge

One critical but easily overlooked detail in this study is that the most *effective* persona conditioning comes not from elaborate backstories or fine-tuned personality blends, but from *short, sharp prompts that trigger latent statistical stereotypes already embedded in the model*. This works because large language models are pretrained on massive corpora filled with patterns of how certain demographics, roles, and archetypes typically behave. By simply activating those priors—e.g., “you’re an extroverted partygoer”—the model shifts its behavior more dramatically and predictably than it does when given rich but ambiguous narrative detail.

Why it matters: Many practitioners assume realism in AI personas depends on adding *more* nuance and complexity—when in fact, shorter prompts that efficiently trigger the model’s internal statistical biases tend to create more consistent and differentiated outputs. This flips the conventional wisdom and highlights prompt compression, not expansion, as the real control surface for behavioral diversity in simulations.

Example of use: A researcher building a synthetic panel to test responses to a controversial product could prompt agents with minimal archetypes—like “religiously conservative rural voter” or “climate-anxious urban Gen Z student”—to instantly access distinct, coherent worldviews that guide realistic reactions. This allows for fast, interpretable comparisons without needing extensive persona documents or fine-tuned model variants.

Example of misapplication: A UX team designing AI personas for simulated usability testing invests weeks crafting 2-page character sheets for each agent, only to find that responses feel generic or contradictory. They assume the model is underpowered or insufficiently trained, when in reality, it’s being overloaded with mixed cues. Had they instead used a single, sharp descriptor per persona (e.g., “impatient engineer with little tolerance for slow apps”), the model would have produced far more differentiated and useful feedback.

🗺️ What are the Implications?

• Use real demographic and behavioral data to shape your AI personas: Simulations that use authentic data sources—like surveys, census, or real user logs—to generate persona profiles yield more credible and actionable insights than those that use generic or fictional personas.

• Build diverse persona sets covering multiple traits, not just one or two variables: Research shows that deleting a single demographic attribute (such as age or gender) rarely changes simulated outcomes, meaning accurate studies require a holistic mix of demographic, personality, and preference traits to reflect true audience diversity.

• Leverage few-shot or prompt-based approaches to quickly adapt personas to new studies: Instead of retraining models from scratch, you can use well-crafted prompts and a small number of example questions to tailor personas to specific research tasks, which saves time and budget.

• Regularly update persona “memories” with new simulated interactions: The most realistic AI audiences are those where each persona’s responses evolve based on prior interactions or feedback, mimicking how real consumers change their minds or learn over time.

• Apply both automated and human spot-checks to simulation results: Automated scoring is useful for consistency and speed, but periodic human review helps catch off-base outputs, unrealistic biases, or hallucinations, making your findings more robust.

• Be aware of and mitigate bias amplification in AI personas: Assigning extreme or stereotypical personas can exaggerate group differences or produce toxic outputs, so always check for and correct these issues before acting on the results.

• Don’t rely solely on the newest or biggest AI model: The way you craft prompts and design the simulation has as much, if not more, impact on accuracy than the choice of model—so focus on thoughtful study design rather than chasing the latest tech.

• Use simulation for open-ended, creative, or qualitative research rather than hard factual testing: Simulated personas are more effective for exploring customer journeys, concept tests, or emotional reactions than for price sensitivity or yes/no factual questions.

📄 Prompts

Prompt Explanation: Prompt used to simulate an extroverted personality by specifying social traits.

You’re a friendly and outgoing individual who thrives on social interactions. Always ready for a good time, you enjoy being the center of attention at parties...

⏰ When is this relevant?

A financial services company wants to assess how different customer types would react to a new digital budgeting app, comparing reactions and feature preferences between three target segments: young professionals, busy parents, and retirees. The goal is to simulate qualitative feedback and identify which features or selling points resonate most, to guide both messaging and product prioritization.

🔢 Follow the Instructions:

1. Define audience segments: Create three AI persona profiles, each reflecting a real customer type. For example:

• Young professional: 26, urban, tech-savvy, wants to optimize spending and save for travel.

• Busy parent: 40, suburban, two kids, time-strapped, needs easy family expense tracking.

• Retiree: 68, rural, fixed income, cautious with technology, focused on avoiding overspending.

2. Prepare prompt template for persona simulation: Use this structure:

You are simulating a [persona description].

Here is the product being tested: ""[Insert product description here]"".

You are talking to a market researcher conducting a one-on-one interview.

Respond as yourself (the persona), in 3–5 sentences, about your honest reaction to the product and what would make you more or less likely to use it.

First question: What stands out to you about this digital budgeting app, and how do you think it would fit your life?

3. Generate responses for each persona: For each segment, run the prompt (using a language model like GPT-4 or similar) and generate 5–10 variations per persona, slightly rewording the question for variety (e.g., ""What do you like or dislike about this app?"" or ""Would you consider switching from your current budgeting method?"").

4. Conduct follow-up probing: For each response, ask 1–2 follow-up questions: e.g., ""What feature would be most useful or missing for you?"" or ""What concerns, if any, would you have about using this app?"" Continue using the persona’s voice for each answer.

5. Tag and cluster responses: Review the output and label themes such as “mentions automation,” “concerned about privacy,” “likes family tracking,” “finds it too complex,” or “interested in mobile notifications.”

6. Compare and summarize findings: Collate the main preferences and objections for each persona group, noting which features or messages consistently drive positive or negative reactions. Identify clear contrasts (e.g., retirees mention ease-of-use most; young professionals care about app integrations).

"

🤔 What should I expect?

You will have a set of realistic, segment-specific feedback highlighting top features, concerns, and motivators for each customer type. This allows easy comparison of which aspects of the product and messaging resonate best, helping prioritize product features, refine positioning, and decide where to focus further live research or marketing spend.<br>