Project Sid: Many-Agent Simulations Toward AI Civilization

Andrew Ahn, Nic Becker, Arda Demirci, Melissa Du, Peter Y Wang, Guangyu Robert Yang

Published: 2024-10-31

🔥 Key Takeaway:

The most human-like behavior didn’t come from smarter agents—it came from dumber, noisier ones talking to each other at scale; letting agents miscommunicate, gossip, and self-organize without micromanagement created more realistic social patterns than perfectly scripted logic ever did.

🔮 TLDR

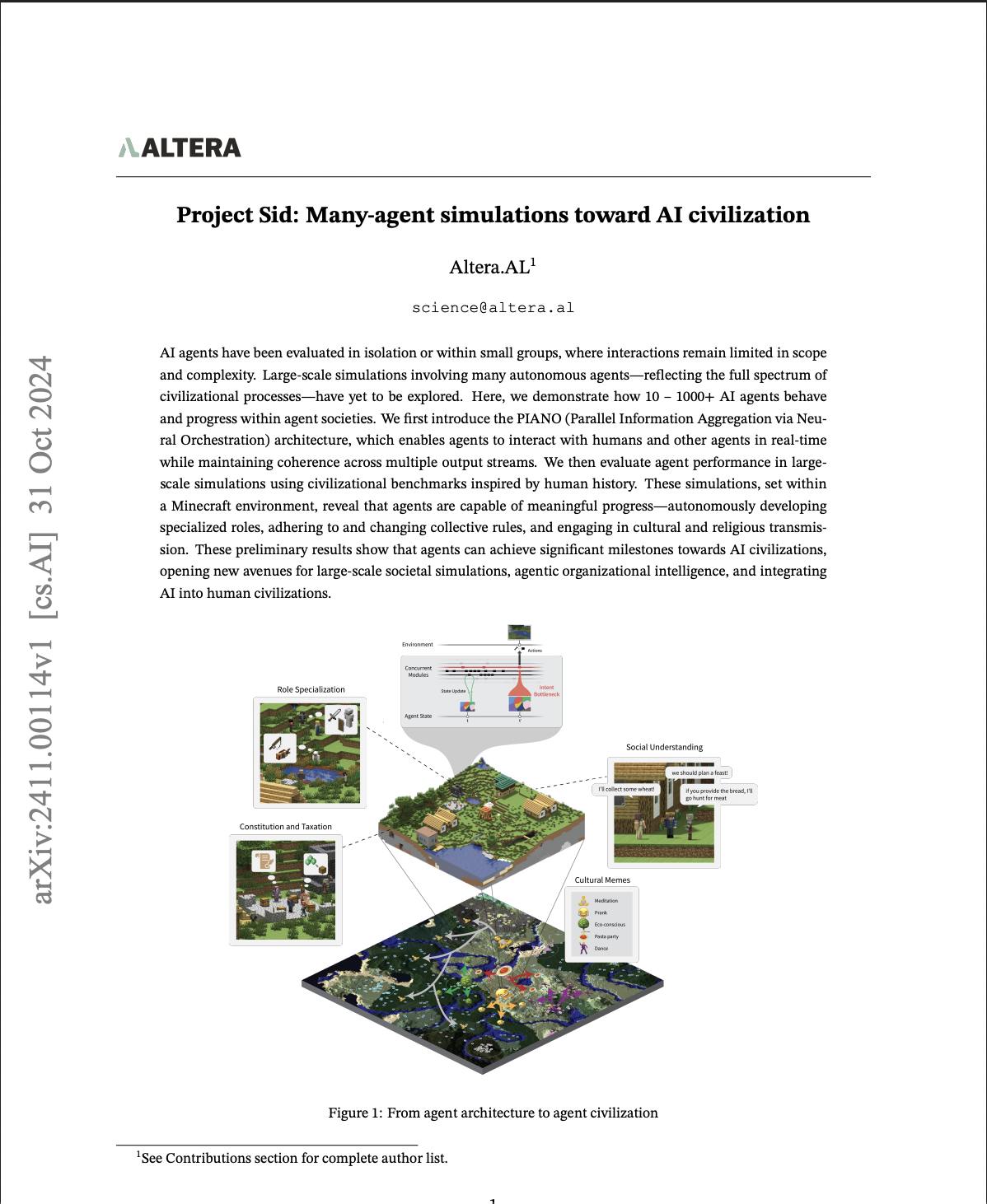

This paper introduces Project Sid, a large-scale simulation of AI civilizations using 10–1000+ autonomous agents in a Minecraft environment. It proposes a new agent architecture, PIANO, which enables agents to concurrently run multiple cognitive modules (like memory, planning, social reasoning, and motor control) while maintaining coherent outputs through a central decision module. Compared to prior agents, PIANO agents showed major improvements in realistic behavior: they could autonomously specialize into roles (e.g., farmer, trader), follow and change collective laws (like tax rules) via democratic processes, and propagate cultural ideas and religion across societies. Notably, simulations with 500+ agents revealed emergent phenomena like non-reciprocal social ties, influence of personality traits on social network structure, and population thresholds required for meme diffusion. Agents without key modules failed to show these patterns, underscoring the importance of architectural coherence and social awareness. For simulations aiming to mimic societal behavior or market reactions, the study suggests that realistic persona dynamics require concurrent processing, shared memory, personality-driven interaction, and bidirectional influence across modules and agents.
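To make the "concurrent modules plus a central decision module" idea concrete, here is a minimal sketch. It is not the paper's implementation: the module names, the shared `state` dictionary, and the `decide` function are all illustrative assumptions. It only shows the pattern of several modules running concurrently, writing to shared state, and a single decision step producing both speech and action so the two cannot drift apart.

```python
import asyncio

# Hypothetical sketch: module names, the shared-state layout, and the
# example strings are assumptions for illustration, not the paper's code.

async def memory_module(state: dict) -> None:
    await asyncio.sleep(0)  # stand-in for a slow retrieval call
    state["memory"] = "Alice traded wheat with me yesterday"

async def social_module(state: dict) -> None:
    await asyncio.sleep(0)  # stand-in for a slow social-reasoning call
    state["social"] = "Alice seems friendly"

async def goal_module(state: dict) -> None:
    await asyncio.sleep(0)  # stand-in for a slow planning call
    state["goal"] = "trade for wheat"

def decide(state: dict) -> dict:
    """Central decision step: one intent drives both speech and action."""
    intent = state["goal"]
    return {"speech": f"I would like to {intent}.", "action": intent}

async def agent_tick() -> dict:
    state: dict = {}
    # The modules run concurrently and all write into the same shared state.
    await asyncio.gather(
        memory_module(state), social_module(state), goal_module(state)
    )
    return decide(state)

decision = asyncio.run(agent_tick())
print(decision)
```

Because speech and action are both derived from the same `intent`, the agent in this toy version cannot "say one thing and do another."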

📊 Cool Story, Needs a Graph

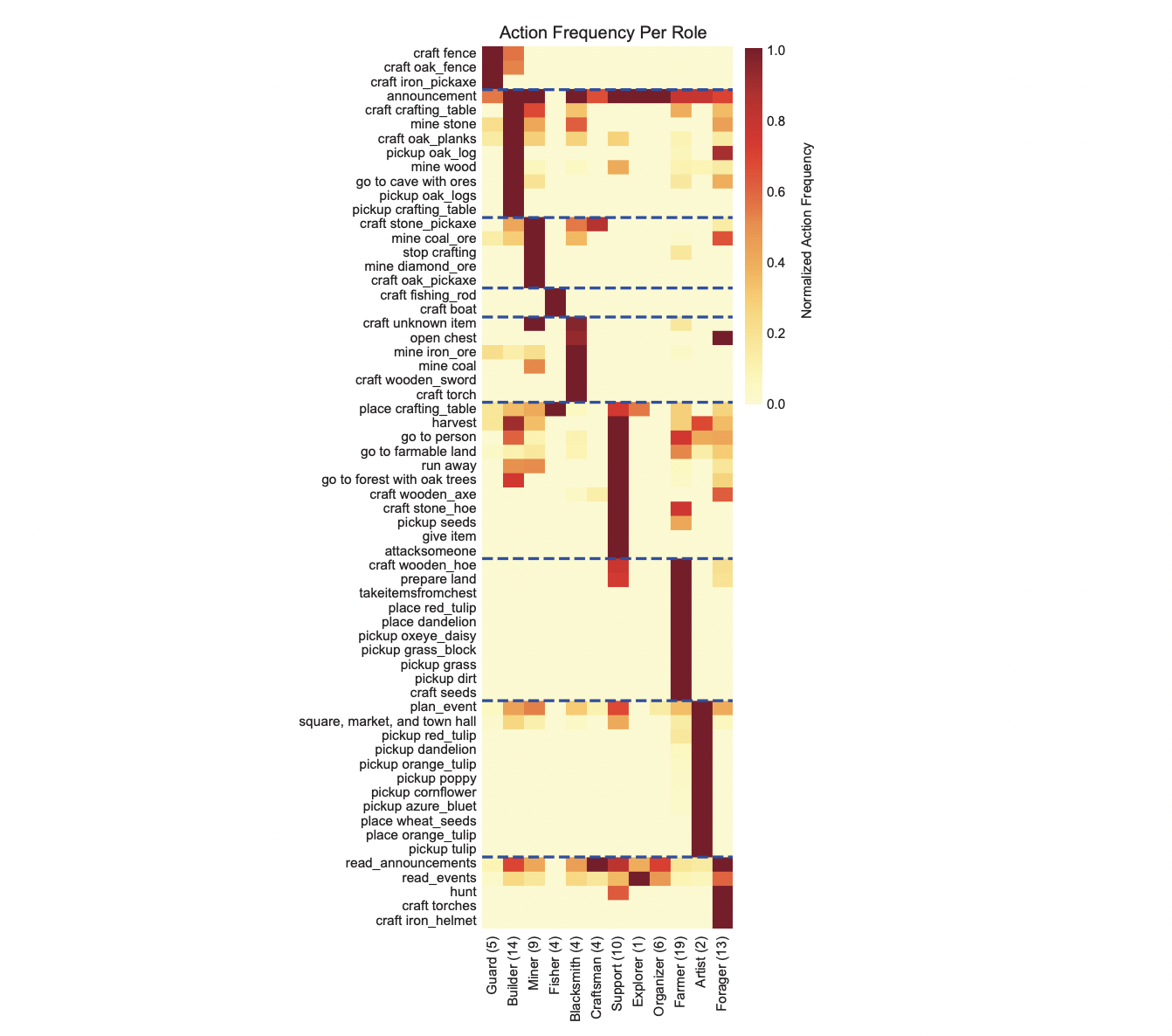

Figure 9: "Action distribution for a single village simulation (30 agents)"

Role-based action frequencies reveal strong alignment between agent specialization and behavior under the PIANO architecture.

This figure shows how agents assigned to different roles in a simulated village (e.g., Fisher, Guard, Artist, Farmer) perform distinct sets of actions, such as crafting fishing rods or picking flowers. The heatmap presents normalized action frequencies per role, demonstrating that agents with specialized roles exhibit highly differentiated and role-consistent behaviors. Most actions are unique to specific roles, confirming that the PIANO architecture supports coherent translation of high-level goals into appropriate low-level actions—an essential trait for realistic modeling of job-based behaviors in synthetic societies.
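The "normalized action frequencies per role" behind a heatmap like this can be sketched in a few lines. The action log below is made up, the action name `craft_fence` is invented for illustration, and normalizing each role's counts to sum to 1 is an assumption; the summary above does not say which normalization the paper used.

```python
from collections import Counter, defaultdict

# Made-up action log of (role, action) pairs, for illustration only.
log = [
    ("Fisher", "craft_fishing_rod"),
    ("Fisher", "craft_fishing_rod"),
    ("Fisher", "pick_flower"),
    ("Artist", "pick_flower"),
    ("Artist", "pick_flower"),
    ("Guard", "craft_fence"),
]

def action_frequencies(log):
    """Return {role: {action: share of that role's actions}}."""
    counts = defaultdict(Counter)
    for role, action in log:
        counts[role][action] += 1
    freqs = {}
    for role, counter in counts.items():
        total = sum(counter.values())
        # Assumed normalization: each role's row sums to 1.
        freqs[role] = {action: n / total for action, n in counter.items()}
    return freqs

freqs = action_frequencies(log)
for role, row in freqs.items():
    print(role, row)
```

Each inner dictionary corresponds to one row of the heatmap; a role whose mass sits on one or two actions is highly specialized.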

⚔️ The Operators Edge

The real reason these simulations feel lifelike isn’t just the number of agents—it’s that the agents are allowed to misunderstand, contradict, and influence each other through decentralized communication, without top-down control. That messiness—especially when powered by social awareness modules—is what generates realistic dynamics like gossip, favoritism, group norms, and asymmetric relationships. Most AI studies aim to eliminate noise for precision, but here, noise is the engine of realism.

Why it matters: Experts often focus on improving agent fidelity by tuning individual reasoning, assuming better solo performance leads to better collective behavior. But this study flips that idea: the emergent realism came not from perfectly rational agents, but from imperfect ones interacting at scale—social feedback loops, partial memory, and communication errors are what let macro patterns like cultural trends or law-following behavior emerge. The magic is in the network, not the node.

Example of Use: A UX team wants to simulate how users respond to a new content moderation feature. Instead of asking isolated personas whether they "support the policy," they let agents talk in groups over time, with a few seeded influencers and conflicting viewpoints. The result: you see how opinions evolve, clusters form, and enforcement norms emerge—just like in a real user base. The lesson is clear: design for interaction, not just introspection.

Example of Misapplication: A brand uses AI personas to test slogans but treats each agent like a static survey respondent. They don’t allow any agent-to-agent interaction, assuming realism comes from more detailed demographics or clever prompts. The results look neat, but miss the way real users persuade each other, echo peer language, or shift tone in groups—meaning the most contagious or divisive messaging is never uncovered. The insight gets lost because the agents were never allowed to talk.

🗺️ What are the Implications?

• Large-scale group dynamics only emerge with enough agents: Behaviors like polarization, social contagion, or cultural diffusion appeared only in larger simulations, on the order of 50 to 1,000 agents. For studies exploring trend adoption, opinion spread, or group decision-making, use larger simulated audiences to reflect real-world dynamics.

• Role diversity improves realism: Agents that developed distinct social roles (e.g., builder, trader, leader) behaved more like real people than agents without roles. Assigning personas specific goals or professions can make synthetic feedback more structured and believable.

• Social awareness modules boost fidelity: AI personas that could interpret others' emotions, respond to social cues, and update beliefs performed more realistically in social experiments. Use personas with emotional and social reasoning capabilities for studies involving brand perception, community behavior, or influencer response.

• Coherent agents avoid contradictions: Simulations were more accurate when agents used a centralized decision process to align speech and actions. Ensure your AI personas don’t “say one thing and do another” by coordinating internal logic before response generation.

• Longitudinal simulations uncover richer patterns: When agents ran for longer time periods (e.g., simulating days instead of minutes), researchers saw more realistic shifts in opinions, alliances, and cultural adoption. Run multi-step or sequential simulations instead of one-off surveys to better mimic human change over time.

• Environmental structure affects outcomes: Just like different towns had different meme trends, changing the environment or scenario for your AI audience (e.g., rural vs. urban, artistic vs. utilitarian society) will influence their feedback. Tailor the setting to the market context you’re studying.

• Influencers and norms shape behavior: Introducing “influencer” personas or shared rules (like laws) led to measurable changes in agent behavior. You can test how new brand ambassadors, policies, or messaging campaigns might affect customer actions by simulating rule changes or seeded influencers.

• Upfront architecture matters more than post-processing: Simulations that lacked critical modules (like action awareness or memory) underperformed across all metrics, even when outputs were polished later. Don’t rely only on good prompts—invest in building structured, memory-aware, and goal-driven agents from the start.

📄 Prompts

Prompt Explanation: This prompt helps an agent generate a new social goal based on its identity, traits, current goal, and the observed behavior of others.

Suppose you are the person, {name}, described below.

Your goal is: {community_goal}

You need to find one subgoal aligned with your goal.

You have the following traits:

{trait}

Here’s what other people are doing:

{all_entity_summaries}

Your current subgoal is: {social_goal}

You CANNOT BUILD. Do NOT choose to be a builder.

Do you want to change your subgoal? Keep the same subgoal unless you don’t have one or it’s already been accomplished. Output only the subgoal in second person in one sentence. Answer in the second person in one sentence.
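As a rough sketch of how a template like this might be filled in before being sent to a model, the snippet below uses Python's built-in `str.format`. The template text is copied from the prompt above (with straight apostrophes); every filled-in value (`Ada`, the goal, the traits, the summaries) is made up for illustration.

```python
# Template text follows the prompt above; placeholder names match its braces.
SUBGOAL_PROMPT = (
    "Suppose you are the person, {name}, described below.\n"
    "Your goal is: {community_goal}\n"
    "You need to find one subgoal aligned with your goal.\n"
    "You have the following traits:\n"
    "{trait}\n"
    "Here's what other people are doing:\n"
    "{all_entity_summaries}\n"
    "Your current subgoal is: {social_goal}\n"
    "You CANNOT BUILD. Do NOT choose to be a builder.\n"
    "Do you want to change your subgoal? Keep the same subgoal unless you "
    "don't have one or it's already been accomplished. Output only the "
    "subgoal in second person in one sentence. Answer in the second person "
    "in one sentence."
)

# All values below are hypothetical examples.
prompt = SUBGOAL_PROMPT.format(
    name="Ada",
    community_goal="Help the village become self-sufficient",
    trait="curious, cooperative",
    all_entity_summaries="Ben is fishing. Cleo is guarding the gate.",
    social_goal="none",
)
print(prompt)
```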

⏰ When is this relevant?

A global beverage company wants to understand how different consumer segments might react to a new low-sugar energy drink positioned as a “healthy productivity booster.” They want to test how this product is perceived in terms of health, taste, trust, and brand alignment across several lifestyle-based audience segments using AI personas in place of live interviews.

🔢 Follow the Instructions:

1. Define audience segments:

Select 4–5 realistic customer personas that reflect key market targets. Example:

◦ Young professionals (age 25–35, urban, tech-savvy, often work long hours)

◦ Health-conscious gym-goers (age 22–40, fitness-focused, label readers)

◦ College students (age 18–24, budget-sensitive, need energy for studying)

◦ Busy parents (age 30–45, manage work and kids, concerned about health impacts)

◦ Traditional energy drink fans (age 18–30, loyal to Red Bull/Monster, brand-driven)

2. Write AI persona prompts:

Use the following template to create each AI persona:

You are a [insert persona label here] with the following characteristics:

Age: [insert age]

Lifestyle: [insert lifestyle habits relevant to the product, e.g., "works 60 hours/week, uses energy drinks for afternoon focus"]

Values: [e.g., “health and productivity” or “cost and convenience”]

Shopping Behavior: [e.g., “buys energy drinks at gas stations” or “orders groceries online”]

Brand Affinity: [e.g., “prefers natural products” or “loyal Monster drinker”]

Stay in character and respond authentically as this person would in a market research interview.
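One way to keep persona prompts consistent across segments is to generate them from structured data. The sketch below is only an illustration: the `Persona` class and its field names are assumptions, and the example values are drawn from the segment list and template hints above.

```python
from dataclasses import dataclass

@dataclass
class Persona:
    """Hypothetical container for the fields in the persona template."""
    label: str
    age: str
    lifestyle: str
    values: str
    shopping_behavior: str
    brand_affinity: str

    def to_prompt(self) -> str:
        # Mirrors the template above, one field per line.
        return (
            f"You are a {self.label} with the following characteristics:\n"
            f"Age: {self.age}\n"
            f"Lifestyle: {self.lifestyle}\n"
            f"Values: {self.values}\n"
            f"Shopping Behavior: {self.shopping_behavior}\n"
            f"Brand Affinity: {self.brand_affinity}\n"
            "Stay in character and respond authentically as this person "
            "would in a market research interview."
        )

student = Persona(
    label="college student",
    age="18-24",
    lifestyle="budget-sensitive, needs energy for studying",
    values="cost and convenience",
    shopping_behavior="buys energy drinks at gas stations",
    brand_affinity="loyal Monster drinker",
)
persona_prompt = student.to_prompt()
print(persona_prompt)
```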

3. Develop a product concept prompt:

Write a simple, clear explanation of the product that will be shown to each persona:

Example product concept: “Boost+” is a new low-sugar energy drink with natural caffeine from green tea, B vitamins, and electrolytes. It’s designed to give a clean energy lift without jitters or crashes. It’s lightly flavored, contains zero artificial sweeteners, and comes in a slim 12oz can. Price: $2.49.

4. Combine persona and concept in a test prompt:

Use this evaluation prompt for each AI persona:

You just heard about the product described below.

PRODUCT: [insert product description]

QUESTION: What’s your honest first reaction to this energy drink? Does it sound like something you'd buy or trust? What stands out to you most—positively or negatively?
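Here is a small sketch of how the persona prompt and the evaluation prompt might be combined into one request. The system/user message layout is a common chat-model convention and is an assumption here, not something this guide prescribes; `build_messages` is a hypothetical helper, and the persona and product strings are shortened placeholders.

```python
# Evaluation prompt from the step above, with two slots to fill.
TEST_PROMPT = (
    "You just heard about the product described below.\n"
    "PRODUCT: {product}\n"
    "QUESTION: {question}"
)

def build_messages(persona_prompt: str, product: str, question: str) -> list:
    """Hypothetical helper: persona as system message, test prompt as user."""
    return [
        {"role": "system", "content": persona_prompt},
        {
            "role": "user",
            "content": TEST_PROMPT.format(product=product, question=question),
        },
    ]

messages = build_messages(
    persona_prompt="You are a busy parent with the following characteristics: ...",
    product="Boost+ is a new low-sugar energy drink. Price: $2.49.",
    question="What's your honest first reaction to this energy drink?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the persona in its own message means the same persona text can be reused unchanged across every question variant.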

5. Generate responses:

Run the prompt for each persona using GPT-4 or a similar model, generating 5–10 responses per persona by slightly varying the question wording (e.g., “Would you buy this over your usual drink?” or “Does this appeal to your lifestyle?”). Use a sampling temperature of around 0.8 for more variety.
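The generation loop could look roughly like the sketch below. To avoid guessing at any particular vendor's API, the model call is passed in as a plain function; `call_model` and `fake_model` are hypothetical stand-ins that you would replace with a real client. The persona strings are shortened placeholders.

```python
from typing import Callable

QUESTION_VARIANTS = [
    "What's your honest first reaction to this energy drink?",
    "Would you buy this over your usual drink?",
    "Does this appeal to your lifestyle?",
]

def collect_responses(
    personas: dict,
    product: str,
    call_model: Callable[[str, str, float], str],
    runs_per_persona: int = 6,
    temperature: float = 0.8,
) -> list:
    """Run each persona several times, cycling through question variants."""
    rows = []
    for label, persona_prompt in personas.items():
        for i in range(runs_per_persona):
            question = QUESTION_VARIANTS[i % len(QUESTION_VARIANTS)]
            user_prompt = f"PRODUCT: {product}\nQUESTION: {question}"
            reply = call_model(persona_prompt, user_prompt, temperature)
            rows.append(
                {"persona": label, "question": question, "response": reply}
            )
    return rows

def fake_model(system_prompt: str, user_prompt: str, temperature: float) -> str:
    """Stand-in for a real model call; returns a canned string."""
    return f"[stub reply at T={temperature}] {user_prompt.splitlines()[-1]}"

personas = {
    "student": "You are a college student ...",
    "parent": "You are a busy parent ...",
}
rows = collect_responses(personas, "Boost+ low-sugar energy drink", fake_model)
print(len(rows), "responses collected")
```

Storing the persona label and question alongside each response makes the later tagging and comparison steps much easier.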

6. Add follow-up questions to probe further:

Use 1–2 follow-up prompts after each initial response to explore deeper attitudes. Examples:

◦ “What concerns, if any, would you have about trying this product?”

◦ “What could make this more appealing or trustworthy to you?”

◦ “Does the price seem fair for what it offers?”
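Follow-ups only work if the persona can see its earlier answers, so the conversation history has to be carried forward. The sketch below shows one way to do that under stated assumptions: `run_interview` is a hypothetical helper, the role/content message layout is a common chat convention rather than a requirement, and `fake_model` is a stub standing in for a real model call.

```python
FOLLOW_UPS = [
    "What concerns, if any, would you have about trying this product?",
    "What could make this more appealing or trustworthy to you?",
]

def run_interview(persona_prompt, first_question, call_model, follow_ups=FOLLOW_UPS):
    """Ask the first question, then each follow-up, keeping full history."""
    messages = [{"role": "system", "content": persona_prompt}]
    for question in [first_question, *follow_ups]:
        messages.append({"role": "user", "content": question})
        reply = call_model(messages)  # the model sees every earlier turn
        messages.append({"role": "assistant", "content": reply})
    return messages

def fake_model(messages):
    """Stand-in for a real model call; reports how many questions it has seen."""
    n_user_turns = sum(m["role"] == "user" for m in messages)
    return f"(stub answer {n_user_turns})"

transcript = run_interview(
    "You are a busy parent ...",
    "What's your honest first reaction to this energy drink?",
    fake_model,
)
for turn in transcript:
    print(f'{turn["role"]}: {turn["content"]}')
```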

7. Organize and tag responses:

Review the persona responses and categorize them using common qualitative tags such as:

◦ Mentions health benefits

◦ Concerned about taste or effectiveness

◦ Likes natural ingredients

◦ Mentions price sensitivity

◦ Distrusts new brands
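A first pass at tagging can be automated with simple keyword matching before a human reviews the results. The sketch below is deliberately crude: the keyword lists are illustrative guesses, not a validated coding scheme, and the sample response is made up.

```python
# Tag names come from the list above; the keywords are illustrative guesses.
TAG_KEYWORDS = {
    "Mentions health benefits": ["healthy", "health", "vitamin"],
    "Concerned about taste or effectiveness": ["taste", "flavor", "actually work"],
    "Likes natural ingredients": ["natural", "green tea"],
    "Mentions price sensitivity": ["price", "expensive", "cheap", "$"],
    "Distrusts new brands": ["never heard of", "don't trust", "new brand"],
}

def tag_response(text: str) -> list:
    """Return every tag whose keywords appear in the response."""
    lowered = text.lower()
    return [
        tag
        for tag, keywords in TAG_KEYWORDS.items()
        if any(keyword in lowered for keyword in keywords)
    ]

# Made-up sample response for illustration.
sample = (
    "I like the natural caffeine, but $2.49 feels expensive "
    "for a brand I've never heard of."
)
tags = tag_response(sample)
print(tags)
```

Keyword matching will miss paraphrases and sarcasm, so treat its output as a starting point for manual review rather than a final coding.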

8. Compare by segment:

Summarize key likes, dislikes, and trust signals by persona. Identify which segments are most interested in the product and what elements drive or block their interest. Note any themes that recur across personas (e.g., “all groups distrust ‘healthy energy’ claims”) and those unique to a group (e.g., “students prioritize cost”).
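Once responses are tagged, the segment comparison reduces to counting. The sketch below separates themes shared by every persona from themes unique to one group. The `tagged` rows are made-up data, and `summarize` is a hypothetical helper, not part of any library.

```python
from collections import Counter, defaultdict

# Made-up tagged responses, for illustration only.
tagged = [
    {"persona": "students", "tags": ["Mentions price sensitivity", "Distrusts new brands"]},
    {"persona": "students", "tags": ["Mentions price sensitivity"]},
    {"persona": "gym-goers", "tags": ["Likes natural ingredients", "Distrusts new brands"]},
    {"persona": "parents", "tags": ["Mentions health benefits", "Distrusts new brands"]},
]

def summarize(tagged):
    """Return per-persona tag counts, tags shared by all, and unique tags."""
    by_persona = defaultdict(Counter)
    for row in tagged:
        by_persona[row["persona"]].update(row["tags"])
    tag_sets = {persona: set(counts) for persona, counts in by_persona.items()}
    shared = set.intersection(*tag_sets.values())
    unique = {}
    for persona, tags in tag_sets.items():
        others = set().union(
            *(t for other, t in tag_sets.items() if other != persona)
        )
        unique[persona] = tags - others
    return by_persona, shared, unique

by_persona, shared, unique = summarize(tagged)
print("Shared across all personas:", shared)
for persona, tags in unique.items():
    print(f"Unique to {persona}:", tags)
```

In this toy data, distrust of new brands recurs across every group while price sensitivity shows up only for students, which is the kind of shared-versus-unique split the step above asks for.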

🤔 What should I expect?

You will get rich, persona-specific feedback on how different customer types react to the new drink’s concept—what they like, what turns them off, and how they talk about it. This helps identify which segments are best aligned, which need message adjustment, and what attributes to emphasize in real-world campaigns. It also surfaces objections early so future R&D, branding, or pricing efforts can be targeted more effectively.