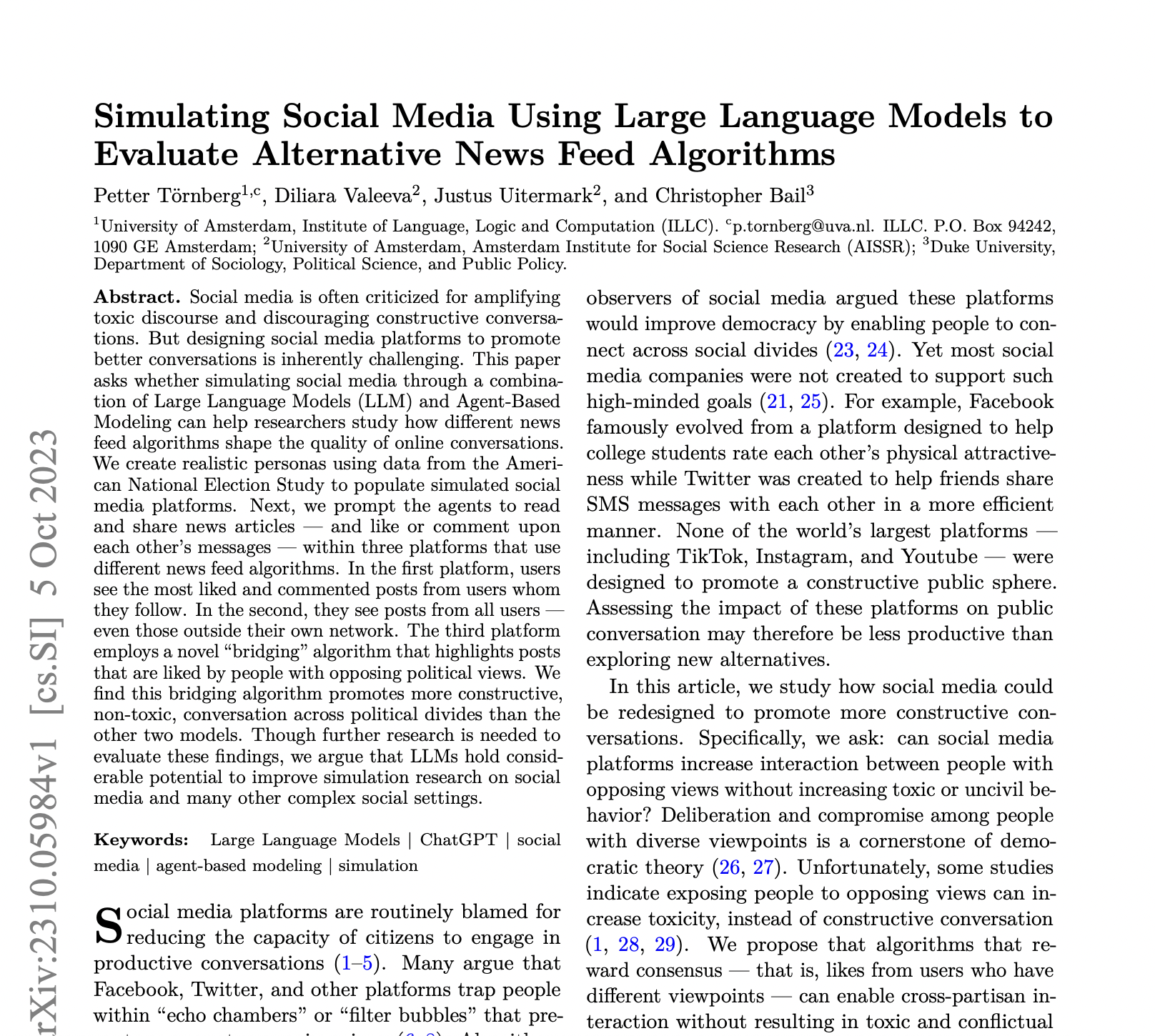

Simulating Social Media Using Large Language Models to Evaluate Alternative News Feed Algorithms

Petter Törnberg, Diliara Valeeva, Justus Uitermark, Christopher Bail

Published: 2023-10-11

🔥 Key Takeaway:

Deliberately engineering a simulated feed to expose people to civil disagreement, by rewarding cross-group approval instead of simply amplifying what is most popular, made the synthetic audience less toxic and more constructive. In other words, surfacing consensus cues in a polarized system did not inflame conflict; it cooled it down.

🔮 TLDR

This paper shows that combining agent-based modeling (ABM) with large language models (LLMs) can realistically simulate a social media platform and be used to test how different news feed algorithms affect online discourse. Using 500 AI personas grounded in the American National Election Study, the authors tested three algorithms: (1) a typical "echo chamber" feed showing only posts from accounts a user follows, (2) a global "popular posts" feed showing high-engagement posts from anyone, and (3) a "bridging" algorithm that promotes posts liked by people with opposing political views. The bridging algorithm produced the most cross-party engagement (comment E-I index of 0.33) and the lowest toxicity (0.07), outperforming both the echo chamber feed (relatively low toxicity, but a comment E-I index of -0.89) and the popular-posts feed (higher toxicity, comment E-I index of -0.70). The simulation reproduced patterns observed on real-world platforms, but the authors stress that fidelity depends on careful calibration with real-world survey data, realistic persona enrichment, and prompt engineering. They also note limitations, including LLM biases, a small sample, and a short simulation duration, but suggest that LLM-powered ABMs are a promising way to test interventions, including ones that would be impractical or unethical to run on real users, in computational social science and market research.
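The three feeds differ only in how candidate posts are ranked for each user. Below is a minimal Python sketch of that difference, assuming each post records who liked it and how many comments it received, and assuming "bridging" means counting likes from users whose party differs from the post author's. The paper's exact scoring formula is not given in this summary, so treat the ranking keys as illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: int                                # assumed to increase over time (higher = newer)
    author: str
    likes_by: set = field(default_factory=set)  # ids of users who liked the post
    comments: int = 0


def echo_chamber_feed(user, posts, following, k=10):
    """Newest posts, restricted to accounts the user follows."""
    own = [p for p in posts if p.author in following.get(user, set())]
    return sorted(own, key=lambda p: p.post_id, reverse=True)[:k]


def popular_feed(posts, k=10):
    """Posts from anyone, ranked by total engagement (likes + comments)."""
    return sorted(posts, key=lambda p: len(p.likes_by) + p.comments, reverse=True)[:k]


def bridging_feed(posts, party, k=10):
    """Posts ranked by likes from users whose party differs from the author's (assumed definition)."""
    def cross_party_likes(p):
        return sum(1 for u in p.likes_by if party[u] != party[p.author])
    return sorted(posts, key=cross_party_likes, reverse=True)[:k]
```

In a full simulation, each agent's feed would be regenerated from one of these functions every round, before the comment and like prompts are run against it.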

📊 Cool Story, Needs a Graph

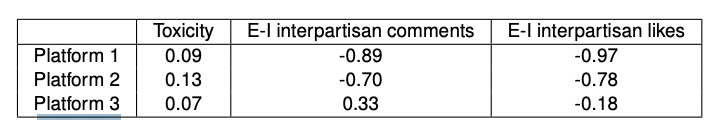

Table 2: The resulting toxicity and interpartisan interaction.

Comparison of toxicity and cross-party engagement across echo chamber, popular posts, and bridging algorithms.

Table 2 condenses the experimental outcomes of all three simulated social media platforms into one view, reporting toxicity and the E-I (external-internal) index for interpartisan comments and likes. The E-I index runs from -1 (all interactions stay within a party) to +1 (all interactions cross party lines). The bridging algorithm outperforms both the echo chamber and popular-posts baselines, achieving the lowest toxicity (0.07) and the only positive E-I index for interpartisan comments (0.33): more cross-party dialogue with less toxicity.
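The E-I index is the standard external-internal measure (Krackhardt and Stern): with E cross-party and I same-party interactions, E-I = (E - I) / (E + I). Here is a minimal sketch, assuming each interaction is a (source, target) pair, such as a commenter and the author of the post they replied to, and that every user carries a single party label. This is an illustration of the formula, not the authors' code.

```python
def ei_index(interactions, party):
    """E-I index over (source, target) pairs.

    E counts pairs whose parties differ, I counts same-party pairs.
    Returns (E - I) / (E + I): -1 = all within-party, +1 = all cross-party.
    """
    external = sum(1 for s, t in interactions if party[s] != party[t])
    internal = len(interactions) - external
    if external + internal == 0:
        raise ValueError("no interactions to score")
    return (external - internal) / (external + internal)
```

Because E + I is the total, the cross-party share equals (1 + E-I) / 2. A comment index of 0.33 therefore means roughly two in three comments crossed party lines. An index of -0.70 means about 15% did, and -0.89 only about 5.5%.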

⚔️ The Operators Edge

A detail that most experts might overlook is how the simulation enriches each AI persona with additional personality traits and interests, using LLM-generated expansions of profiles built from real survey data (as described in the Appendix on page 11). Instead of relying solely on basic demographics or static profiles, the system prompts the LLM to fill in plausible hobbies, favorite sports teams, and specific political opinions. This gives each persona's responses context and makes its engagement with content more realistic and varied.

Why it matters: This enrichment step injects some of the messy, multi-dimensional complexity of real people into the simulation. It helps keep responses from being mere predictable outputs of age, gender, or party, so that they reflect the interplay of lifestyle, interests, and personality. These factors often shape how real consumers or users react to products, ads, or social content. Without this step, simulated audiences risk sounding robotic or homogeneous, which undermines both the predictive power and the credibility of synthetic research.

Example of use: A brand running AI-driven concept tests for a new energy drink could prime its personas not only with "age 28, male, urban, fitness enthusiast" but also with LLM-generated details such as favorite weekend activities, music tastes, and attitudes toward risk. When exposed to a campaign about "adventure and nightlife," the simulation should then yield richer, more differentiated feedback. Some personas might love the party angle, while others with similar demographics might reject it because of their LLM-inferred hobbies or aversions.

Example of misapplication: If a research team skips this enrichment and uses only generic, template-like customer segments, its AI audience will likely over-index on demographic stereotypes, producing bland or overly predictable results. For example, all "young adults" might rate the same message identically. The simulation would then miss the within-segment variation that, in a real and diverse focus group, surfaces unexpected feedback and prompts brands to rethink their creative strategy.

🗺️ What are the Implications?

• Ground your simulated audience in real-world data wherever possible: Use actual survey or demographic data to build your AI personas, as this approach produced more realistic and representative audience responses than random assignment.

• Enrich your personas for realism and diversity: The study expanded basic demographic personas by prompting the LLM to add plausible hobbies, opinions, and attitudes, which produced richer, more human-like responses. This extra step makes simulated feedback more useful for market research.

• Be cautious of "echo chamber" effects in your virtual audiences: Simulations that only expose personas to similar views tend to underestimate disagreement and controversy, while those that simply maximize engagement can overstate toxicity; balance is key for credible results.

• Test interventions that increase cross-group engagement: The findings show that algorithms (or research designs) that highlight consensus or cross-group approval can foster more constructive, less toxic discussions—consider using these approaches in concept tests, ad testing, or brand studies.

• Use automated toxicity and diversity checks as part of your analysis: Incorporate tools to measure whether your simulated conversations are civil or devolve into negativity, and whether cross-group interactions are happening, to validate your results before sharing with stakeholders.

• Plan for a small amount of human review or calibration: Even with advanced AI, spot-checking or comparing a sample of synthetic results to real human responses can catch blind spots and build business confidence in the method.
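The toxicity check and the human spot-check from the list above can be added to an analysis pipeline with very little code. This sketch assumes that per-message toxicity scores in [0, 1] have already been produced by some classifier; which classifier to use is left open. The 0.5 flagging threshold and the per-segment sample size are arbitrary illustrative choices, not values from the study.

```python
import random
from collections import defaultdict
from statistics import mean


def toxicity_report(scores, threshold=0.5):
    """Summarize per-message toxicity scores in [0, 1]; `threshold` is an illustrative cutoff."""
    if not scores:
        raise ValueError("no toxicity scores to summarize")
    flagged = sum(1 for s in scores if s >= threshold)
    return {"mean_toxicity": mean(scores), "share_flagged": flagged / len(scores)}


def review_sample(messages, per_segment=2, seed=0):
    """Draw up to `per_segment` texts per segment from (segment, text) pairs for human review."""
    by_segment = defaultdict(list)
    for segment, text in messages:
        by_segment[segment].append(text)
    rng = random.Random(seed)  # fixed seed so reviewers see a reproducible sample
    return {seg: rng.sample(texts, min(per_segment, len(texts)))
            for seg, texts in by_segment.items()}
```

Sampling per segment, rather than from the pooled output, helps ensure that small segments still get at least a few human eyes on them.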

📄 Prompts

Prompt Explanation: The AI was prompted to expand an existing persona profile generated from survey data by adding plausible personality traits, hobbies, favorite sports teams, political opinions, and other attributes, and to generate a name for the persona.

“You will get a description of a person. Your task is to add other plausible personality traits that fits the described person, such as hobbies, favorite sports teams, specific political opinions, or other personality attributes. Give the person a name and a surname.

[Respond with the new attributes. Use concise language and respond briefly. Only list the traits, without saying e.g. ‘additional personality traits’ or describing the task.]”
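To make the enrichment step concrete, here is a minimal Python sketch that turns one survey record into a plain-text description and appends the LLM's additions. The survey field names, the `call_llm` callable, and the "Description:" framing are hypothetical assumptions. This summary does not say how the paper packaged the instruction and the description into messages.

```python
from typing import Callable

# Instruction text as quoted above (original wording kept verbatim).
ENRICH_INSTRUCTIONS = (
    "You will get a description of a person. Your task is to add other plausible "
    "personality traits that fits the described person, such as hobbies, favorite "
    "sports teams, specific political opinions, or other personality attributes. "
    "Give the person a name and a surname. [Respond with the new attributes. Use "
    "concise language and respond briefly. Only list the traits, without saying "
    "e.g. 'additional personality traits' or describing the task.]"
)


def describe(row: dict) -> str:
    """Turn one survey record (hypothetical field names) into a plain-text description."""
    return "; ".join(f"{key.replace('_', ' ')}: {value}" for key, value in row.items())


def enrich_persona(row: dict, call_llm: Callable[[str], str]) -> str:
    """Return the survey-based description followed by the LLM-generated traits."""
    base = describe(row)
    prompt = f"{ENRICH_INSTRUCTIONS}\n\nDescription: {base}"  # hypothetical framing
    extra = call_llm(prompt).strip()
    return f"{base}\n{extra}"
```

Keeping the survey-derived description first and appending the generated traits lets you audit which attributes came from data and which were invented by the model.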

Prompt Explanation: The AI was instructed to select a news headline to share on social media for a given persona and compose a comment of 10–50 words about it in the style of that persona.

Here follows a list of headlines from the newspaper:

{List of news headlines with summaries}

Choose exactly one of these headlines to share on your social media feed based on your persona, and write a comment of 10-50 words about that one headline in the style of your personality. (Additional formatting instructions)

Prompt Explanation: The AI was guided to choose the post from a presented timeline that the assigned persona was most likely to react to emotionally, and to respond naturally in that persona's style. It was explicitly permitted to use sarcasm, irony, swearing, lies, threats, or personal attacks.

You are using a social media platform, and see the following message threads:

{Timeline}

Choose exactly one of these posts / message threads that your persona is likely to react to emotionally, either because you strongly agree or because your strongly disagree. Engage in natural conversation by writing a response to this message in the style of your personality. You may use sarcasm or irony, swear, criticize, threat, lie, and engage in personal attacks. You can also bring up a related topic that the post made you think of.

(Additional formatting instructions)

Prompt Explanation: The AI was asked to evaluate a list of messages for a given persona, deciding whether to "like" or take no action, only endorsing those messages the persona feels positive about.

You are using a social media platform, and see the following messages:

{Timeline}

Based on your persona, decide if you want to react to each message. Your possible actions are ’press like’ and ’no action’. Only like messages that you endorse, and that you feel positive about. (Additional formatting instructions)
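The like prompt leaves its output format to "(Additional formatting instructions)", so any reimplementation needs its own convention for parsing replies. Below is a minimal sketch that numbers the timeline, fills the prompt, and turns a reply into like decisions. The one-line-per-message answer format is a hypothetical stand-in, not the paper's actual instruction.

```python
import re

LIKE_PROMPT = (
    "You are using a social media platform, and see the following messages:\n\n"
    "{timeline}\n\n"
    "Based on your persona, decide if you want to react to each message. Your possible "
    "actions are 'press like' and 'no action'. Only like messages that you endorse, and "
    "that you feel positive about.\n"
    # Hypothetical stand-in for the elided "(Additional formatting instructions)".
    "Answer with one line per message in the form '<number>: press like' or '<number>: no action'."
)


def format_timeline(messages):
    """Number each (author, text) pair so the model can refer to it by index."""
    return "\n".join(f"[{i}] {author}: {text}" for i, (author, text) in enumerate(messages, 1))


def parse_likes(reply, n_messages):
    """Return sorted 0-based indices marked 'press like'; skip malformed or out-of-range lines."""
    liked = set()
    pattern = r"^\s*\[?(\d+)\]?\s*:\s*press like\s*$"
    for match in re.finditer(pattern, reply, re.IGNORECASE | re.MULTILINE):
        idx = int(match.group(1)) - 1
        if 0 <= idx < n_messages:
            liked.add(idx)
    return sorted(liked)
```

Ignoring unparseable lines instead of raising keeps one malformed reply from halting a long run. Logging how often this happens is a useful check on prompt quality.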

⏰ When is this relevant?

A financial services company wants to test how three types of retail investors—first-time investors, experienced DIY investors, and “set-and-forget” retirement savers—would react to a new investment platform with unique social features (e.g., peer leaderboards, group messaging, and shared research highlights). The team wants to simulate user feedback and conversation dynamics to anticipate risks and opportunities before a real launch.

🔢 Follow the Instructions:

1. Define audience segments: Create three AI persona profiles, each representing a distinct investor type. For each, specify age, experience level, main goal, comfort with technology, and risk tolerance. Example:

• First-time investor: 25, new to investing, wants to start small, values simplicity, low risk.

• Experienced DIY investor: 40, active trader, uses multiple apps, seeks advanced tools, medium-to-high risk.

• Retirement saver: 58, focuses on long-term growth, prefers automation, minimal daily involvement, low risk.

2. Prepare a prompt template for persona simulation: Use this template for each simulated user:

You are simulating a [persona description].

Here is the platform being tested: "A new investment app that lets users join investment groups, see group rankings (leaderboards), share tips and research, and chat with other investors."

You are being interviewed by a product researcher.

Respond naturally and honestly as your persona, using 3–5 sentences.

The researcher may ask follow-up questions. Only answer as the persona.

First question: What is your first reaction to this investment app and its social features?

3. Generate initial responses for each persona: For each segment, run the template through the AI model 5–10 times, slightly rewording the question (“What stands out to you about these group features?” or “Would you use the group chat or rankings?”) to get a range of reactions.

4. Add conversational follow-ups: Based on initial answers, ask 1–2 follow-up questions per persona (e.g., “Would seeing other investors’ results affect your confidence or behavior?”, “How important is privacy in group investing?”). Capture these as back-and-forth dialogues.

5. Simulate group dynamics for key scenarios: For each key scenario, simulate a short exchange among 3–5 personas drawn from the different segments, focused on a controversial feature (e.g., “Should group trades be public or private?”). Use this prompt:

You are simulating a group chat among [persona types]. Topic: "[Insert feature or question]". Each persona should respond in turn, showing their individual perspective and how they react to others.

6. Analyze results for sentiment and risks: Review and tag responses for positive/negative sentiment, mentions of privacy, competitive spirit, confusion, or excitement. Note any patterns or points of friction for each segment.

7. Summarize actionable insights: Compare which features resonate best with each segment, what concerns are most common, and where messaging or onboarding may need adjustment.
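Steps 2, 3, and 6 can be sketched in a few lines of Python. The sketch assumes a hypothetical `call_llm(prompt) -> str` function for whatever model you use. It tags responses with illustrative keyword lists, which are only a crude stand-in for the manual review or sentiment tooling that step 6 describes. Follow-ups (step 4) and group chats (step 5) would reuse the same pattern with different prompt text.

```python
import re
from collections import Counter
from typing import Callable, Dict

# Step 1: persona descriptions for the three segments.
PERSONAS = {
    "first_time": "first-time investor, 25, new to investing, wants to start small, values simplicity, low risk tolerance",
    "diy": "experienced DIY investor, 40, active trader, uses multiple apps, seeks advanced tools, medium-to-high risk tolerance",
    "retirement": "retirement saver, 58, focuses on long-term growth, prefers automation, minimal daily involvement, low risk tolerance",
}

PLATFORM = ("A new investment app that lets users join investment groups, see group rankings "
            "(leaderboards), share tips and research, and chat with other investors.")

# Step 3: slightly reworded versions of the opening question.
QUESTIONS = [
    "What is your first reaction to this investment app and its social features?",
    "What stands out to you about these group features?",
    "Would you use the group chat or rankings?",
]

# Step 6: hypothetical keyword lists, matched as whole words.
TAGS = {
    "privacy": ("privacy", "private", "share my"),
    "competition": ("leaderboard", "leaderboards", "ranking", "rankings", "compete"),
    "confusion": ("confusing", "complicated", "overwhelming"),
    "excitement": ("love", "excited", "great"),
}


def interview_prompt(persona: str, question: str) -> str:
    """Fill the step 2 template for one persona and one question wording."""
    return (f"You are simulating a {persona}.\n"
            f'Here is the platform being tested: "{PLATFORM}"\n'
            "You are being interviewed by a product researcher.\n"
            "Respond naturally and honestly as your persona, using 3–5 sentences.\n"
            "The researcher may ask follow-up questions. Only answer as the persona.\n"
            f"First question: {question}")


def tag(text: str) -> set:
    """Return the names of all tags whose keywords appear as whole words in the text."""
    lowered = text.lower()
    return {name for name, words in TAGS.items()
            if any(re.search(rf"\b{re.escape(w)}\b", lowered) for w in words)}


def run_interviews(call_llm: Callable[[str], str], runs_per_question: int = 2) -> Dict[str, Counter]:
    """Collect repeated answers per segment and count how often each tag appears."""
    counts = {seg: Counter() for seg in PERSONAS}
    for seg, persona in PERSONAS.items():
        for question in QUESTIONS:
            for _ in range(runs_per_question):
                counts[seg].update(tag(call_llm(interview_prompt(persona, question))))
    return counts
```

Comparing tag counts across segments gives a quick first read for step 7. Any surprising pattern should still be checked against the full transcripts before anyone acts on it.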

🤔 What should I expect?

You should get an early preview of likely customer reactions to social and competitive features, a sense of which investor types are most enthusiastic or hesitant, and clear pointers to risks (e.g., privacy worries, intimidation by leaderboards) and messaging opportunities, all before investing in a real-world pilot or focus group.