Back to n8n Workflows

Rhys Fisher

Rhys Fisher

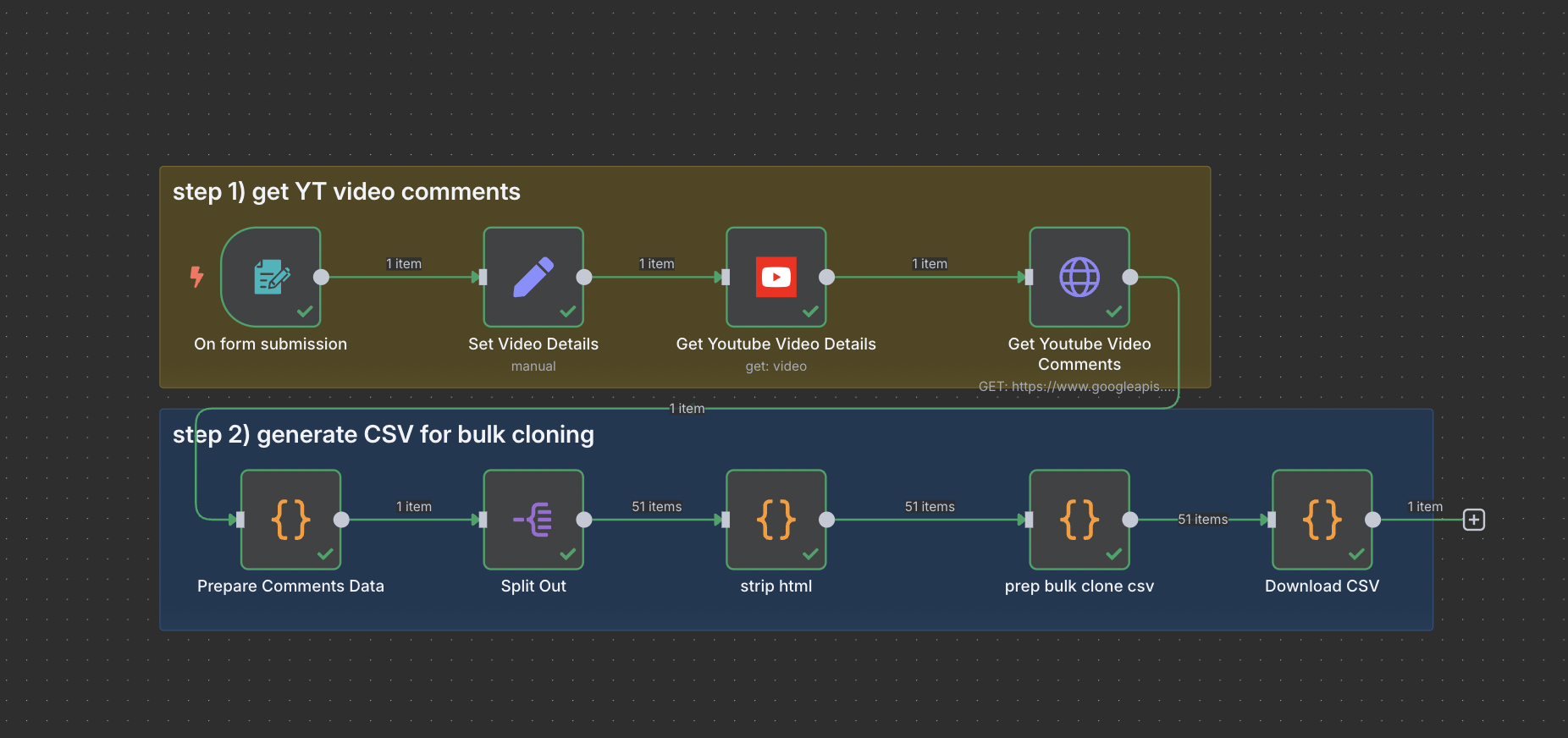

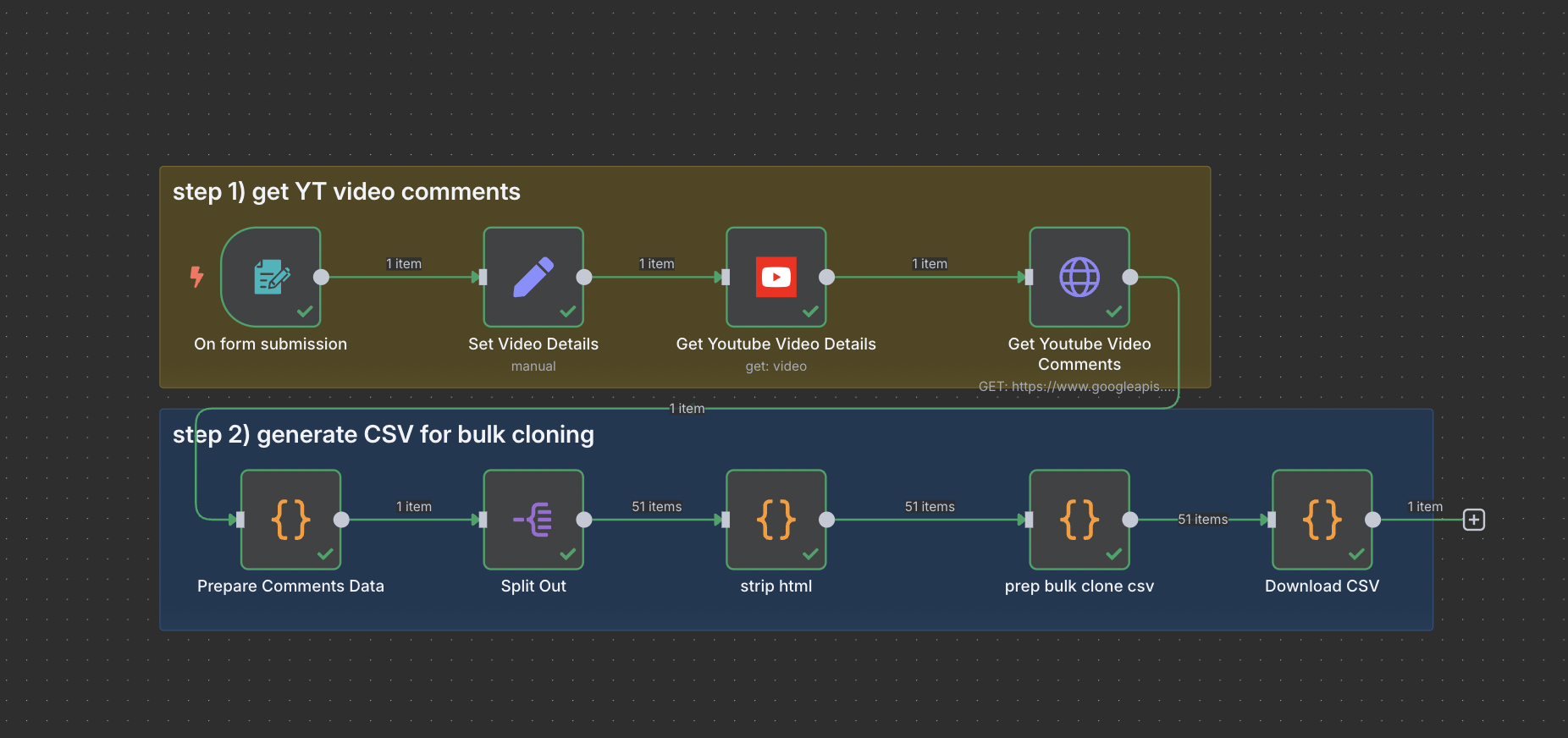

Generate Bulk Clone CSV From YouTube Comments

Rhys Fisher

Rhys Fisher

Extract 100 Youtube comments and convert them into a correctly formatted CSV ready for bulk cloning. Simply paste a YouTube Video ID and download the end CSV, then upload it straight into AskRally audience generator.

Click to expand

Summarize in

OR

Summarize in

OR

🚀 n8n Workflow Template

{

"active": false,

"connections": {

"Get Youtube Video Comments": {

"main": [

[

{

"index": 0,

"node": "Prepare Comments Data",

"type": "main"

}

]

]

},

"Get Youtube Video Details": {

"main": [

[

{

"index": 0,

"node": "Get Youtube Video Comments",

"type": "main"

}

]

]

},

"On form submission": {

"main": [

[

{

"index": 0,

"node": "Set Video Details",

"type": "main"

}

]

]

},

"Prepare Comments Data": {

"main": [

[

{

"index": 0,

"node": "Split Out",

"type": "main"

}

]

]

},

"Set Video Details": {

"main": [

[

{

"index": 0,

"node": "Get Youtube Video Details",

"type": "main"

}

]

]

},

"Split Out": {

"main": [

[

{

"index": 0,

"node": "strip html",

"type": "main"

}

]

]

},

"prep bulk clone csv": {

"main": [

[

{

"index": 0,

"node": "Download CSV",

"type": "main"

}

]

]

},

"strip html": {

"main": [

[

{

"index": 0,

"node": "prep bulk clone csv",

"type": "main"

}

]

]

}

},

"id": "z1ZF1xnbQlsLslbT",

"meta": {

"instanceId": "7921b3cd29c1121b3ec4f2177acf06fe1f1325838297f593db7db4e9563eb98d",

"templateCredsSetupCompleted": true

},

"name": "Youtube Top Comment Bulk Clone CSV Prep",

"nodes": [

{

"id": "8f291a6f-b523-4db4-93ca-d8f643d399cc",

"name": "Set Video Details",

"notes": "Prepares video ID and sets max comments limit (100)",

"parameters": {

"assignments": {

"assignments": [

{

"id": "219795ef-daa4-4444-9865-c5d3856be63b",

"name": "videoId",

"type": "string",

"value": "={{ $json[\u0027Youtube Video ID\u0027] }}"

},

{

"id": "cd4f519d-4c84-496c-8974-29ef69c890fc",

"name": "maxComments ",

"type": "number",

"value": 100

}

]

},

"options": {}

},

"position": [

-1000,

320

],

"type": "n8n-nodes-base.set",

"typeVersion": 3.4

},

{

"credentials": {

"youTubeOAuth2Api": {

"id": "HLjrqi36xLQPdjoE",

"name": "YouTube account"

}

},

"id": "c9f954bd-ad28-4149-b829-cd7449a45afa",

"name": "Get Youtube Video Details",

"notes": "Fetches video metadata including title, channel name, and other details",

"parameters": {

"operation": "get",

"options": {},

"resource": "video",

"videoId": "={{ $json.videoId }}"

},

"position": [

-760,

320

],

"type": "n8n-nodes-base.youTube",

"typeVersion": 1

},

{

"credentials": {

"youTubeOAuth2Api": {

"id": "HLjrqi36xLQPdjoE",

"name": "YouTube account"

}

},

"id": "413d607c-c610-4614-9287-1eed0d212e10",

"name": "Get Youtube Video Comments",

"notes": "Retrieves top 100 comments ordered by relevance using YouTube API",

"parameters": {

"authentication": "predefinedCredentialType",

"nodeCredentialType": "youTubeOAuth2Api",

"options": {},

"queryParameters": {

"parameters": [

{

"name": "part",

"value": "snippet"

},

{

"name": "videoId",

"value": "={{ $(\u0027Set Video Details\u0027).item.json.videoId }}"

},

{

"name": "maxResults",

"value": "100"

},

{

"name": "order",

"value": "relevance"

}

]

},

"sendQuery": true,

"url": "https://www.googleapis.com/youtube/v3/commentThreads"

},

"position": [

-460,

320

],

"type": "n8n-nodes-base.httpRequest",

"typeVersion": 4.2

},

{

"id": "1d026110-c086-4a3b-9976-906a25e147be",

"name": "Prepare Comments Data",

"notes": "Processes raw comments: extracts text, calculates stats, performs basic sentiment analysis, limits to 50 comments for AI",

"parameters": {

"jsCode": "// Get comments from HTTP Request node\nconst comments = $input.first().json.items\n//const comments = response.items || [];\n\n// Get video title from the YouTube node (step 5)\n//const videoData = ;\nconst videoTitle = $(\u0027Get Youtube Video Details\u0027).first().json.snippet.title;\n\n// Extract comment data\nconst processedComments = comments.map(item =\u003e {\n const comment = item.snippet.topLevelComment.snippet;\n return {\n text: comment.textDisplay,\n author: comment.authorDisplayName,\n likes: comment.likeCount || 0,\n publishedAt: comment.publishedAt,\n replyCount: item.snippet.totalReplyCount || 0\n };\n});\n\n// Calculate statistics\nconst totalComments = processedComments.length;\nconst totalLikes = processedComments.reduce((sum, c) =\u003e sum + c.likes, 0);\nconst avgLikes = totalComments \u003e 0 ? (totalLikes / totalComments).toFixed(2) : 0;\nconst totalReplies = processedComments.reduce((sum, c) =\u003e sum + c.replyCount, 0);\n\n// Get top comments by likes\nconst topComments = processedComments\n .sort((a, b) =\u003e b.likes - a.likes)\n .slice(0, 5);\n\n// Prepare comment texts for AI analysis\nconst commentTexts = processedComments\n .slice(0, 50) // Limit to 50 comments for AI analysis\n .map(c =\u003e c.text)\n .join(\u0027\\n---\\n\u0027);\n\n// Basic sentiment analysis (count positive/negative keywords)\nconst positiveWords = [\u0027love\u0027, \u0027great\u0027, \u0027awesome\u0027, \u0027amazing\u0027, \u0027excellent\u0027, \u0027good\u0027, \u0027fantastic\u0027, \u0027helpful\u0027, \u0027thanks\u0027];\nconst negativeWords = [\u0027hate\u0027, \u0027terrible\u0027, \u0027awful\u0027, \u0027bad\u0027, \u0027worst\u0027, \u0027horrible\u0027, \u0027useless\u0027, \u0027waste\u0027];\n\nlet positiveCount = 0;\nlet negativeCount = 0;\n\nprocessedComments.forEach(comment =\u003e {\n const lowerText = comment.text.toLowerCase();\n positiveWords.forEach(word =\u003e {\n if (lowerText.includes(word)) positiveCount++;\n });\n negativeWords.forEach(word =\u003e {\n if (lowerText.includes(word)) negativeCount++;\n });\n});\n\nreturn [{\n json: {\n videoTitle,\n totalComments,\n avgLikes,\n totalReplies,\n topComments,\n commentTexts,\n processedComments,\n sentimentCounts: {\n positive: positiveCount,\n negative: negativeCount,\n neutral: totalComments - positiveCount - negativeCount\n }\n }\n}];\n\n"

},

"position": [

-1240,

560

],

"type": "n8n-nodes-base.code",

"typeVersion": 2

},

{

"id": "74165240-c640-4b38-a86a-72093c4613e6",

"name": "On form submission",

"parameters": {

"formDescription": "We\u0027ll turn Youtube comments into AI personas",

"formFields": {

"values": [

{

"fieldLabel": "Youtube Video ID"

}

]

},

"formTitle": "Clone Youtube Commenters",

"options": {}

},

"position": [

-1260,

320

],

"type": "n8n-nodes-base.formTrigger",

"typeVersion": 2.2,

"webhookId": "edac4171-af91-488b-acd6-abd3158ad53a"

},

{

"id": "0e0cb510-43ce-4b3e-80e9-4f1bea5dc180",

"name": "Split Out",

"parameters": {

"fieldToSplitOut": "processedComments",

"options": {

"destinationFieldName": "="

}

},

"position": [

-1000,

560

],

"type": "n8n-nodes-base.splitOut",

"typeVersion": 1

},

{

"id": "17eb31bb-f6fb-470e-8273-0d9f9772d543",

"name": "prep bulk clone csv",

"parameters": {

"jsCode": "// incoming items = one item per comment, each with a `text` field\nconst columns = [\n \u0027name\u0027,\u0027age\u0027,\u0027gender\u0027,\u0027location\u0027,\n \u0027occupation\u0027,\u0027income\u0027,\u0027background\u0027,\u0027data\u0027\n];\n\nconst rows = items.map(i =\u003e {\n const r = {};\n columns.forEach(c =\u003e r[c] = \u0027\u0027); // blank out every column\n r.data = i.json.text; // but fill `data`\n return r;\n});\n\n// hand the array forward so the Spreadsheet node can write it\nreturn rows.map(r =\u003e ({ json: r }));\n"

},

"position": [

-460,

560

],

"type": "n8n-nodes-base.code",

"typeVersion": 2

},

{

"id": "ca564346-940e-4993-9f66-6fae32fcce29",

"name": "strip html",

"parameters": {

"jsCode": "const decode = html =\u003e {\n return html\n .replace(/\u0026quot;/g, \u0027\"\u0027)\n .replace(/\u0026#39;/g, \"\u0027\")\n .replace(/\u0026amp;/g, \u0027\u0026\u0027)\n .replace(/\u0026lt;/g, \u0027\u003c\u0027)\n .replace(/\u0026gt;/g, \u0027\u003e\u0027)\n .replace(/\u003cbr\\s*\\/?\u003e/gi, \u0027\\n\u0027) // convert \u003cbr\u003e to newlines\n .replace(/\u003c[^\u003e]*\u003e/g, \u0027\u0027) // remove all remaining HTML tags\n .trim();\n};\n\nreturn items.map(item =\u003e {\n const cleanText = decode(item.json.text);\n return {\n json: {\n ...item.json,\n text: cleanText // or put it in a new field like `clean`\n }\n };\n});\n"

},

"position": [

-760,

560

],

"type": "n8n-nodes-base.code",

"typeVersion": 2

},

{

"id": "c79c853a-257d-4424-ae1e-d245eaa63e6b",

"name": "Download CSV",

"parameters": {

"jsCode": "const columns = Object.keys(items[0].json);\nconst header = columns.join(\u0027,\u0027) + \u0027\\n\u0027;\n\nconst rows = items.map(i =\u003e {\n return columns.map(c =\u003e {\n const val = i.json[c] || \u0027\u0027;\n return `\"${val.replace(/\"/g, \u0027\"\"\u0027)}\"`;\n }).join(\u0027,\u0027);\n});\n\nconst csv = header + rows.join(\u0027\\n\u0027);\n\nreturn [{\n binary: {\n data: {\n data: Buffer.from(csv).toString(\u0027base64\u0027),\n mimeType: \u0027text/csv\u0027,\n fileName: \u0027rally_bulk_clone.csv\u0027\n }\n }\n}];\n"

},

"position": [

-220,

560

],

"type": "n8n-nodes-base.code",

"typeVersion": 2

},

{

"id": "23b30bdf-768d-4bc2-bf95-76e46e1e8ecb",

"name": "Sticky Note",

"parameters": {

"content": "## step 1) get YT video comments",

"height": 220,

"width": 1040

},

"position": [

-1320,

260

],

"type": "n8n-nodes-base.stickyNote",

"typeVersion": 1

},

{

"id": "992a49f3-7341-4eeb-9476-d65670780de8",

"name": "Sticky Note1",

"parameters": {

"color": 5,

"content": "## step 2) generate CSV for bulk cloning",

"height": 220,

"width": 1260

},

"position": [

-1320,

500

],

"type": "n8n-nodes-base.stickyNote",

"typeVersion": 1

}

],

"pinData": {},

"settings": {

"executionOrder": "v1"

},

"tags": [],

"versionId": "526403fb-9ba5-41cd-ad7f-a45b829b14c8"

}About the Author

Rhys Fisher

Rhys Fisher is the COO & Co-Founder of Rally. He previously co-founded a boutique analytics agency called Unvanity, crossed the Pyrenees coast-to-coast via paraglider, and now watches virtual crowds respond to memes. Follow him on Twitter @virtual_rf