My friend Johannes, who mentors at 500 Startups, recently cornered me with a complaint: "Why are you still using demographic personas? You know they don’t work. You need Jobs to Be Done." Having advised many startups and built a successful one himself, he felt it was more important to know what problem someone was trying to solve than their age, gender, and location. He gave me an example he uses in presentations to make his point:

Source: https://cxinspiration.blog/get-beyond-sociodemographic-twins-to-shared-experiences-of-life-2328/

Both Prince Charles and Ozzy Osbourne are male, over 70, from the UK, twice married, wealthy and famous castle dwellers, and yet you should market to them completely differently.

As someone building AI personas at Ask Rally, this hit close to home. We'd been relying heavily on demographics and psychographics – because most synthetic research builds personas that way – but we are constantly searching for ways to improve predictive power.

So we ran an experiment that would either validate our approach or give us a path to improvement.

Digital Twins of Early Adopters

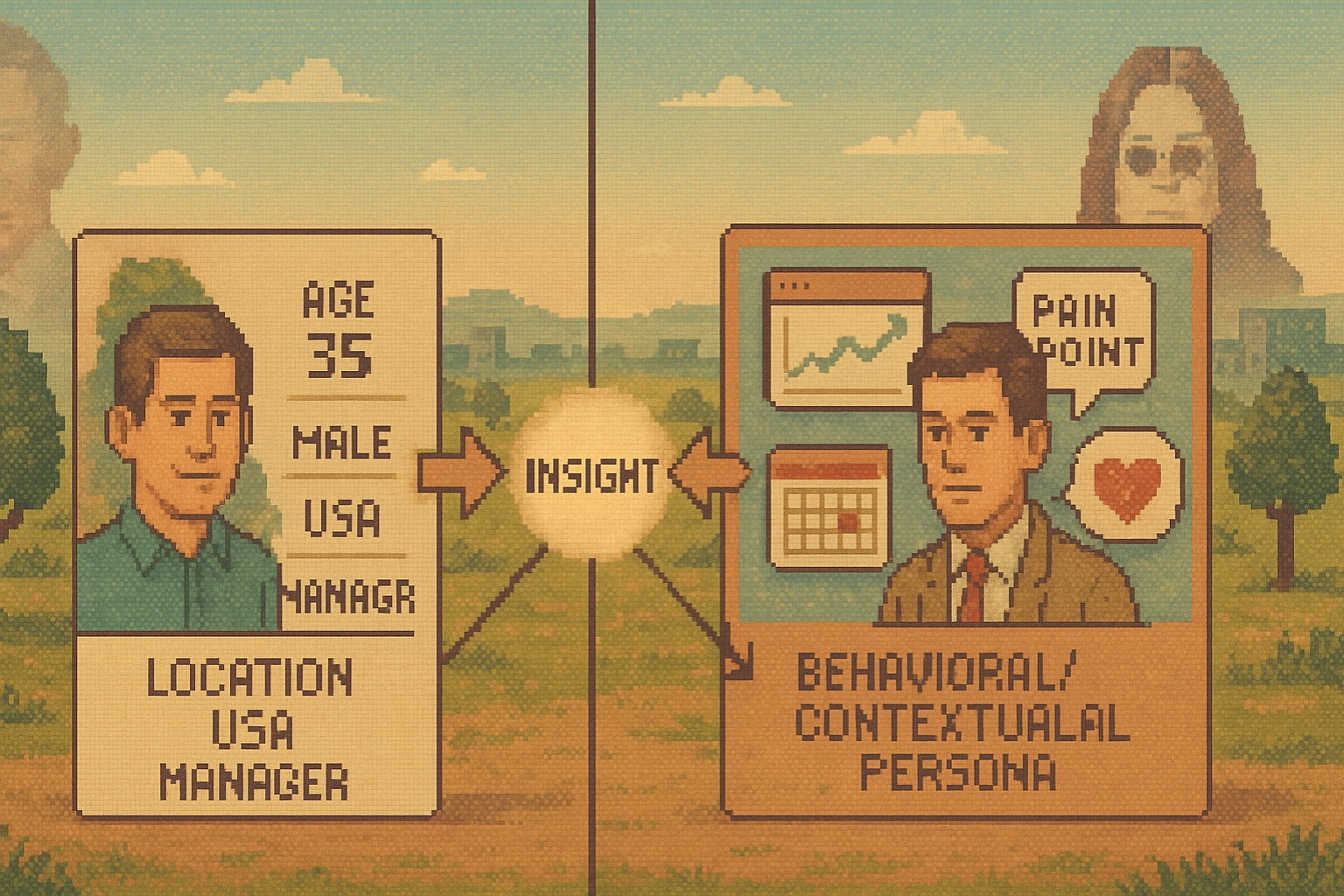

We created 64 synthetic personas from real customer interviews, each containing 25 attributes across five categories:

Demographics (5 traits)

- Basic identifiers: age, gender, location, occupation, education
- The traditional foundation of market segmentation
- What Rally uses today

Psychographics (5 traits)

- Big Five personality model: openness, conscientiousness, extraversion, agreeableness, neuroticism
- Scored 0-1 to capture personality-driven preferences
- Also included in Rally personas

Descriptive (5 traits)

- Professional headlines, personality summaries, biographies
- Rich narrative content about their professional journey
- Example: [Persona] has been running a Substack newsletter since August 2023, focusing on developing a community around his content. He is exploring various content formats, including audio and video, to reach a broader audience...

Behavioral (5 traits)

- Website visits, competitor mentions, traditional research usage
- Custom solution building, onboarding survey completion
- What people actually do vs. who they are

Contextual (5 traits)

- Pain points, constraints, trigger events (Jobs to Be Done framework)
- Alternative solutions considered, success criteria
- Why someone needs a solution right now

The experiment was to see what was most important to know about a person if you want to predict their behavior using AI personas. In our case, we attempted to predict who from our initial customer development calls would actually go on to use our product.

The Anatomy of a Persona

Here's what we discovered:

Most AI models suffer from a "positivity bias": they predict "yes" for everyone when you ask whether that person would likely use the product. If you’re using ChatGPT for synthetic research you might as well ask your mother, because they’ll both tell you your startup idea is great. In our test, the baseline model achieved a respectable F1-score (the harmonic mean of precision and recall) of 0.593 but identified exactly zero true negatives, a useful example of why you can’t just check a single ‘accuracy’ number. That's a 100% false positive rate, meaning the simulation said yes for every person who didn’t use the product in real life.

Behavioral features are the only category that says "no" often enough. While demographics, psychographics, and descriptive traits all failed to identify enough negatives, behavioral features correctly identified 33 out of 37 non-users, an 89% specificity rate. It’s best to have both behavioral and contextual data. This pairing achieved our best non-optimized performance (F1: 0.617) using just 10 features instead of 25, an 8.1x efficiency improvement. Looking at what the personas said in their thinking process, knowing that someone hadn’t visited the marketing website often was an important predictor.
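The two results above can be checked with a little arithmetic. The sketch below assumes all 64 personas were scored and that the 37 non-users mentioned above are the full negative class, which leaves 27 real users; that split is inferred from the numbers in this post rather than stated directly. The function names are ours, for illustration only.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def specificity(tn: int, fp: int) -> float:
    """Share of real non-users that were correctly predicted as 'no'."""
    total = tn + fp
    return tn / total if total else 0.0


TOTAL_PERSONAS = 64
NON_USERS = 37
USERS = TOTAL_PERSONAS - NON_USERS  # 27, inferred (assumption), not stated in the post

# Always-yes baseline: every user is a true positive, every non-user a false positive.
baseline_f1 = f1_score(tp=USERS, fp=NON_USERS, fn=0)
baseline_specificity = specificity(tn=0, fp=NON_USERS)

# Behavioral-only run: 33 of the 37 non-users were correctly rejected.
behavioral_specificity = specificity(tn=33, fp=NON_USERS - 33)

print(f"baseline F1:            {baseline_f1:.3f}")             # 0.593
print(f"baseline specificity:   {baseline_specificity:.3f}")    # 0.000
print(f"behavioral specificity: {behavioral_specificity:.3f}")  # 0.892
```

An always-yes predictor gets perfect recall for free, which is why its F1 looks respectable while its specificity is zero. Reporting both numbers side by side is what exposes the positivity bias.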

When you have lots of information, contextual information is the most unique. When we tested feature importance by excluding one category at a time (i.e. keeping everything but that category), the contextual Jobs to Be Done information was the most harmful to remove, because it gave the most unique information. The behavioral features appeared completely redundant when you had all the other features: their removal caused zero performance change. Furthermore, including some categories, like psychographics, actually harmed accuracy. In ensemble models, precise discriminative signals (like the behavioral traits) get overwhelmed by high-recall features (like the contextual info). It's like trying to hear a violin in a heavy metal concert: the signal is there, but it's drowned out.
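For readers who want to run the same kind of leave-one-category-out test on their own data, here is a minimal sketch. The `score` callable stands in for whatever runs your persona simulation and returns an F1-score; `toy_score` and its numbers are placeholders we made up so the example runs, not the results of our study.

```python
from typing import Callable, Dict, List, Sequence

CATEGORIES: List[str] = [
    "demographics", "psychographics", "descriptive", "behavioral", "contextual",
]


def leave_one_out(
    categories: Sequence[str],
    score: Callable[[List[str]], float],
) -> Dict[str, float]:
    """Return the change in score when each category is removed in turn.

    A negative value means removing the category hurt performance,
    zero means it was redundant, positive means it was doing harm.
    """
    full_score = score(list(categories))
    deltas: Dict[str, float] = {}
    for removed in categories:
        kept = [c for c in categories if c != removed]
        deltas[removed] = score(kept) - full_score
    return deltas


# Placeholder effects for illustration only (assumption, NOT the study's numbers).
TOY_EFFECTS = {"contextual": 0.10, "psychographics": -0.02}


def toy_score(kept: List[str]) -> float:
    """Stand-in for a real evaluation run over the personas."""
    return 0.50 + sum(effect for cat, effect in TOY_EFFECTS.items() if cat in kept)


deltas = leave_one_out(CATEGORIES, toy_score)
for category, delta in sorted(deltas.items(), key=lambda kv: kv[1]):
    print(f"{category:15s} {delta:+.3f}")
```

In a real run you would swap `toy_score` for a function that rebuilds each persona from the kept categories, queries the model, and scores the predictions against actual usage.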

Some combinations of features work better than others. Instead of throwing all 25 features at the problem, we tested targeted combinations. The behavioral + contextual combination was the best performer, which makes intuitive sense. What customers do (behavioral) plus why they need a solution (contextual) creates a complete picture. It's the difference between knowing someone visits your pricing page weekly (behavioral) and understanding they have a budget deadline approaching (contextual).
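Testing targeted combinations mostly comes down to enumerating category subsets and building each persona from only the chosen categories. The sketch below shows one way to do that; the persona dictionary, its trait names, and the `category.trait: value` layout are hypothetical and do not reflect Rally's actual persona schema.

```python
from itertools import combinations
from typing import Iterator, List, Mapping, Sequence, Tuple

CATEGORIES: List[str] = [
    "demographics", "psychographics", "descriptive", "behavioral", "contextual",
]


def category_subsets(
    categories: Sequence[str], max_size: int
) -> Iterator[Tuple[str, ...]]:
    """Yield every combination of categories containing 1..max_size categories."""
    for size in range(1, max_size + 1):
        yield from combinations(categories, size)


def render_persona(
    persona: Mapping[str, Mapping[str, object]],
    categories: Sequence[str],
) -> str:
    """Build the persona text from the selected categories only."""
    lines = []
    for category in categories:
        for trait, value in persona.get(category, {}).items():
            lines.append(f"{category}.{trait}: {value}")
    return "\n".join(lines)


# Hypothetical persona, using the pricing-page example from the text.
persona = {
    "demographics": {"occupation": "founder"},
    "behavioral": {"pricing_page_visits": "weekly"},
    "contextual": {"trigger_event": "budget deadline approaching"},
}

pairs_and_singles = list(category_subsets(CATEGORIES, max_size=2))
print(len(pairs_and_singles))  # 15 subsets: 5 single categories + 10 pairs
print(render_persona(persona, ("behavioral", "contextual")))
```

Each subset can then be scored the same way as the full persona, which makes it easy to compare a 10-feature pairing against all 25 features.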

Advanced prompt optimization can squeeze out more performance. We have seen calibration work wonders before in removing bias from LLMs, so we hoped it would remove some of the positivity bias in predicting who would use our product. DSPy's MIPROv2 optimizer ran hundreds of tests of different prompts, pushing our F1-score to 0.640. Proof that how you ask the question matters almost as much as what data you use. The final prompt that worked best was:

Given detailed persona attributes including behavioral indicators and contextual factors, analyze step-by-step to determine Rally product adoption likelihood. Provide thorough reasoning connecting attributes to Rally's value proposition, then output binary prediction.

Here are the top five strategies that worked across everything we tested:

This is only one study with a limited sample size, so the results are by no means conclusive. However, it’s also super hard to get data like this – most people don’t have both the rich interview transcripts needed to build personas and the actual product usage data joined to them.

Implementing These Insights at AskRally

This research is directly shaping Rally's product evolution:

- Our new memory feature lets you input behavioral and contextual data alongside the demographics and psychographics we currently use.
- We're rethinking and retesting our persona templates to ensure we're collecting the discriminative signals that actually predict adoption.
- We're validating that these patterns hold across different industries and use cases; what works for us might not work for your product.

If you have similar data or know of a public dataset—customer interviews paired with behavioral outcomes—I'd love to collaborate on expanding this research. Different products, industries, and customer types could reveal whether these patterns are universal or context-specific.

The bias in LLMs isn't just a technical problem; it's a fundamental challenge in understanding customers. We’re solving a small part of that challenge with every experiment, with the goal of more accurately simulating real-world responses. AI insights will never be a substitute for talking to customers, but we can do a lot better than asking ChatGPT.