Advertisement creative represents the crucial first impression between potential players and new games, directly impacting acquisition costs and lifetime value. This experiment tests three different compositional approaches for Goblin Quest advertising to understand how framing and focus influence click-through intent and player expectations.

Q4: Advert Preference (Goblin Quest Images)

Mike Taylor

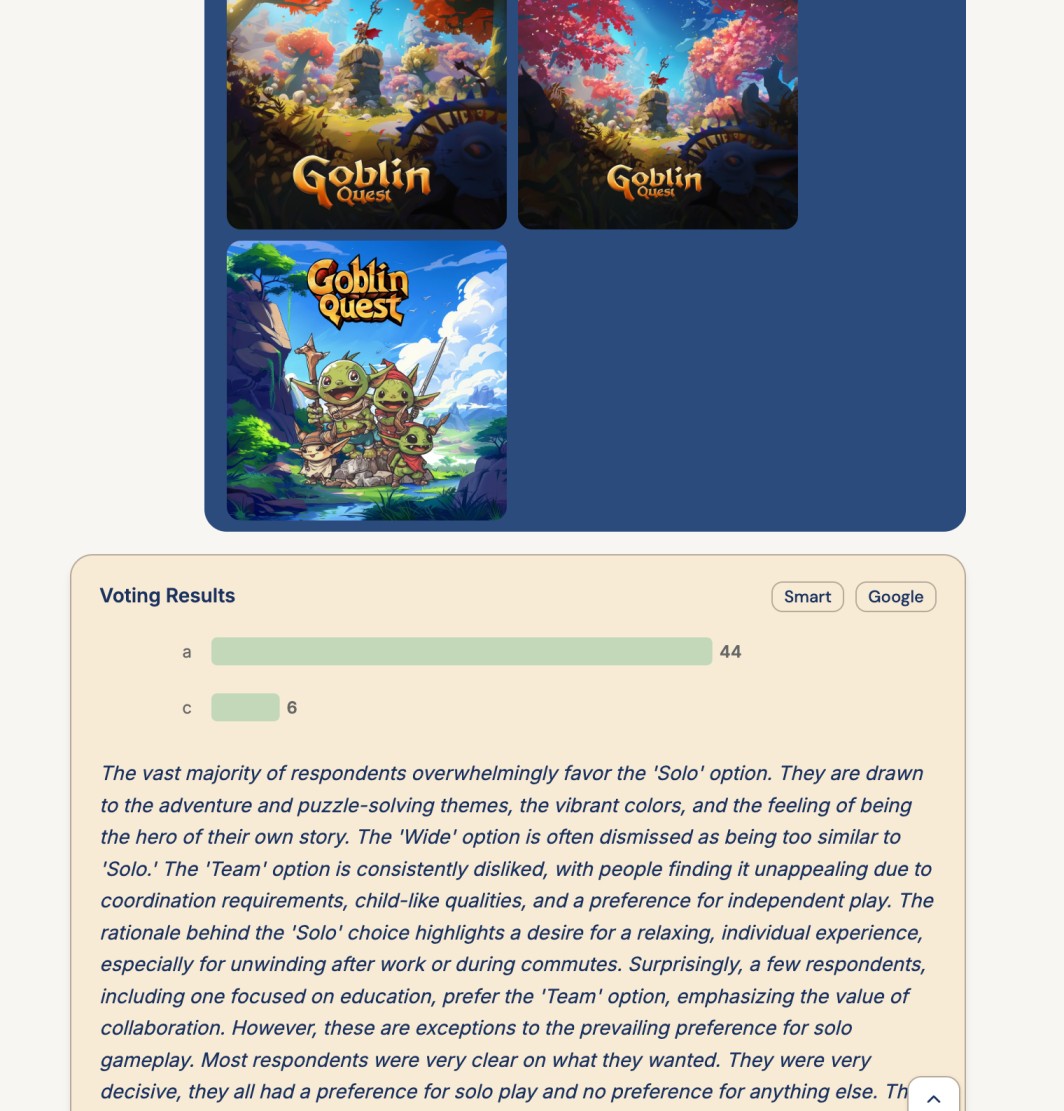

This experiment compared three ad image variations (Solo hero, Wide view, Team shot) to determine which drives higher play intent. It was the only test where the AI incorrectly predicted the winner: Rally put 88% of preference on Solo, which only 38% of human respondents chose, while humans actually preferred Team at 46%.

📊 Hypothesis

"Team-based imagery will outperform solo character advertisements by emphasizing social gameplay elements and collaborative features that appeal to mobile gaming audiences seeking multiplayer experiences."

Introduction

🔬 Methodology

Now we get into some ad testing. The question put to respondents was: “If you saw an advert featuring the images below, which one would you be more likely to play and why?” An ad in the social media feed is often a user's first encounter with a brand, so it strongly shapes the affinity users show that brand over their lifetime, which translates directly into earnings.

Data Source: James Cramer, Skunkworks

Audience: US Mobile Games

Core Demographics:

- Geographic Focus: United States-based mobile game players
- Age Distribution: Primarily middle-aged gamers, with the largest segment being 35-44 years old (42%), followed by equal representation from 25-34 and 45-54 age groups (20% each)
- Gender Split: Male-dominated audience (66%) with significant female representation (30%), plus small percentages of non-binary and other gender identities

Gaming Preferences: This audience shows diverse gaming interests across multiple genres.

- Top Categories: Adventure games (11.5%), Strategy (11%), and Role-playing (10%) represent the most popular genres
- Secondary Interests: Word games (9%), Card games (7%), Trivia (7%), and Simulation games (7%)
- Action & Casual: Moderate interest in Action (12%), Racing (5.5%), Sports (5.5%)
- Niche Segments: Smaller but notable groups enjoy Educational (3.5%), Casino (3%), Family (1.5%), and Music games (0.5%)

Simulator: chat

📈 Results

Performance Metrics

Baseline: 0%

Optimized: 67%

Metric: Alignment (67% achieved)

| Option | PickFu (Human) | Rally |
| --- | --- | --- |
| Solo (A) | 38% | 88% |
| Wide (B) | 16% | 0% |
| Team (C) | 46% | 12% |
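To make the size of the miss concrete, here is a minimal Python sketch that recomputes the winner for each source and the gap per option from the figures in the table. The names `human`, `rally`, `winner`, `gaps`, and `tvd` are illustrative assumptions, and the total variation distance is used only as one plausible way to summarize disagreement; it is not how the Alignment metric reported above is calculated, which this write-up does not specify.

```python
# Preference shares (percent) copied from the results table.
human = {"Solo (A)": 38, "Wide (B)": 16, "Team (C)": 46}  # PickFu respondents
rally = {"Solo (A)": 88, "Wide (B)": 0, "Team (C)": 12}   # Rally prediction


def winner(shares):
    """Return the option with the largest share."""
    return max(shares, key=shares.get)


# Signed gap per option in percentage points; positive means Rally overpredicted.
gaps = {option: rally[option] - human[option] for option in human}

# Total variation distance: half the sum of absolute gaps, in percentage points.
# Assumption: an illustrative summary only, not Rally's Alignment metric.
tvd = sum(abs(gap) for gap in gaps.values()) / 2

print("Human winner:", winner(human))
print("Rally winner:", winner(rally))
print("Gaps (Rally minus human):", gaps)
print("Total variation distance:", tvd)
```

Under these assumptions the two sources disagree on the winner, and half of the preference mass would have to move between options for Rally's prediction to match the human result.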

The feedback here helped me understand why the winning variation was selected, which was helpful because I didn’t expect that to be the winner. Apparently the hero theme was favored over the goblin team, and therefore it makes sense that the more zoomed in version of the hero image (Solo vs Wide) performed better, despite being quite similar. We also got further insight into the audience, with the majority of them preferring solo gameplay, which again I hadn’t expected.

🔍 Analysis

Rally missed the winner in this test. It put 88% on Solo against 38% of human respondents, gave Wide 0% against 16%, and gave Team just 12% against the 46% that made it the human favorite. Solo and Wide were perhaps too similar to identify a significant difference between them. The takeaway here is to make sure you test bigger differences, particularly with visual assets.

💡 Conclusions

This experiment exposed a significant AI preference divergence, representing Rally's only incorrect winner prediction across all tests. The AI dramatically overindexed on Solo imagery (88% vs 38% reality) while humans actually preferred Team compositions (46% vs 12% AI prediction). This reveals potential blind spots in AI testing, particularly around social gameplay preferences and collaborative themes. The experiment also highlighted how naming conventions can influence results, suggesting the importance of neutral descriptors when conducting synthetic testing. Despite the prediction failure, the feedback helped explain why the actual winner succeeded, providing valuable insights about hero themes and player preferences for solo gameplay mechanics.

🧪 Similar Experiments

Q1: Character Designs for a Conquest MMO (Seaborne)

Character design A/B testing for mobile MMO games: AI vs human preferences comparing realistic...

Q2: Theme Preferences (Seaborne)

Mobile game theme testing: AI predicts player preferences for cartoon vs anime vs adventure...

Q3: Theme Preferences (Goblin Quest)

Goblin Quest theme testing achieves 98% accuracy: AI vs human preferences for mobile game visual...

About the Researcher

Mike Taylor

Mike Taylor is the CEO & Co-Founder of Rally. He previously co-founded a 50-person growth marketing agency called Ladder, created marketing & AI courses on LinkedIn, Vexpower, and Udemy taken by over 450,000 people, and published a book with O’Reilly on prompt engineering.