Small stylistic elements can dramatically impact ad performance and download rates in mobile gaming. This experiment examines how subtle variations in character expression and environmental color schemes affect player preference, testing the balance between cheerful accessibility and mysterious intrigue in game presentation.

Q3: Theme Preferences (Goblin Quest)

Mike Taylor


Tested four subtle theme variations for Goblin Quest, combining two goblin designs (friendly vs red-eyed) with two backgrounds (green/blue vs purple). The AI achieved remarkable accuracy, predicting the Bright Sky winner within 2 percentage points (40% predicted vs 42% human preference).

📊 Hypothesis

"Bright, cheerful visual themes will be preferred over darker, mysterious alternatives in mobile gaming due to their broader appeal and positive emotional associations that drive download decisions."

Introduction

🔬 Methodology

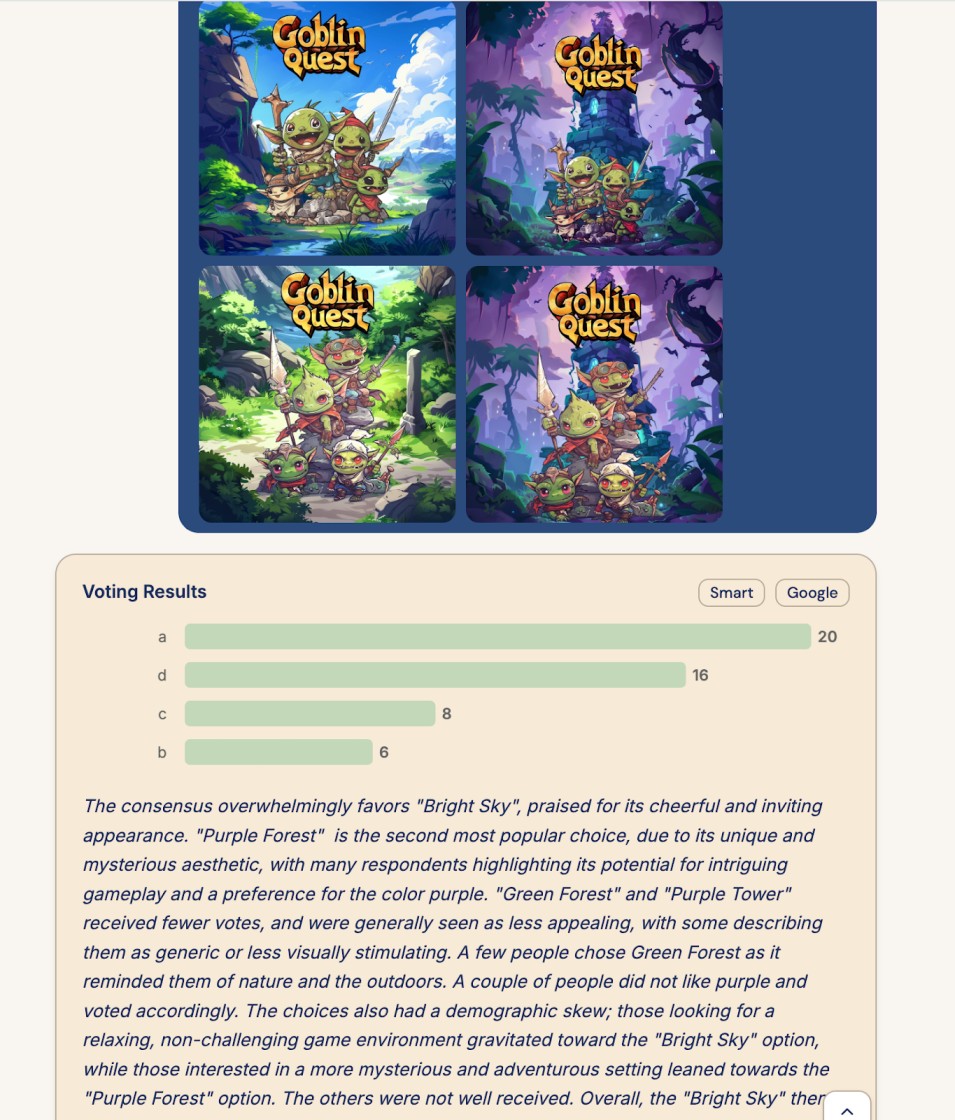

Let’s switch over to a different game, Goblin Quest, where we want to know: “Which theme would you most like to play from the four choices below, and why?” The options are different combinations of the same elements: either the happy, friendly-looking goblin family or the red-eyed goblins, set against either a green/blue background or a purple one. Small stylistic elements like these can make a huge difference to how your ads perform and how many people decide to download the game.

Data Source: James Cramer, Skunkworks

Audience: US Mobile Games

- Core demographics:
  - Geographic focus: United States-based mobile game players
  - Age distribution: primarily middle-aged gamers; the largest segment is 35-44 years old (42%), followed by equal representation from the 25-34 and 45-54 age groups (20% each)
  - Gender split: male-dominated audience (66%) with significant female representation (30%), plus small percentages of non-binary and other gender identities
- Gaming preferences: this audience shows diverse interests across multiple genres:
  - Top categories: Adventure (11.5%), Strategy (11%), and Role-playing (10%)
  - Secondary interests: Word (9%), Card (7%), Trivia (7%), and Simulation (7%)
  - Action & Casual: moderate interest in Action (12%), Racing (5.5%), and Sports (5.5%)
  - Niche segments: smaller but notable groups enjoy Educational (3.5%), Casino (3%), Family (1.5%), and Music (0.5%) games

Simulator: chat

📈 Results

Performance Metrics

Metric: Alignment (baseline 0%, optimized 95%)

| Option | PickFu (Human) | Rally (AI) |
|---|---|---|
| Bright Sky (A) | 42% | 40% |
| Purple Tower (B) | 17% | 12% |
| Green Forest (C) | 19% | 16% |
| Purple Forest (D) | 22% | 32% |

There was a really interesting demographic breakdown in this analysis, with a split between people who liked Bright Sky for its cheery appearance and those who preferred the mystery of Purple Forest. In my opinion, the personas were a little too harsh on Purple Tower (12% vs 17% human) considering how similar it was to Purple Forest, but as we have seen, these systems tend to have too much conviction in what they like.

🔍 Analysis

Rally nailed the winning Bright Sky theme, showing impressive accuracy (40% vs 42% actual). But here's where it gets interesting: Rally seems biased toward Purple Forest (32% predicted vs 22% actual) while discounting Purple Tower by 5 percentage points (12% vs 17%). These two images were perhaps too similar for the personas to identify a meaningful difference between them. The takeaway is to make sure you test bigger differences, particularly with visual assets.
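To make the percentage-point gaps concrete, here is a small Python sketch that compares the two columns of the results table. The page does not say how the 95% alignment score is calculated; this sketch assumes it is 1 minus the mean absolute error (MAE) across the four options, which happens to reproduce 95% for these numbers but may not be Rally's actual formula. The `compare` function and its field names are hypothetical, chosen only for illustration.

```python
# Vote shares (in %) copied from the results table above.
# ASSUMPTION: "alignment" = 1 - mean absolute error across options.
# The source does not define the metric; this is one plausible reading.

human = {
    "Bright Sky (A)": 42,
    "Purple Tower (B)": 17,
    "Green Forest (C)": 19,
    "Purple Forest (D)": 22,
}
rally = {
    "Bright Sky (A)": 40,
    "Purple Tower (B)": 12,
    "Green Forest (C)": 16,
    "Purple Forest (D)": 32,
}


def compare(human: dict[str, int], ai: dict[str, int]) -> dict:
    """Hypothetical helper: per-option gaps (AI minus human, in percentage
    points), whether both picked the same winner, MAE, and assumed alignment."""
    gaps = {option: ai[option] - human[option] for option in human}
    mae = sum(abs(g) for g in gaps.values()) / len(gaps)
    return {
        "gaps": gaps,
        # The winner is the option with the largest share in each column.
        "same_winner": max(human, key=human.get) == max(ai, key=ai.get),
        "mae_pp": mae,
        "alignment": 1 - mae / 100,
    }


result = compare(human, rally)
for option, gap in result["gaps"].items():
    print(f"{option}: {gap:+d} pp")
print("Same winner:", result["same_winner"])
print(f"MAE: {result['mae_pp']:.1f} pp, alignment: {result['alignment']:.0%}")
```

Under this assumption the MAE is 5 percentage points, and Purple Forest's +10 point overestimate accounts for half of the total absolute error of 20 points, which matches the bias toward Purple Forest described above.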

💡 Conclusions

This experiment achieved the highest accuracy of all tests, with Rally predicting the Bright Sky winner within 2 percentage points (40% vs 42%). The AI demonstrated a sophisticated understanding of player preferences, correctly identifying the appeal of cheerful imagery over mysterious alternatives. However, it showed a bias toward Purple Forest (32% vs 22% actual) while being harsh on Purple Tower (12% vs 17% actual), suggesting difficulty distinguishing between similar visual elements. The experiment reinforced that AI testing works best when options are clearly differentiated rather than subtly similar.

🧪 Similar Experiments

Q1: Character Designs for a Conquest MMO (Seaborne)

Character design A/B testing for mobile MMO games: AI vs human preferences comparing realistic...

Q2: Theme Preferences (Seaborne)

Mobile game theme testing: AI predicts player preferences for cartoon vs anime vs adventure...

Q4: Advert Preference (Goblin Quest Images)

Mobile game ad testing reveals AI bias: Solo vs team character advertisements show divergent...

About the Researcher

Mike Taylor

Mike Taylor is the CEO & Co-Founder of Rally. He previously co-founded a 50-person growth marketing agency called Ladder, created marketing & AI courses on LinkedIn, Vexpower, and Udemy taken by over 450,000 people, and published a book with O’Reilly on prompt engineering.