Casevo: a Cognitive Agents and Social Evolution Simulator

Zexun Jiang, Yafang Shi, Maoxu Li, Hongjiang Xiao, Yunxiao Qin, Qinglan Wei, Ye Wang, Yuan Zhang

Published: 2024-12-30

🔥 Key Takeaway:

Letting AI personas influence each other and revise their opinions over several rounds—mimicking real social conversations—makes their collective responses more realistic and predictive than simply collecting one-off, independent answers, even if those initial answers are detailed or well-crafted.

🔮 TLDR

Casevo is an open-source multi-agent simulation framework that uses large language models (LLMs) to model complex social behaviors, featuring customizable agents with roles, memory (RAG), and multi-step reasoning (Chain of Thought). The system supports dynamic social networks that evolve during simulation, parallelized processing for scale, and modular scenario setup, making it suitable for simulating real-world processes like elections, opinion shifts, and public debates. In a demonstration using a simulated U.S. presidential debate with 101 agent-voters, Casevo replicated how opinions shift via information exposure, social interaction, and reflection, with results showing dynamic vote swings and changing support as agents discussed and reconsidered policy issues. Key actionable ideas: use group-specific persona templates grounded in real-world typologies, enable memory and agent-to-agent influence across rounds, and model evolving social network ties to capture shifts in opinion and polarization. The framework allows fast scenario configuration, tracks detailed agent state and memory, and can be extended to market research, news diffusion, or any collective decision-making simulation.

📊 Cool Story, Needs a Graph

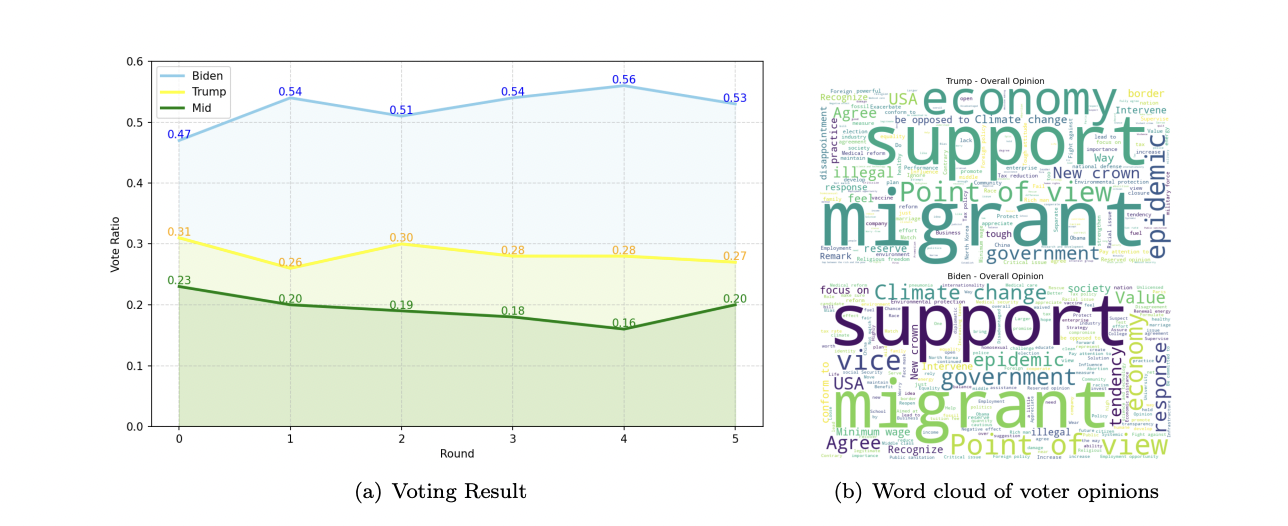

Figure 4: "Simulation Result"

Dynamic voting results and opinion distribution simulated by Casevo.

Figure 4 consists of two side-by-side panels: (a) an overlaid time-series plot showing the proportion of votes for Biden, Trump, and neutral voters across six simulation rounds, and (b) word clouds summarizing the most frequent terms in simulated voter opinions for both candidates. Together, these visuals demonstrate Casevo’s ability to capture the evolving dynamics and polarization of voter preferences during an election debate scenario, offering a clear and immediate comparison to real-world electoral behavior and highlighting the contrast between simulated collective opinion shifts and static baseline assumptions.

⚔️ The Operators Edge

A detail that many experts might overlook in this study is the memory mechanism built on retrieval-augmented generation (RAG), which lets each AI persona access and reflect on its own past decisions and the content of previous social exchanges, not just the immediate prompt. Instead of treating every simulated response as a standalone event, Casevo agents continuously draw on a personal memory of past discussions and experiences, much like a real person would. This historical context is what allows their opinions to evolve authentically over multiple rounds, leading to more realistic social dynamics and emergent group patterns.

Why it matters: Most AI-driven research or testing workflows rely on “stateless” agents that forget everything between questions. But in real social settings, people’s responses are shaped by what they’ve already seen, heard, or debated. By embedding memory, the simulation captures both inertia (resistance to change) and momentum (opinion swings) that only emerge when individuals remember and react to their own and others’ history. This helps the synthetic audience mirror real-world shifts, polarization, and the way messaging “sticks” or fades over time—a key lever for predictive accuracy.

Example of use: In a synthetic product launch test, a brand manager could have AI personas “remember” recent marketing claims, competitor actions, or personal purchase history before responding to new ad concepts. This would reveal not just first impressions, but also how repeated exposures or accumulated frustrations change attitudes—surfacing long-term effects like brand fatigue or trust-building that aren’t visible in single-shot surveys.

Example of misapplication: If a research team skips the memory feature and treats every AI persona response as an isolated event, they might see high initial interest in a new product or campaign but completely miss the backlash, skepticism, or evolving preferences that would appear if consumers were exposed to multiple rounds. This could lead to overestimating launch success or failing to identify slow-building loyalty, because the simulation doesn’t allow past context to shape future reactions.
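To make the memory idea in this section concrete, here is a minimal sketch of a per-agent memory that retrieves relevant past entries before each new question. It is not Casevo's implementation: the `AgentMemory` class, the `build_prompt` helper, and the sample memories are hypothetical, and simple word overlap stands in for the embedding-based similarity a real RAG setup would typically use.

```python
import re
from collections import Counter
from dataclasses import dataclass, field


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def overlap(a: Counter, b: Counter) -> int:
    """Number of shared word occurrences between two bags of words."""
    return sum((a & b).values())


@dataclass
class AgentMemory:
    """Hypothetical per-agent memory store (an assumption, not Casevo's class)."""
    entries: list = field(default_factory=list)  # (round_no, text) pairs

    def remember(self, round_no: int, text: str) -> None:
        self.entries.append((round_no, text))

    def recall(self, query: str, k: int = 2) -> list:
        """Return the k stored texts most similar to the query.

        Ties are broken in favor of the more recent round.
        """
        q = tokenize(query)
        ranked = sorted(
            self.entries,
            key=lambda e: (overlap(q, tokenize(e[1])), e[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


def build_prompt(persona: str, memory: AgentMemory, question: str) -> str:
    """Prepend recalled memories so the model sees the agent's own history."""
    recalled = memory.recall(question)
    history = "\n".join(f"- {m}" for m in recalled) or "- (nothing yet)"
    return f"You are {persona}.\nRelevant memories:\n{history}\nQuestion: {question}"


memory = AgentMemory()
memory.remember(1, "The ad claimed the account has no monthly fees.")
memory.remember(1, "A neighbor said the budgeting app crashed twice.")
memory.remember(2, "I enjoyed the weather at the weekend picnic.")

question = "Do you trust the budgeting app and its fees?"
prompt = build_prompt("a cautious retiree", memory, question)
print(prompt)
```

A stateless agent would receive only the persona and the question. Here the prompt also carries the two most relevant memories, so an earlier complaint about the app can shape the new answer while the unrelated picnic entry is left out.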

🗺️ What are the Implications?

• Build simulated audiences using real-life segmentation frameworks: The study created voter personas based on actual political typologies from Pew Research, rather than generic or random categories. Market researchers can improve study realism by grounding synthetic samples in published segmentation models or customer personas from their industry.

• Let simulated agents interact and evolve their opinions: Instead of collecting one-off responses, this approach had AI personas discuss, reflect, and update their choices over multiple rounds. For brand or concept testing, using sequential or conversational surveys will better capture how opinions might shift in the real world.

• Include memory and history in virtual panels: Agents in the simulation remembered both their own past responses and what others said, which led to more realistic, context-aware feedback. Enabling your synthetic audience to “recall” previous campaign exposures or product experiences can make the simulated insights more predictive.

• Model the structure of real social networks: The simulation connected agents using a “small-world” network, mirroring how influence spreads in actual communities. For market research, consider mapping or simulating social connections (e.g., peer groups, influencer-follower dynamics) to better predict word-of-mouth and trend adoption.

• Track changes over time instead of just snapshots: The most valuable insights came from watching how support and sentiment evolved across several campaign rounds, not just a single survey. Running longitudinal or repeated synthetic studies can reveal shifts in brand favorability, message fatigue, or emerging customer needs.

• Use flexible, modular study frameworks: The Casevo platform made it easy to reconfigure agent roles, memory, and interaction rules for new business questions. Investing in modular research tools lets you adapt quickly to changing market hypotheses or testing needs without starting from scratch.
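The "small-world" structure mentioned in the list above can be sketched with the standard library alone. What follows is a minimal Watts-Strogatz-style generator, offered as an illustration rather than Casevo's own network code. The function name `small_world` is hypothetical, and the values of `k` (ring neighbors per agent) and `p` (rewiring probability) are assumptions, not parameters reported in the study. Only the agent count of 101 comes from the demonstration described earlier.

```python
import random


def small_world(n: int, k: int, p: float, seed: int = 0) -> dict:
    """Watts-Strogatz-style graph: a ring lattice with random rewiring.

    n: number of agents; k: even number of ring neighbors per agent;
    p: probability of rewiring each lattice edge.
    Returns a dict mapping each node to the set of its neighbors.
    """
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    # Step 1: connect each node to its k/2 nearest neighbors on each side.
    for i in range(n):
        for step in range(1, k // 2 + 1):
            j = (i + step) % n
            adj[i].add(j)
            adj[j].add(i)
    # Step 2: with probability p, move the far end of each lattice edge
    # to a randomly chosen node that i is not yet connected to.
    for step in range(1, k // 2 + 1):
        for i in range(n):
            j = (i + step) % n
            if j in adj[i] and rng.random() < p:
                candidates = [c for c in range(n) if c != i and c not in adj[i]]
                if candidates:
                    new_j = rng.choice(candidates)
                    adj[i].discard(j)
                    adj[j].discard(i)
                    adj[i].add(new_j)
                    adj[new_j].add(i)
    return adj


# 101 agents as in the demonstration; k=4 and p=0.1 are illustrative guesses.
network = small_world(n=101, k=4, p=0.1, seed=42)
edge_count = sum(len(nbrs) for nbrs in network.values()) // 2
print(edge_count)  # 202: rewiring moves edges but never adds or removes them
```

Most ties stay local, which keeps clusters of like-minded neighbors intact, while the few rewired edges act as shortcuts that let an opinion jump between otherwise distant groups.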

📄 Prompts

Prompt Explanation: The AI was prompted to simulate voters as agents, each with detailed personas, to process debate information, interact with other agents, reflect using memory, and make voting decisions in a social network simulation of the 2020 US presidential election.

Agents play the role of voters to watch the debate, generate ideas or viewpoints based on their personas, and then conduct simulated voting. In addition, agents can interact and share their thoughts with each other, simulating the exchange of opinions and interactions between voters in reality.

At the beginning of each round, candidates broadcast their policy ideas and campaign concepts to voters through the TV debate. At this stage, all agents receive the corresponding transcripts of the debate simultaneously. Each voter processes this information according to his or her inclinations and personality traits to form an initial impression of the candidate.

Agents interact and exchange opinions with their neighbors (other agents in their social circle) through social networks. Each agent will not only share their opinions about the debate content, but will also receive ideas and feedback from others.

At the end of each round, agents enter a reflective phase. At this point, each agent retrieves and reflects on its short-term memory based on the previous information and the discussion content, and further adjusts its views by combining them with past historical experiences. Through this built-in reflective mechanism, agents not only rely on the current discussion, but also synthesize their experiences and memories over time. This stage helps agents make more solid and rational decisions, especially when they are confronted with complex social issues or policy controversies.

After reflection, agents make their final voting choices based on the results of the reflection. Each agent’s voting decision is directly influenced by the previous phases, including the effectiveness of the candidate’s speech, the flow of opinions in the social network, and their own reflective process. The system records each voter’s voting choice, providing data for status updates in subsequent rounds and for the final results tally.
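The four phases described above (broadcast, social interaction, reflection, voting) can be sketched as a single round function. This is a structural illustration under stated assumptions, not Casevo's API: `Voter`, `run_round`, and `stub_llm` are hypothetical names, the candidate labels "A" and "B" are placeholders, and the stub replaces a real model call with a fixed keyword rule so the example runs deterministically.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Dict, List

LLM = Callable[[str], str]
CHOICES = {"A", "B", "neutral"}  # placeholder candidate labels


@dataclass
class Voter:
    name: str
    persona: str
    neighbors: List[str] = field(default_factory=list)
    memory: List[str] = field(default_factory=list)
    vote: str = "neutral"


def run_round(voters: Dict[str, Voter], transcript: str, llm: LLM) -> Counter:
    # Phase 1: broadcast. Every voter receives the same debate transcript.
    opinions = {}
    for v in voters.values():
        opinions[v.name] = llm(f"{v.persona}\nDebate: {transcript}\nGive your view.")
        v.memory.append(f"my view: {opinions[v.name]}")
    # Phase 2: social interaction. Each voter hears its neighbors' opinions.
    for v in voters.values():
        for n in v.neighbors:
            v.memory.append(f"{n} said: {opinions[n]}")
    # Phase 3: reflection over everything remembered so far.
    for v in voters.values():
        notes = "\n".join(v.memory)
        v.memory.append("reflection: " + llm(f"{v.persona}\nNotes:\n{notes}\nReflect."))
    # Phase 4: vote based on the latest reflection; record the tally.
    for v in voters.values():
        answer = llm(f"{v.persona}\n{v.memory[-1]}\nVote: A, B, or neutral?")
        v.vote = answer if answer in CHOICES else "neutral"
    return Counter(v.vote for v in voters.values())


def stub_llm(prompt: str) -> str:
    """Deterministic placeholder for a real model call (an assumption)."""
    if "Vote:" in prompt:
        return "A" if "healthcare" in prompt else "neutral"
    if "nurse" in prompt or "healthcare" in prompt:
        return "I care about healthcare."
    return "I am undecided."


voters = {
    "ann": Voter("ann", "You are a nurse.", neighbors=["bob"]),
    "bob": Voter("bob", "You are a farmer.", neighbors=["ann"]),
    "cat": Voter("cat", "You are a retiree."),
}
tally = run_round(voters, "The candidates debated taxes.", stub_llm)
print(tally)
```

In this toy run, `bob` starts undecided but adopts his neighbor's concern during reflection, while the isolated `cat` stays neutral. Calling `run_round` repeatedly with new transcripts would accumulate memory across rounds, which is where the multi-round dynamics come from.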

⏰ When is this relevant?

A financial services company wants to test how different customer types would respond to a new fee-free checking account with digital budgeting tools. They want to simulate a series of qualitative interviews with three realistic audience segments: young professionals new to banking, middle-aged families focused on saving, and retirees who value simplicity. The goal is to understand what features and messages drive interest or hesitation across these groups.

🔢 Follow the Instructions:

1. Define audience segments: Create three AI persona profiles:

• Young professional: Age 25, urban, tech-savvy, just started first full-time job, values flexibility and digital tools.

• Middle-aged family: Age 44, suburban, two kids, looks for ways to save money, cares about security and family benefits.

• Retiree: Age 68, rural, on fixed income, prefers simple options and in-person support, cautious about new technology.

2. Prepare the prompt template for interviews: Use this template for each persona:

You are a [persona description].

Here is a new checking account offer from our bank: “No monthly fees, access to a digital budgeting app, and personalized spending insights. All features available through our mobile and web app. Open to all new customers.”

You are being interviewed for feedback. Please answer as yourself, naturally and honestly, in 3–5 sentences.

First question: What is your first impression of this offer?

3. Generate initial responses: For each persona, run the prompt above through an AI model (like GPT-4) multiple times (at least 5–10 per persona) to simulate diverse customer reactions.

4. Ask follow-up questions: Based on the initial responses, use realistic follow-ups such as:

• “Would you consider switching to this account? Why or why not?”

• “What would make this offer more appealing to you?”

• “Do you have any concerns or questions about using digital budgeting tools?”

Run these for each persona as a second round and capture the responses.

5. Thematic tagging and comparison: Review and tag responses with themes like “interest in digital tools,” “concerns about security,” “fees and savings,” “preference for simplicity,” or “hesitance about technology.”

6. Summarize results by segment: Compile a short summary for each audience group, highlighting which features or messages drove positive reactions, which concerns were most common, and any clear differences between the groups.
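The six steps above can be sketched end to end as follows. This is a minimal illustration, not a finished research tool: `ask_model` is a hypothetical callable you would replace with your own LLM client, `fake_model` is a stand-in so the script runs offline, and the keyword sets used for tagging are assumptions. Keyword matching is a crude proxy for the manual or model-assisted thematic review described in step 5.

```python
import re
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Tuple

# Step 1: persona descriptions condensed from the segment definitions above.
PERSONAS: Dict[str, str] = {
    "young_professional": "25-year-old urban, tech-savvy professional in a first "
                          "full-time job who values flexibility and digital tools",
    "family": "44-year-old suburban parent of two who looks for ways to save "
              "money and cares about security and family benefits",
    "retiree": "68-year-old rural retiree on a fixed income who prefers simple "
               "options and in-person support and is cautious about new technology",
}
OFFER = ("No monthly fees, access to a digital budgeting app, and personalized "
         "spending insights. All features available through our mobile and web "
         "app. Open to all new customers.")
# Steps 2 and 4: the first question followed by the follow-ups.
QUESTIONS = [
    "What is your first impression of this offer?",
    "Would you consider switching to this account? Why or why not?",
    "What would make this offer more appealing to you?",
    "Do you have any concerns or questions about using digital budgeting tools?",
]
# Step 5: illustrative keyword sets per theme (assumed, not from the study).
THEMES = {
    "interest in digital tools": {"app", "apps", "digital", "budgeting"},
    "concerns about security": {"security", "secure", "safe"},
    "fees and savings": {"fee", "fees", "save", "saving", "savings"},
    "preference for simplicity": {"simple", "in-person", "branch"},
    "hesitance about technology": {"confusing", "technology", "uncomfortable"},
}


def build_prompt(persona: str, question: str, history: List[Tuple[str, str]]) -> str:
    """Fill the interview template, including earlier questions and answers."""
    past = "".join(f"Q: {q}\nA: {a}\n" for q, a in history)
    return (
        f"You are a {persona}.\n\n"
        f'Here is a new checking account offer from our bank: "{OFFER}"\n\n'
        "You are being interviewed for feedback. Please answer as yourself, "
        "naturally and honestly, in 3-5 sentences.\n\n"
        f"{past}Q: {question}"
    )


def tag_themes(response: str) -> List[str]:
    """Tag a response with every theme whose keywords appear as whole words."""
    words = set(re.findall(r"[a-z\-]+", response.lower()))
    return [theme for theme, keys in THEMES.items() if words & keys]


def run_study(ask_model: Callable[[str], str], runs: int = 5) -> Dict[str, Counter]:
    """Steps 3 to 6: interview each persona several times and count themes."""
    summary: Dict[str, Counter] = defaultdict(Counter)
    for segment, persona in PERSONAS.items():
        for _ in range(runs):  # each run is one simulated respondent
            history: List[Tuple[str, str]] = []
            for question in QUESTIONS:
                answer = ask_model(build_prompt(persona, question, history))
                history.append((question, answer))
                summary[segment].update(tag_themes(answer))
    return summary


def fake_model(prompt: str) -> str:
    """Offline placeholder for a real LLM call (hypothetical)."""
    if "retiree" in prompt:
        return "I would rather visit a branch; new technology feels confusing."
    return "No fees is great, and I like the budgeting app."


summary = run_study(fake_model)
for segment, counts in summary.items():
    print(segment, counts.most_common(2))
```

Carrying `history` into each follow-up prompt means a simulated respondent answers later questions in light of what it already said, rather than treating each question as a fresh start.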

🤔 What should I expect?

You’ll get a practical, segment-by-segment view of what aspects of the new banking product resonate or create hesitation for different customer types. This helps prioritize which features to emphasize in marketing, where to address concerns in communications, and which segments may need tailored onboarding or support if the product launches more broadly.