Nadine: An LLM-Driven Intelligent Social Robot with Affective Capabilities and Human-Like Memory

Hangyeol Kang, Maher Ben Moussa, Nadia Magnenat-Thalmann

Published: 2024-05-30

🔥 Key Takeaway:

The more “human-like” you make your AI personas by incorporating memory, emotions, and personality traits, the more nuanced and contextually rich their responses become. The paper's ablations show more natural, empathetic, and continuous interactions, rather than responses that merely echo your expectations. The personas will not necessarily surface the unexpected objections, hesitations, or emotional resistance that real customers would raise. Still, the added complexity does deepen their responses and make them more empathetic.

🔮 TLDR

This paper introduces Nadine, a social robot system powered by a Large Language Model (LLM) and designed to simulate human-like interactions. It integrates three main modules:

- Perception, which uses sensors to track users, recognize faces and emotions, and process speech.
- An LLM-based interaction module (SoR-ReAct).
- Robot control, which generates speech, gestures, and facial expressions.

The system uses retrieval-augmented generation (RAG) to draw on relevant knowledge and on each user's episodic memories, enabling context-aware, personalized responses. It also includes an affective system that models emotions, moods, and personality in a three-dimensional Pleasure-Arousal-Dominance (PAD) space. This lets the robot adjust its behavior based on both short-term emotional context and long-term personality traits. Ablation studies indicate that tool use (internet, news, weather, Wikipedia), affective simulation, and long-term memory each improve the robot's naturalness, empathy, and continuity in interaction.

Key actionable insights include:

(1) Using RAG for both static knowledge and dynamic, user-specific memory, with adaptive chunking for episodic data.
(2) Modeling affective states with a dimensional approach and updating them based on user interactions.
(3) Structuring prompts to interleave memory, perception, tool outputs, and emotion.
(4) Using ablation to measure the impact of each module.

The authors report that the approach produces more engaging, contextually relevant, and emotionally intelligent AI personas. The technical components could potentially carry over to virtual audience or market research simulations, but further studies would be needed to confirm this.
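The affective layer described above can be pictured as three points in the same Pleasure-Arousal-Dominance space:

- A fast-moving emotion.
- A slower mood.
- A fixed personality baseline.

The sketch below is an illustrative assumption, not the paper's update rule. Each new emotion pulls the mood toward itself by a small rate `alpha`. The mood then relaxes back toward the personality baseline at rate `decay`. The names `PAD`, `update_mood`, `alpha`, and `decay` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PAD:
    """A point in Pleasure-Arousal-Dominance space; axes are conventionally in [-1, 1]."""
    pleasure: float
    arousal: float
    dominance: float

    def clamp(self) -> "PAD":
        """Force every axis back into [-1, 1]."""
        def c(v: float) -> float:
            return max(-1.0, min(1.0, v))
        return PAD(c(self.pleasure), c(self.arousal), c(self.dominance))


def lerp(a: PAD, b: PAD, t: float) -> PAD:
    """Move fraction t of the way from a toward b on every axis."""
    return PAD(
        a.pleasure + t * (b.pleasure - a.pleasure),
        a.arousal + t * (b.arousal - a.arousal),
        a.dominance + t * (b.dominance - a.dominance),
    )


def update_mood(mood: PAD, emotion: PAD, personality: PAD,
                alpha: float = 0.3, decay: float = 0.1) -> PAD:
    """Hypothetical rule: the short-term emotion nudges mood, then personality pulls it back."""
    nudged = lerp(mood, emotion, alpha)          # react to the current interaction
    relaxed = lerp(nudged, personality, decay)   # drift toward the long-term baseline
    return relaxed.clamp()
```

Repeated positive emotions keep lifting mood above the personality point. If you pass the current mood as the emotion (no new stimulus), mood drifts back toward the baseline. That mirrors the short-term versus long-term split described in the paper, under this assumed rule.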

📊 Cool Story, Needs a Graph

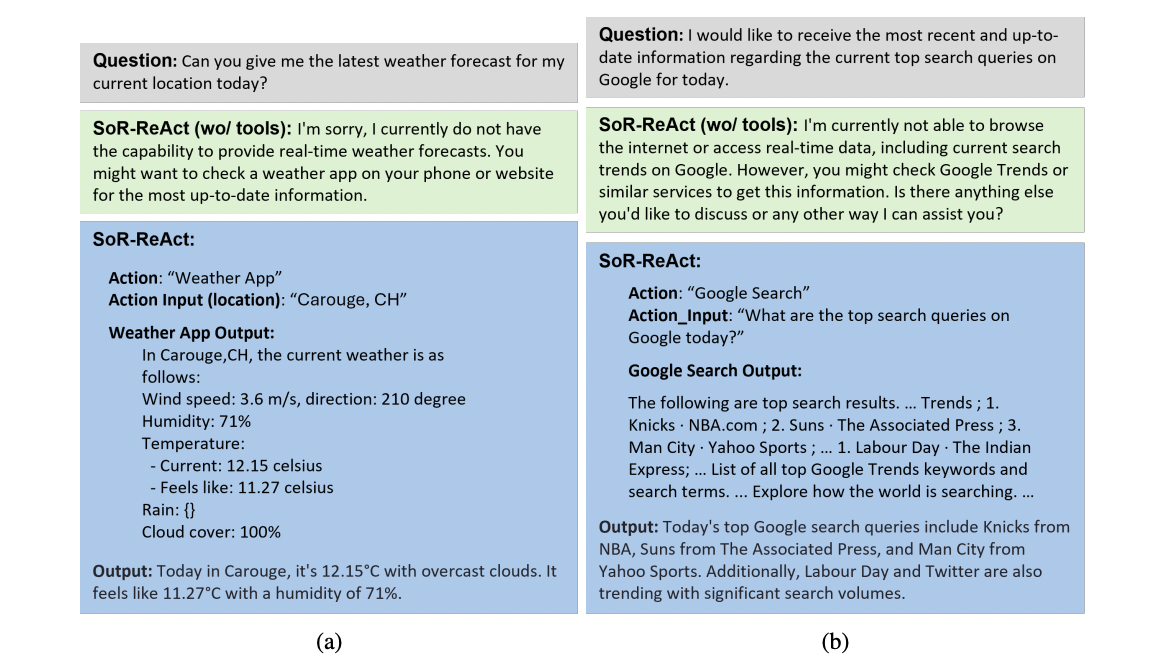

Figure 8: "Ablation of the tool use capability of SoR-ReAct (a): Weather app, (b): Google search"

Comparison of SoR-ReAct responses with and without tool-use capability for real-time queries.

Figure 8 compares the SoR-ReAct agent's answers to two user queries, one about the weather and one about trending Google searches. Each is answered with and without access to external tool APIs. In each panel, the upper response shows the baseline agent limited to model-internal knowledge, which cannot provide up-to-date or location-specific information. The lower response shows the enhanced agent retrieving and integrating current data from external sources. The side-by-side layout isolates the practical value that tool use adds over the baseline.
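The with/without contrast in Figure 8 comes down to whether the agent's chosen action can reach a tool. The paper's response schema is elided in the prompt. This minimal sketch assumes the model returns a JSON blob with hypothetical `action` and `action_input` fields, and it uses stub functions in place of real weather or search APIs.

```python
import json
from typing import Callable, Dict

# Stub tools standing in for real APIs (weather, Google search); both are hypothetical.
TOOLS: Dict[str, Callable[[str], str]] = {
    "weather": lambda city: f"stub forecast for {city}",
    "search": lambda query: f"stub search results for {query!r}",
}


def dispatch(model_output: str, tools_enabled: bool = True) -> str:
    """Route a parsed action to a tool, or treat it as a direct answer."""
    blob = json.loads(model_output)
    action = blob.get("action")
    arg = blob.get("action_input", "")
    if action in TOOLS:
        if not tools_enabled:
            # Ablated baseline: no external data, only model-internal knowledge.
            return "I don't have access to live information right now."
        return TOOLS[action](arg)
    # Any other action is treated as a direct reply to the human.
    return str(arg)
```

`dispatch('{"action": "weather", "action_input": "Paris"}')` returns `"stub forecast for Paris"`. Passing `tools_enabled=False` reproduces the baseline branch of the comparison.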

⚔️ The Operators Edge

A detail that most experts might overlook is how the system handles long-term memory retrieval for each AI persona: instead of breaking up past interactions or knowledge into evenly sized, fixed chunks (as is common in many RAG setups), the Nadine system uses an adaptive, sliding window splitter that groups conversations into contextually meaningful segments based on the natural flow of dialogue (see page 7). This approach preserves the semantic integrity of each memory, letting the AI recall relevant details as a human would—based on context, not arbitrary length.

Why it matters: How memory is structured and segmented directly affects how much nuance, continuity, and relevance the AI can bring into new interactions. If memory is chunked poorly, the AI either loses important context or retrieves irrelevant fragments, making responses shallow or incoherent. Adaptive grouping means that when a persona "remembers" something, it draws on real conversational units. Simulated personas can then reference specific past details, build rapport, and show continuity across sessions, all of which make synthetic research more credible and lifelike.

Example of use: In an AI-driven product testing scenario, a virtual “returning customer” persona can recall not just that they complained last month, but the exact issue and how it was handled, referencing it in a way that feels authentic: “Last time, I mentioned problems with the checkout process—it looks like that’s been improved now.” This lets researchers see how loyalty, concerns, or satisfaction evolve over time, just like with real longitudinal user studies.

Example of misapplication: If a research team used a naive, fixed-size chunking method for their AI audience’s memory (e.g., every 500 characters or every 2 messages), the AI might “recall” only fragmented or incomplete statements (“Your last order was… [cut off]”) or miss the connection between a user’s complaint and its resolution. This leads to robotic, disconnected responses, undermining both user realism and the ability to simulate long-term customer journeys or brand relationships.
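The contrast between fixed-size chunking and the adaptive, sliding-window splitter can be sketched in a few lines. This is an assumption-laden illustration, not the paper's splitter. It uses word-overlap (Jaccard) similarity as a cheap stand-in for embedding similarity. A new memory segment starts when a turn stops overlapping the current one, and retrieval returns the best-matching segment for a query. The function names and the `threshold` value are hypothetical.

```python
import re
from typing import List, Set


def words(text: str) -> Set[str]:
    """Lower-cased word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two word sets, from 0 (disjoint) to 1 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def fixed_chunks(turns: List[str], size: int = 2) -> List[List[str]]:
    """Naive baseline: cut every `size` turns, regardless of topic."""
    return [turns[i:i + size] for i in range(0, len(turns), size)]


def adaptive_chunks(turns: List[str], threshold: float = 0.1) -> List[List[str]]:
    """Extend the current segment while a new turn still overlaps it; otherwise start a new one."""
    segments: List[List[str]] = []
    for turn in turns:
        if segments and jaccard(words(turn), words(" ".join(segments[-1]))) >= threshold:
            segments[-1].append(turn)
        else:
            segments.append([turn])
    return segments


def retrieve(segments: List[List[str]], query: str) -> List[str]:
    """Return the stored segment whose vocabulary best overlaps the query."""
    q = words(query)
    return max(segments, key=lambda seg: jaccard(q, words(" ".join(seg))))


# Toy dialogue, invented for illustration.
turns = [
    "The checkout page kept failing when I paid by card.",
    "Sorry about that, the card checkout failing was a bug we fixed.",
    "Good, I paid by card again and checkout worked.",
    "By the way, do you sell hiking boots?",
    "Yes, we sell hiking boots in three colours.",
]
memory = adaptive_chunks(turns)
```

On this toy dialogue, `adaptive_chunks` keeps three turns together in one segment: the checkout complaint, its fix, and the confirmation. The boots question goes into a second segment. A query such as "Did my card checkout problem get fixed?" retrieves the whole complaint-and-resolution segment. By contrast, `fixed_chunks` with `size=2` pairs the confirmation with the unrelated boots question, which is the fragmentation described in the misapplication example.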

🗺️ What are the Implications?

• Incorporate memory and past context into simulated personas: Experiments show that virtual agents are more realistic and consistent when they can draw on prior interactions or user-specific "memories." Without them, the agent treats every question as a blank slate.

• Add emotional intelligence to your simulations: Simulated personas that can recognize, track, and respond with emotions (not just facts) produce responses that feel more authentic and human-like, which is especially valuable for studies on brand perception, customer experience, or concept testing.

• Leverage external, real-time data sources when relevant: Integrating tools that fetch up-to-date information (e.g., news, weather) allows simulated studies to react to current events or conditions, enhancing the timeliness of the outputs. However, the direct impact of these tools on the credibility of business decisions or market research has not been empirically validated.

• Design prompts and study flows to mirror real human interactions: Results improve when virtual audiences are queried using prompts that include relevant context (recent conversation, current user emotion, situational details), rather than isolated or generic questions.

• Test the impact of each “module” or feature in your simulation: Simple “ablation” studies—comparing outputs with and without memory, emotion, or tool-use—can identify which elements actually improve realism and insight, helping focus investment on what matters.

• Don’t overcomplicate; start with the basics and layer on sophistication: Adding even one or two features such as memory recall or emotion tracking can make a measurable difference. You don’t need a perfect simulation to see value, and each incremental improvement can boost confidence in the findings.
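The leave-one-out ablation suggested above can be organized as a small harness. This sketch assumes each simulation run can be scored on some rating scale, for example human or LLM-judge ratings of naturalness. `ablation_configs`, `module_effects`, and the module names are hypothetical.

```python
from statistics import mean
from typing import Dict, FrozenSet, List


def ablation_configs(modules: List[str]) -> Dict[str, FrozenSet[str]]:
    """Full configuration plus one leave-one-out configuration per module."""
    configs = {"full": frozenset(modules)}
    for m in modules:
        configs[f"without_{m}"] = frozenset(x for x in modules if x != m)
    return configs


def module_effects(scores: Dict[str, List[float]], modules: List[str]) -> Dict[str, float]:
    """Mean score drop when each module is removed (positive means the module helps)."""
    full = mean(scores["full"])
    return {m: full - mean(scores[f"without_{m}"]) for m in modules}
```

Run your simulation once per entry in `ablation_configs(["memory", "emotion", "tools"])`. Collect ratings under each key, then pass them to `module_effects`. A module whose removal barely changes the mean is a candidate to drop.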

📄 Prompts

Prompt Explanation: The core persona prompt for Nadine, the social robot, instructs the AI to act as a humanoid social robot using multimodal context, memory, perception, tools, and affective reasoning in its interactions.

Your name is Nadine and you are a humanoid social robot

Your current situation at the physical world at this moment can be described as follows:

- You are currently sitting at a room at a building...

[Description of the physical world of the robot]

[Last messages from the current interaction with the user]

When you are interacting with humans, always make sure to act by the following rules:

- ALWAYS answer as yourself, never as someone else or as a representative as Nadine

[Instructions how to behave as Nadine]

TOOLS

You can use the tools to look up information that may be helpful in answering the human original question. The tools can be used:

[List of tools and how to use them]

KNOWLEDGE BASE

Use the RAG retrieval tool to retrieve knowledge or long-term memory information from the human question then use them to answer the question.

[Memories relevant to the user question]

RESPONSE FORMAT INSTRUCTIONS

When prompting, please output a response in one of two formats:

*Option 1:* Use this if you want to use a tool. Markdown code snippet formatted in the following schema:

JSON {...} //reason for using tools

*Option 2:* Use this if you want to respond directly to the human. Markdown code snippet formatted in the following schema:

JSON {...} //reason and answer

CONTEXTUAL INFORMATION

- You are currently speaking with: [User]

- Live data from the system and the perception components

HUMAN'S INPUT

Here is the human's input (remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else. Remember also that your answer to the human should comply to the rules described below under the section 'RULES' and please never repeat yourself):

[User utterances received from the speech recognition]

⏰ When is this relevant?

A consumer banking provider wants to test customer reactions to a new digital account feature that automatically categorizes spending and gives monthly financial health tips. The goal is to simulate qualitative feedback from three segments: digitally savvy young professionals, cost-conscious retirees, and busy middle-aged parents. The team wants to uncover which benefits or concerns matter most for each group, and how messaging could be tailored.

🔢 Follow the Instructions:

1. Define audience segments: Write a short persona profile for each target group. Example:

• Digitally savvy young professional: 29, urban, uses mobile banking daily, values convenience, confident with tech.

• Cost-conscious retiree: 67, suburban, limited tech use, tracks spending carefully, wants security and clarity.

• Busy middle-aged parent: 42, two children, juggles work and family, values tools that save time, moderate tech comfort.

2. Prepare prompt template for persona simulation: Use the following for each run:

You are simulating a [persona description].

Here is the new bank feature: "Our digital account now automatically categorizes your spending (like groceries, dining, bills), and at the end of each month, sends you a simple financial health summary plus personalized tips to improve your money habits."

You are being interviewed by a market researcher. Respond as this persona in 3–5 sentences, sharing your honest first impression, what you like or dislike, and whether you see yourself using this feature.

The researcher may ask follow-up questions based on your answer. Respond only as the persona.

First question: What is your first reaction to this new account feature?

3. Generate initial responses: For each persona, run the prompt through the AI model to get 8–12 simulated responses. For variety, consider rephrasing the question, e.g., "Does this sound useful to you?", "What might you worry about?", or "How would this help or hinder your budgeting?"

4. Add follow-up prompts: Based on the initial answers, ask 1–2 relevant follow-up questions for each persona. For example, "What would make you trust these tips?" or "Would you share these insights with your spouse or children?" Capture these as a short dialogue.

5. Tag and cluster feedback: Review all responses and assign simple tags such as "privacy concern," "time-saving," "skeptical," "helpful for budgeting," "confusing," or "motivates action."

6. Compare by audience type: Summarize what each segment cares about (e.g., young professionals mention app integration, retirees mention security, parents mention simplicity). Look for any unique objections or positive reactions.
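Steps 1, 2, 5, and 6 are mechanical enough to script. The sketch below fills the step-2 template for each persona and tallies keyword-based tags per segment. The model call itself (steps 3 and 4) is left out because it depends on your provider. The tag keywords, the `TAG_RULES` dictionary, and the function names are illustrative assumptions. Keyword matching is only a crude stand-in for manual or LLM-assisted tagging.

```python
from collections import Counter
from typing import Dict, List

FEATURE = ("Our digital account now automatically categorizes your spending "
           "(like groceries, dining, bills), and at the end of each month, sends you "
           "a simple financial health summary plus personalized tips to improve your money habits.")

# Step 1: persona profiles from the example above.
PERSONAS = {
    "young_professional": "digitally savvy young professional (29, urban, uses mobile banking "
                          "daily, values convenience, confident with tech)",
    "retiree": "cost-conscious retiree (67, suburban, limited tech use, tracks spending "
               "carefully, wants security and clarity)",
    "parent": "busy middle-aged parent (42, two children, juggles work and family, "
              "values tools that save time, moderate tech comfort)",
}

# Step 2: the persona-simulation prompt template.
TEMPLATE = (
    "You are simulating a {persona}.\n"
    'Here is the new bank feature: "{feature}"\n'
    "You are being interviewed by a market researcher. Respond as this persona in 3–5 sentences, "
    "sharing your honest first impression, what you like or dislike, and whether you see "
    "yourself using this feature.\n"
    "The researcher may ask follow-up questions based on your answer. Respond only as the persona.\n"
    "First question: {question}"
)

# Step 5: hypothetical keyword rules; refine them after reading real responses.
TAG_RULES = {
    "privacy concern": ["privacy", "data", "share my"],
    "time-saving": ["save time", "saves time", "quick"],
    "skeptical": ["not sure", "doubt", "skeptical"],
    "helpful for budgeting": ["budget", "track", "spending"],
}


def render(persona_key: str, question: str) -> str:
    """Fill the template for one persona and one interview question."""
    return TEMPLATE.format(persona=PERSONAS[persona_key], feature=FEATURE, question=question)


def tag(response: str) -> List[str]:
    """Return every tag whose keywords appear in the response (case-insensitive)."""
    text = response.lower()
    return [t for t, keywords in TAG_RULES.items() if any(k in text for k in keywords)]


def tags_by_segment(responses: Dict[str, List[str]]) -> Dict[str, Counter]:
    """Step 6: count how often each tag appears within each segment."""
    return {seg: Counter(t for r in rs for t in tag(r)) for seg, rs in responses.items()}
```

Comparing the resulting `Counter` objects side by side gives a quick first view of which concerns dominate each segment. Read the underlying responses before drawing conclusions.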

🤔 What should I expect?

You’ll get a clear, directional understanding of how each customer group is likely to perceive and react to the new feature, what benefits to emphasize for each, and where to focus further messaging or product refinement before rollout or live testing.