Evaluating Counter-Argument Strategies for Logical Fallacies: An Agent-Based Analysis of Persuasiveness and Polarization

Zhicheng Lin

Published: 2025-01-01

🔥 Key Takeaway:

The most effective rebuttals aren’t the ones that hammer hardest or try to “win.” Moderate, fact-focused replies quietly work best, because the harder you push to convince someone directly, the more likely you are to drive them further away.

🔮 TLDR

This study used LLM-based agent simulations modeled on real Community Notes evaluators to test which counter-argument strategies work best against 13 common logical fallacies found in misinformation. The agents were divided into two groups, those aligned with the misinformation and independent evaluators, and each group assessed 10 types of rebuttals. Two strategies, “No Evidence” (pointing out a lack of evidence) and “Another True Cause” (offering an alternative explanation), were broadly effective across fallacies and kept polarization low, whereas more direct refutations could increase resistance and polarization among misinformation supporters. The research identifies a “Persuasiveness–Polarization Dilemma”: strategies that are strongly persuasive can also polarize audiences, while moderate, objective approaches reduce this risk without sacrificing much effectiveness. Actionable guidance: in simulated or real audience interventions, prioritize rebuttals built on objective evidence and alternative explanations over direct negation or emotional appeals, and benchmark polarization risk separately for each stance to find where even logical strategies may backfire.

📊 Cool Story, Needs a Graph

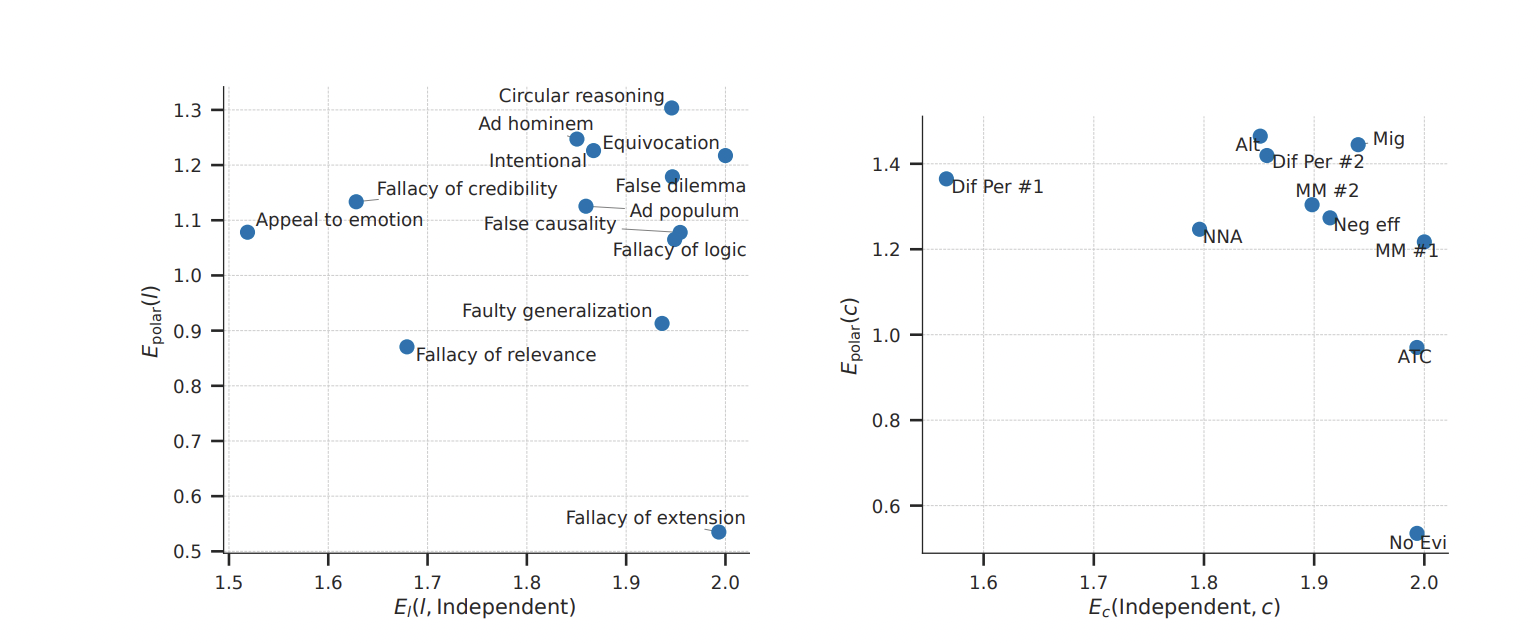

Figure 2

Persuasiveness versus polarization for all fallacies and counter-argument strategies.

Figure 2 contrasts persuasiveness (as rated by independent evaluators) with polarization risk in two scatter plots: panel (a) plots every logical fallacy and panel (b) every counter-argument strategy in the study. Points that combine high persuasiveness with low polarization mark the safest options, while the rest expose trade-offs, so the figure doubles as a quick guide for choosing rebuttal tactics.
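To make the two axes concrete, here is a minimal Python sketch of how one point per strategy could be computed from helpfulness ratings. The metric definitions are assumptions for illustration, not the paper's formulas: persuasiveness is taken as the mean 0–2 rating from independent evaluators, and polarization as the gap between the independent and aligned mean ratings. The strategy name "Direct Refutation" and all ratings are hypothetical placeholders, not study data.

```python
from statistics import mean

# Hypothetical ratings on the 0-2 helpfulness scale, keyed by (strategy, stance).
# Placeholder values for illustration only; not results from the study.
RATINGS = {
    ("No Evidence", "independent"): [2, 2, 1, 2],
    ("No Evidence", "aligned"): [1, 1, 2, 1],
    ("Direct Refutation", "independent"): [2, 2, 2, 2],
    ("Direct Refutation", "aligned"): [0, 0, 1, 0],
}


def strategy_point(ratings, strategy):
    """Return (persuasiveness, polarization) for one strategy.

    Assumed definitions (illustrative only):
      persuasiveness = mean rating from independent evaluators
      polarization   = independent mean minus aligned mean
    """
    independent = mean(ratings[(strategy, "independent")])
    aligned = mean(ratings[(strategy, "aligned")])
    return independent, independent - aligned


for name in sorted({s for s, _ in RATINGS}):
    p, g = strategy_point(RATINGS, name)
    print(f"{name}: persuasiveness={p:.2f}, polarization={g:.2f}")
```

With these placeholder numbers, "Direct Refutation" scores slightly higher with independent evaluators but opens a much wider gap with aligned ones, which is the dilemma in miniature.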

⚔️ The Operator’s Edge

A crucial detail that most experts might miss is that the simulated evaluator agents in this study were not generic or random personas. They were constructed by clustering real Community Notes evaluators on their actual rating histories and then selecting representative individuals from each cluster. Those historical ratings were used to condition the LLM agents, so that the simulated audience would capture real diversity and real-world cognitive biases rather than merely theoretical differences.

Why it matters: The synthetic audience does not merely reflect a spread of demographics or opinions on paper; it mirrors the latent rating tendencies and resistance patterns of real evaluators. That makes the simulation of how “aligned” and “independent” audiences respond more realistic, and the findings on polarization and persuasion more credible as a basis for real-world interventions.

Example of use: Suppose a brand is piloting a controversial new ad campaign and wants to simulate public reaction. By clustering and grounding AI personas in the comment and rating histories of actual customers or reviewers (e.g., from past campaigns or brand mentions), the team can generate synthetic focus group responses that not only sound realistic but also reflect the true behavioral and attitudinal splits in its market, surfacing nuanced risks or opportunities that a simple demographic panel would miss.

Example of misapplication: If the brand instead builds its AI audience by sampling “personas” from generic lists (like “young urban mom” or “tech-savvy millennial”) or just randomizes traits and opinions, the resulting simulation may produce plausible-sounding but ultimately shallow or biased feedback. This could lead to overconfidence in a campaign’s acceptance or a failure to anticipate backlash, because the AI audience never truly reflects the complex, real-world patterns of skepticism, resistance, or engagement that drive actual business outcomes.
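The paper's exact clustering features and algorithm are not described here, so the following is a minimal sketch of the cluster-then-pick-representatives idea under stated assumptions: each evaluator's history is summarized as the share of ratings at each helpfulness level, evaluators are grouped with plain k-means (Lloyd's algorithm with deterministic farthest-first seeding), and the member closest to each centroid becomes that cluster's representative. The evaluator IDs, histories, and helper names (`features`, `kmeans`, `representatives`) are hypothetical.

```python
# Hypothetical rating histories: evaluator ID -> helpfulness ratings (0, 1, or 2).
HISTORIES = {
    "u1": [2, 2, 2, 1], "u2": [2, 2, 1, 2], "u3": [0, 0, 1, 0],
    "u4": [0, 1, 0, 0], "u5": [1, 1, 1, 2], "u6": [1, 1, 1, 0],
}


def features(ratings):
    """Share of ratings at each level: [Not helpful, Somewhat helpful, Helpful]."""
    return [ratings.count(level) / len(ratings) for level in (0, 1, 2)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first(points, k):
    """Deterministic seeding: start from the first ID, then repeatedly add the farthest point."""
    ids = sorted(points)
    chosen = [ids[0]]
    while len(chosen) < k:
        rest = [i for i in ids if i not in chosen]
        chosen.append(max(rest, key=lambda i: min(sq_dist(points[i], points[c]) for c in chosen)))
    return [points[c] for c in chosen]


def kmeans(points, k, iters=20):
    """Lloyd's k-means over a dict of ID -> feature vector; returns (clusters, centroids)."""
    centroids = farthest_first(points, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for i in sorted(points):
            nearest = min(range(k), key=lambda c: sq_dist(points[i], centroids[c]))
            clusters[nearest].append(i)
        # Recompute each centroid as the mean of its members (keep the old one if empty).
        updated = [
            [sum(col) / len(members) for col in zip(*(points[m] for m in members))]
            if members else centroids[c]
            for c, members in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return clusters, centroids


def representatives(points, k):
    """Pick the member closest to each centroid as that cluster's representative."""
    clusters, centroids = kmeans(points, k)
    return [
        min(members, key=lambda m: sq_dist(points[m], centroids[c]))
        for c, members in enumerate(clusters)
        if members
    ]


POINTS = {uid: features(r) for uid, r in HISTORIES.items()}
print(representatives(POINTS, 3))  # ['u1', 'u3', 'u5']
```

Each selected representative's history would then be placed in that agent's prompt; richer features (for example, ratings on shared notes) would slot into `features` without changing the rest.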

🗺️ What are the Implications?

• Test multiple rebuttal styles, not just one: The study shows that different counter-argument strategies have varying effectiveness and risk of causing audience polarization; by trying out a range of approaches (such as fact-based, alternative explanation, or mitigation), researchers can identify which is most persuasive for their target segment.

• Prioritize objective and evidence-focused prompts: Rebuttals that highlight missing evidence or present alternative explanations (“No Evidence” and “Another True Cause”) were more likely to persuade a broad audience and less likely to trigger defensive reactions or backlash.

• Check for polarization in your simulated results: Some persuasive arguments can actually increase division within your audience; always measure and compare how different audience segments (e.g., fans vs. skeptics) respond, and flag tactics that widen the gap.

• Design prompts to minimize defensiveness: The most effective and least polarizing approaches avoided directly attacking the audience’s beliefs or identity. Frame questions and messaging to focus on facts or broader perspectives, not personal criticism.

• Benchmark virtual audience reactions against real-world data: The study used real Community Notes evaluation histories to build its simulated agents, suggesting that grounding synthetic personas in actual human behavior improves realism and reliability.

• Be cautious with emotionally charged or identity-based topics: Arguments about values, identity, or emotion are harder to “win” and more likely to produce backlash, so treat results in these domains with extra care and consider additional human validation.

• Use simulated research to explore and refine messaging: The study demonstrates rapid virtual testing of different strategies, which suggests teams could refine their messaging in a controlled environment before deploying it in real-world scenarios.
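The polarization check recommended in the list above can be sketched in a few lines. This is an illustrative assumption, not the study's metric: each segment reports a 1–5 agreement score with the original claim before and after a rebuttal, and a tactic is flagged when it widens the gap between the two segment means by more than a chosen tolerance. Segment names, tactic names, scores, and the 0.25 tolerance are all hypothetical.

```python
from statistics import mean

# Hypothetical 1-5 agreement with the original claim, per audience segment.
# Placeholder numbers for illustration only; not data from the study.
BEFORE = {"fans": [5, 4, 5], "skeptics": [2, 2, 3]}
AFTER = {
    "No Evidence": {"fans": [4, 4, 4], "skeptics": [2, 1, 2]},
    "Direct Negation": {"fans": [5, 5, 5], "skeptics": [1, 1, 1]},
}


def segment_gap(scores):
    """Absolute difference between the two segments' mean agreement."""
    return abs(mean(scores["fans"]) - mean(scores["skeptics"]))


def flag_widening(before, after, tolerance=0.25):
    """List tactics whose post-rebuttal gap exceeds the baseline gap by more than `tolerance`."""
    baseline = segment_gap(before)
    return sorted(
        tactic for tactic, scores in after.items()
        if segment_gap(scores) - baseline > tolerance
    )


print(flag_widening(BEFORE, AFTER))  # ['Direct Negation']
```

Comparing against the pre-rebuttal baseline, rather than only comparing tactics to each other, is what separates "this tactic is divisive" from "this audience was already divided."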

📄 Prompts

Prompt Explanation: The AI was prompted to generate counter-arguments for misinformation instances by applying a specific argumentative strategy, using few-shot examples for each pattern.

Given a misinformation instance $d_{i,l}$ and a counter-argument pattern $c$, generate a counter-argument text $r_{i,l,c}$ using a large language model (LLM). Use few-shot examples to provide clear definitions and illustrative examples of each counter-argument pattern.
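The generation step amounts to assembling one few-shot prompt per (misinformation, pattern) pair. Below is a minimal sketch, assuming a plain-text prompt layout; the `CounterPattern` class, `build_generation_prompt` function, the paraphrased "No Evidence" definition, and the example claims are all hypothetical, not the paper's actual prompt. The call to an actual LLM is deliberately omitted: pass the returned string to whichever client you use.

```python
from dataclasses import dataclass, field


@dataclass
class CounterPattern:
    """A counter-argument pattern with its definition and few-shot examples."""
    name: str
    definition: str
    # (misinformation, counter-argument) pairs used as demonstrations
    examples: list[tuple[str, str]] = field(default_factory=list)


def build_generation_prompt(misinformation: str, pattern: CounterPattern) -> str:
    """Assemble a few-shot prompt asking an LLM to rebut `misinformation` using `pattern`."""
    lines = [
        f"Counter-argument pattern: {pattern.name}",
        f"Definition: {pattern.definition}",
        "",
    ]
    for k, (claim, rebuttal) in enumerate(pattern.examples, start=1):
        lines.append(f"Example {k} misinformation: {claim}")
        lines.append(f"Example {k} counter-argument: {rebuttal}")
        lines.append("")
    # The target instance goes last; the model continues after "Counter-argument:".
    lines.append(f"Misinformation: {misinformation}")
    lines.append("Counter-argument:")
    return "\n".join(lines)


NO_EVIDENCE = CounterPattern(
    name="No Evidence",
    definition="Point out that the claim is not supported by any cited evidence.",
    examples=[(
        "Everyone who took the supplement got sick, so it causes illness.",
        "No study or data is offered linking the supplement to illness.",
    )],
)

prompt = build_generation_prompt(
    "Crime doubled after the new mayor took office, so the mayor caused it.", NO_EVIDENCE
)
print(prompt)
```

Keeping each pattern's definition and examples in one object makes it easy to loop over all ten patterns for every misinformation instance.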

Prompt Explanation: The AI was prompted to simulate stance-specific evaluation agents, leveraging real Community Notes user histories and stance-controlled instructions to realistically replicate diverse evaluation tendencies and cognitive biases.

Given a set of evaluation histories from a representative Community Notes evaluator, construct an LLM agent that simulates that evaluator's rating behavior. Use stance-specific prompt designs: the "Aligned" prompt induces an agreement bias toward the misinformation, while the "Independent" prompt keeps the agent stance-neutral. The agent rates each counter-argument's helpfulness on the scale 0 = "Not helpful", 1 = "Somewhat helpful", 2 = "Helpful".
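A stance-conditioned evaluator can be sketched as a prompt builder plus a parser for the 0–2 rating. This is a hedged illustration, not the paper's prompt: the stance instruction wording, the history format, and the function names `build_evaluator_prompt` and `parse_rating` are assumptions. Only the three-level helpfulness scale comes from the description above.

```python
import re

SCALE = {0: "Not helpful", 1: "Somewhat helpful", 2: "Helpful"}

# Hypothetical stance instructions paraphrasing the description above;
# the paper's actual wording is not reproduced here.
STANCE_INSTRUCTIONS = {
    "aligned": "You tend to agree with the original post, even when it may be misleading.",
    "independent": "Judge each note on its own merits without taking the post's side.",
}


def build_evaluator_prompt(stance, history, post, counter_argument):
    """history: list of (note_text, rating) pairs from one representative evaluator."""
    past = "\n".join(f"- Note: {note} -> Rated: {SCALE[r]}" for note, r in history)
    return (
        f"{STANCE_INSTRUCTIONS[stance]}\n"
        f"Your past ratings:\n{past}\n\n"
        f"Post: {post}\n"
        f"Counter-argument: {counter_argument}\n"
        "Rate its helpfulness: 0 = Not helpful, 1 = Somewhat helpful, 2 = Helpful. "
        "Answer with a single digit."
    )


def parse_rating(reply):
    """Return the first standalone 0, 1, or 2 in the model's reply, or None if absent."""
    match = re.search(r"\b[012]\b", reply)
    return int(match.group()) if match else None


history = [("The photo is from 2015, not this week.", 2), ("This note is just an opinion.", 0)]
print(build_evaluator_prompt("independent", history, "Example post", "Example rebuttal"))
```

Returning `None` instead of guessing makes unparseable replies visible, so they can be retried rather than silently counted as a rating.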

⏰ When is this relevant?

A financial services company wants to test how customers from different backgrounds react to a new investment product, with a focus on understanding if certain product explanations or rebuttals (e.g., addressing common concerns or misconceptions) persuade or polarize key segments such as risk-averse retirees, mid-career professionals, and young first-time investors.

🔢 Follow the Instructions:

1. Define audience segments: Create 3–5 AI persona profiles representing your main customer groups. For example:

• Risk-averse retiree: 68, retired teacher, values financial security, skeptical of new products.

• Mid-career professional: 42, engineer, interested in moderate growth, open to new ideas but wants clear facts.

• Young first-time investor: 27, digital marketer, tech-savvy, seeks quick results, open to trends.

2. Prepare the product concept and common objection: Write a short, clear description of the new investment product, followed by a typical customer concern or misconception (e.g., "This product seems too risky for people my age.").

3. Develop prompt templates for the test: For each persona, use this prompt structure:

You are simulating a [persona description].

Here is the new investment product: "[Insert product description here]"

A common concern raised is: "[Insert objection here]"

As a customer, please share your honest reaction to the product, the concern, and any follow-up questions you would ask. Respond in 3–5 sentences as if you are speaking in a customer interview.

Now, here are three types of rebuttal you will hear (delivered by a representative):

1. No Evidence rebuttal: ""There is no data supporting the claim that this product is especially risky for people your age.""

2. Alternative Cause rebuttal: "It's actually market volatility, not product design, that drives most short-term risk; this product helps smooth that out."

3. Direct Mitigation rebuttal: ""We have added a capital guarantee for customers in your age group, so you won't lose your initial investment.""

How do you feel about each rebuttal? Does any of them change your view? Why or why not?

4. Run the prompts for each persona: For each segment, generate 5–10 responses (varying the wording slightly if needed) to simulate a range of attitudes.

5. Analyze for persuasion and polarization: Review the responses and tag each one as "persuaded," "not persuaded," "neutral," or "polarized" (e.g., strongly for or against). Note which rebuttal type worked best for each persona and whether any created negative reactions.

6. Compare results across segments: Summarize which rebuttal strategies resonated most and which segments were hardest to persuade or most likely to become more entrenched.
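Steps 3, 5, and 6 can be sketched in Python: fill the prompt template for each persona, then tally the tags you assign during review. The persona and rebuttal texts are taken from the steps above; the dictionary keys, function names, and the `TAGS` list are hypothetical placeholders (the tags would come from your own review of model responses), and the LLM call itself is omitted.

```python
from collections import Counter, defaultdict

# Persona and rebuttal texts from the steps above; the keys are hypothetical labels.
PERSONAS = {
    "retiree": "risk-averse retiree (68, retired teacher, values financial security, skeptical of new products)",
    "mid-career": "mid-career professional (42, engineer, interested in moderate growth, open to new ideas but wants clear facts)",
    "young investor": "young first-time investor (27, digital marketer, tech-savvy, seeks quick results, open to trends)",
}
REBUTTALS = {
    "No Evidence": "There is no data supporting the claim that this product is especially risky for people your age.",
    "Alternative Cause": "It's actually market volatility, not product design, that drives most short-term risk; this product helps smooth that out.",
    "Direct Mitigation": "We have added a capital guarantee for customers in your age group, so you won't lose your initial investment.",
}
TEMPLATE = (
    "You are simulating a {persona}.\n\n"
    'Here is the new investment product: "{product}"\n\n'
    'A common concern raised is: "{objection}"\n\n'
    "As a customer, please share your honest reaction to the product, the concern, "
    "and any follow-up questions you would ask. Respond in 3-5 sentences as if you "
    "are speaking in a customer interview.\n\n"
    "Now, here are three types of rebuttal you will hear (delivered by a representative):\n\n"
    "{rebuttals}\n\n"
    "How do you feel about each rebuttal? Does any of them change your view? Why or why not?"
)


def build_prompt(persona_key, product, objection):
    """Step 3: fill the template for one persona."""
    rebuttals = "\n".join(
        f'{n}. {name} rebuttal: "{text}"'
        for n, (name, text) in enumerate(REBUTTALS.items(), start=1)
    )
    return TEMPLATE.format(persona=PERSONAS[persona_key], product=product,
                           objection=objection, rebuttals=rebuttals)


def persuasion_rate(counter):
    """Share of tagged responses marked 'persuaded' (0.0 if none were tagged)."""
    total = sum(counter.values())
    return counter["persuaded"] / total if total else 0.0


def summarize(tags):
    """Steps 5-6: tags is a list of (persona, rebuttal, tag) tuples from manual review.

    Returns the best rebuttal per persona and the (persona, rebuttal) pairs tagged 'polarized'.
    """
    counts = defaultdict(Counter)
    for persona, rebuttal, tag in tags:
        counts[(persona, rebuttal)][tag] += 1
    best, backfires = {}, []
    for persona in sorted({p for p, _, _ in tags}):
        best[persona] = max(REBUTTALS, key=lambda r: persuasion_rate(counts[(persona, r)]))
        backfires += [(persona, r) for r in REBUTTALS if counts[(persona, r)]["polarized"]]
    return best, backfires


# Hypothetical review tags, for illustration only.
TAGS = [
    ("retiree", "No Evidence", "neutral"),
    ("retiree", "Alternative Cause", "not persuaded"),
    ("retiree", "Direct Mitigation", "persuaded"),
    ("young investor", "No Evidence", "persuaded"),
    ("young investor", "Alternative Cause", "persuaded"),
    ("young investor", "Direct Mitigation", "polarized"),
]
print(summarize(TAGS))
```

Ties in persuasion rate resolve to the rebuttal listed first in `REBUTTALS`; with only 5–10 responses per segment, treat such ties as "no clear winner" rather than a real preference.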

🤔 What should I expect?

You will get a clear, easy-to-read breakdown of which product explanations or rebuttal types are most effective for each audience group, which arguments risk polarizing or alienating customers, and practical guidance on tailoring messaging to maximize persuasion and minimize backlash, all before running a real campaign or investing in large-scale customer research.