Large Language Models as Psychological Simulators: A Methodological Guide

postXiangyang He, Jiale Li, Jiahao Chen, Yang Yang, Mingming Fan

🔥 Key Takeaway:

The more you try to make your synthetic audience “realistic” with elaborate backstories or demographic detail, the less human-like its answers become—what actually closes the gap is testing your prompts in lots of weird, unexpected ways (typos, rephrasing, even mistakes), because it’s the model’s brittleness and surprising failures, not its depth of simulation, that best reveal what real people might say.

🔮 TLDR

This paper provides a practical framework for using large language models (LLMs) as psychological simulators, highlighting two main applications: simulating human roles/personas and modeling cognitive processes. Key actionable guidelines include: choose and document model versions carefully, as results differ dramatically across models and fine-tuning (e.g., RLHF) can introduce systematic biases; base personas on psychologically rich information (not just demographics) and validate outputs against real human data, especially for time-sensitive topics; systematically test prompt variations to ensure robustness, as models can be extremely sensitive to wording or language; analyze full response distributions rather than just averages, since LLMs often reduce human-like diversity and default to “correct answers”; and use customization (conditioning, fine-tuning) on real human datasets to boost alignment. Models cannot capture recent trends or events beyond their training cutoff, and may overrepresent WEIRD (Western, Educated, Industrialized, Rich, Democratic) perspectives and mainstream voices. Ethical risks include bias amplification, misrepresentation of marginalized groups, and lack of consent in training data; transparent reporting, community engagement, and independent validation are recommended. Overall, LLM-based simulations are best used for prototyping, hypothesis generation, or studying inaccessible populations, but always require human benchmarking and explicit attention to limits, bias, and ethical implications.

📊 Cool Story, Needs a Graph

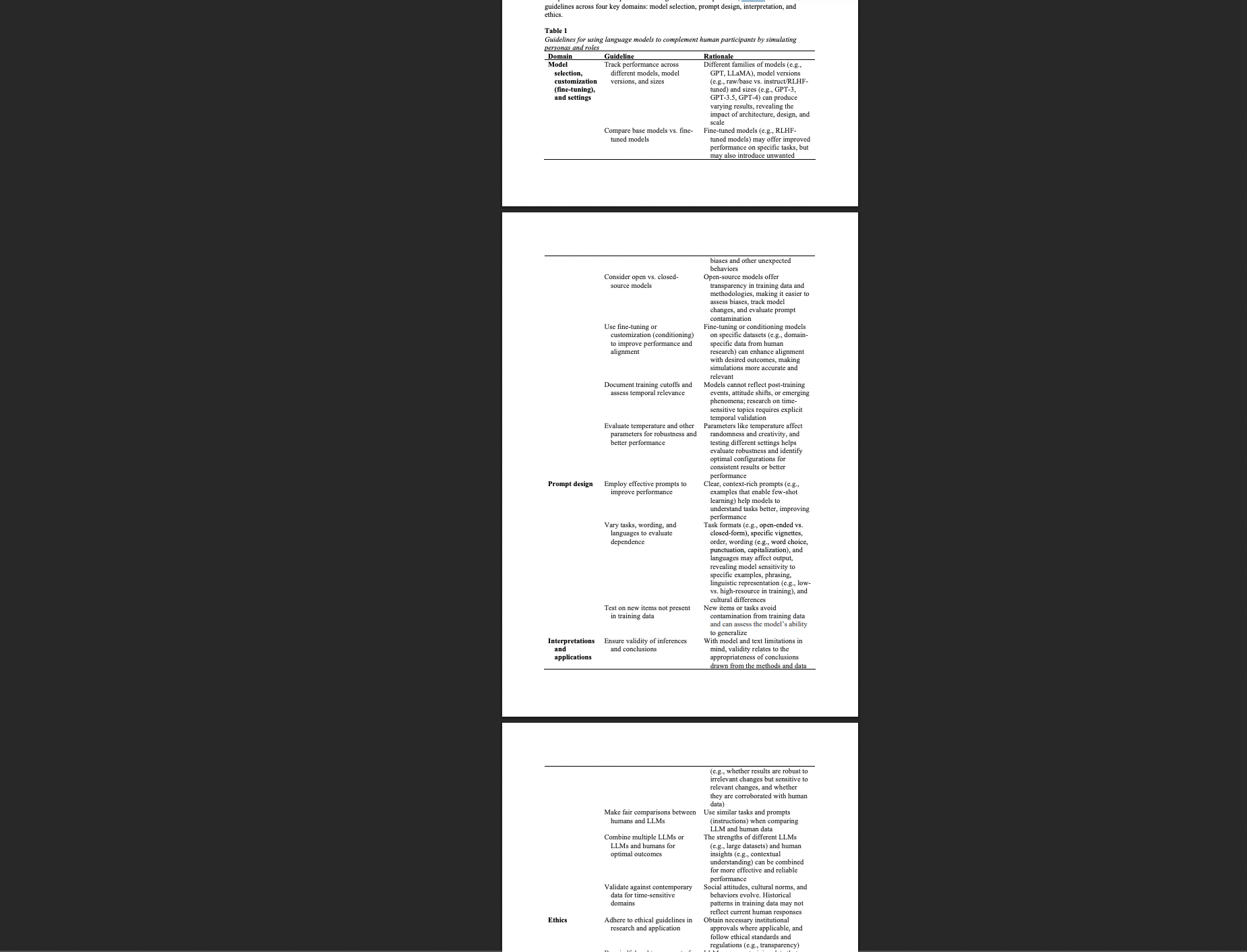

Table 1: "Guidelines for using language models to complement human participants by simulating personas and roles"

Methodological guidelines contrasting LLM simulation best practices with traditional and naive baselines.

Table 1 (page 6) presents a four-column matrix mapping out the critical domains of LLM-based persona simulation—model selection/customization, prompt design, interpretation/applications, and ethics—listing specific actionable guidelines and the rationale for each. This side-by-side format enables direct comparison between the paper’s recommended simulation framework and common baseline or naive practices, making clear where the proposed method diverges from or improves on traditional market research and AI simulation protocols. The table highlights the importance of model/version tracking, psychological grounding beyond demographics, robust prompt design, temporal validation, and explicit ethical auditing, offering a concise reference for experiment design and quality control.

⚔️ The Operators Edge

A detail that most experts might overlook is how the study repeatedly emphasizes the necessity of testing prompt variations—changing wording, order, language, and even including small “errors” like typos—when running synthetic persona experiments. Far from being a trivial robustness check, this strategy exposes the surprising brittleness of LLM outputs and reveals which insights are actually robust to real-world variability in how questions get asked.

Why it matters: Many practitioners focus on crafting the “perfect” prompt or persona, assuming that once you get a strong response, you can trust it as a proxy for human data. But the study shows (see Table 1, p. 6–7 and discussion p. 7–8) that LLMs are acutely sensitive to even tiny prompt changes, sometimes flipping their answers or collapsing diversity altogether. By intentionally varying prompts and observing which findings hold up, you uncover which insights are genuinely stable and which are just artifacts of wording. This is a hidden lever that separates reliable, actionable results from those that would crumble if a real customer or user phrased a question in a slightly different way.

Example of use: Suppose a market research team is testing slogans for a new soda. Instead of using one carefully-written survey prompt for their AI personas, they generate 10–20 variants (“What do you think of this slogan?” “How does this line make you feel?” “Would you buy a drink with this tagline?” etc.) and check whether positive/negative sentiment or preference rankings are consistent across all. If a finding persists, it’s much more likely to generalize to real-world conversations, where buyers will each interpret and ask in their own way.

Example of misapplication: A product team runs a synthetic A/B test with a single prompt per concept (“How appealing is this name for a new app?”), gets a clear winner, and uses it for launch messaging. But they never check how results change if the question is rephrased, if slang or typos are introduced, or if the persona is slightly tweaked. When the real-world campaign underperforms, it turns out the AI’s “confidence” was just prompt sensitivity—what looked like a robust preference was actually an artifact of overly narrow testing.

🗺️ What are the Implications?

• Invest in high-quality, context-rich prompts before running your study: The way questions are asked and the detail included in your instructions (so-called “few-shot” or example-driven prompts) directly impacts the realism and accuracy of synthetic audience responses. Spending time to set up clear, relevant, and scenario-based instructions pays off more than upgrading the AI model.

• Validate your simulated results with real human data whenever possible: Studies show that comparing AI-generated responses to recent human survey data helps catch issues with outdated or biased outputs. For new products or fast-changing markets, always run at least a small parallel human test.

• Don’t rely on one-off questions—test multiple wordings and scenarios: AI personas are especially sensitive to how questions are phrased, sometimes giving very different answers for small changes. To avoid misleading findings, run your key questions in varied formats and check for consistent results.

• Go beyond basic demographics when creating personas: Simulations grounded in richer psychological backstories or belief networks (not just age, gender, or location) are much closer to real-world responses. Consider using a “persona builder” step that includes values, attitudes, or past experiences.

• Choose your model intentionally and document what you use: Different AI models, even within the same family (e.g., GPT-3.5 vs GPT-4), can give widely different answers to the same prompt. Always note the version, and if possible, compare more than one to see if your findings hold up.

• Be cautious with time-sensitive or emerging trends: AI models cannot account for events or attitudes that happened after their last training update. For any study involving recent shifts—like new competitors, regulations, or social issues—treat simulated results as preliminary until checked against up-to-date human feedback.

• Use synthetic audiences for prototyping and early-stage testing, not final decisions: AI personas are powerful for exploring ideas, testing survey flows, and identifying obvious problems before expensive launches. But for high-stakes choices, always include a validation step with real people.

📄 Prompts

Prompt Explanation: Demonstrates how to prompt an LLM to role-play the persona of an adult from a specific country for the simulation of cross-cultural differences in psychological traits.

Please play the role of an adult from [the United States/South Korea].

Prompt Explanation: Elicits LLM-based role-play responses by simulating patients with cognitive-behavioral therapy (CBT)-informed models for mental health training scenarios.

You are a patient with [specific symptoms or diagnosis]. Please respond to the therapist's questions using your own words, expressing your feelings and thought patterns as realistically as possible based on your background and experiences.

Prompt Explanation: Instructs the LLM to simulate an opinion survey by responding as a persona with a detailed backstory, aiming to better match human response distributions.

You are [persona’s name], a [age]-year-old [gender] from [country or region], who works as a [occupation] and has the following background: [detailed backstory]. Please answer the following survey questions as this persona would.

Prompt Explanation: Directs the LLM to role-play an agent by seeding it with a single belief and demographic data, leveraging a human belief network for more accurate simulation of opinion formation.

You are an individual with the following demographic profile: [demographics]. Your belief about [topic] is: [seed belief]. Based on this information, answer questions about related social and political topics as you would, considering your belief network and background.

⏰ When is this relevant?

A subscription meal kit company wants to find out how different customer segments respond to a new plant-based menu option. They aim to simulate qualitative feedback from three AI persona groups: health-focused urban singles, busy suburban families, and price-sensitive college students. The team wants to know which aspects of the new menu resonate most and what objections or motivators emerge in each segment.

🔢 Follow the Instructions:

1. Define customer segments: Create brief persona profiles with relevant details for each group. For example:

• Health-focused urban single: 32, city dweller, active lifestyle, values nutrition and wellness, often tries new food trends.

• Busy suburban family: Parent, 40, two children, lives in suburbs, values convenience and family appeal, manages tight schedules.

• Price-sensitive college student: 21, lives off-campus, budgets carefully, prefers easy meals, open to plant-based but wary of cost.

2. Prepare a prompt template for each persona: Use the following structure:

You are a [persona description].

You have just learned about a new plant-based menu option from a subscription meal kit service. The menu features seasonal produce, global flavors, and quick-prep dinners. Meals are priced at $9.99 per serving with flexible delivery.

A market researcher is interviewing you for feedback.

Answer in 3–5 sentences as your persona, focusing on your real interests and concerns.

First question: What is your honest reaction to this new menu option? What stands out or makes you hesitant?

3. Run the simulation for each segment: Input each persona and prompt into your AI tool (e.g., ChatGPT, Gemini, or similar). For richer insights, generate 5–10 responses per persona type by slightly rewording the prompt or using different “randomness/temperature” settings.

4. Ask targeted follow-up questions: For each initial response, ask 1–2 follow-ups, such as:

• Would this menu make you more likely to try or switch meal kits?

• What would convince you to recommend it to friends or family?

• What concerns or dealbreakers would stop you from ordering?

5. Tag and summarize the feedback: Review and label answers with tags like “mentions price,” “interested in nutrition,” “concerned about preparation time,” or “positive about variety.” Identify patterns unique to each segment.

6. Compare findings across groups: Summarize which value propositions (e.g., health, convenience, price) generate the most positive reactions or resistance in each customer type.

🤔 What should I expect?

You’ll see which customer segments are most excited or skeptical about the plant-based menu, what specific features drive interest or hesitation, and how messaging could be tailored for each group. This allows you to refine product positioning, prioritize marketing messages, and identify key objections or motivators to address before launch or additional human research.