Self-consistency Improves Chain of Thought Reasoning in Language Models

postXuezhi Wang, Jason Wei, Dale Schuurmans, Quoc Le, Ed H. Chi, Sharan Narang, Aakanksha Chowdhery, Denny Zhou

Published: 2023-03-07

🔥 Key Takeaway:

The fast, “obvious” way to get reliable answers from AI personas—just ask once and trust the output—actually makes your results less trustworthy; the real shortcut is to deliberately make the AI contradict itself and then trust the answer that keeps coming back, because disagreement among sampled responses is a feature, not a bug, and consensus from chaos is more accurate than any single, “confident” answer.

🔮 TLDR

This paper introduces "self-consistency" as a method to improve reasoning accuracy in large language models, especially when using chain-of-thought (CoT) prompting. Instead of relying on a single answer generated by greedy decoding, self-consistency samples multiple diverse reasoning paths and then selects the most frequent final answer among them. Across arithmetic and commonsense reasoning benchmarks, this method consistently outperformed standard CoT prompting, with accuracy gains as high as +17.9% (GSM8K), +12.2% (AQuA), and +11.0% (SVAMP) on state-of-the-art models like PaLM-540B and GPT-3. Self-consistency outperformed sample-and-rank, beam search, and ensemble methods, and the benefit increased with both the number of sampled paths (up to 40) and model scale. The approach is robust to different sampling strategies, prompt variations, and even helps when prompts are imperfect or in zero-shot settings. Actionable takeaways: when using LLMs for simulated user research or reasoning tasks, generate and aggregate multiple “reasoning paths” per persona or question, then take the majority answer—this boosts both reliability and real-world fidelity of synthetic responses, and can serve as a built-in measure of uncertainty (low agreement signals low model confidence).

📊 Cool Story, Needs a Graph

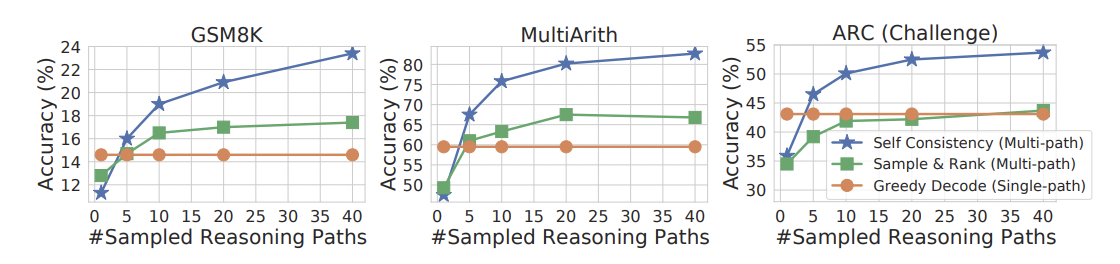

Figure 3: "Self-consistency significantly outperforms sample-and-rank with the same # of samples."

Line plots show self-consistency outperforms both sample-and-rank and greedy decode on three benchmarks as the number of sampled reasoning paths increases.

Figure 3 presents overlaid line charts for GSM8K, MultiArith, and ARC (Challenge), plotting accuracy as a function of the number of sampled reasoning paths for three methods: self-consistency, sample-and-rank, and greedy decode. The figure clearly demonstrates that self-consistency provides the largest and most consistent gains, with accuracy rising sharply and surpassing both sample-and-rank (which gives only modest improvements) and the single-path greedy baseline (which remains flat). This single view directly contrasts the proposed method against leading alternatives, highlighting its superiority across diverse tasks and sampling scales.

⚔️ The Operators Edge

A critical but easily overlooked detail is that the method’s success doesn’t just come from sampling multiple answers—it hinges on the fact that correct reasoning paths, even when phrased differently, tend to converge on the same final answer, while errors are scattered and less likely to align. In other words, the “wisdom of the crowd” effect emerges only because the AI’s errors are diverse but the truths are self-reinforcing, making majority-vote aggregation a powerful filter.

• Why it matters: Most experts might assume that generating more answers just “averages out” noise, but the real reason self-consistency works is that the distribution of model errors is broad and inconsistent, while correct solutions—no matter how they’re worded or reasoned—are more likely to repeat, making consensus a strong indicator of truth. This subtle statistical property is what lets majority-vote outperform even probability-based or weighted approaches, especially as model scale grows.

• Example of use: In a product concept test, a team simulates 20 AI customer interviews for each new idea, then looks for points of strong agreement (e.g., “I would buy this for the convenience,” or “Too expensive for what it offers”). If 15/20 simulated personas independently converge on “I would buy for the convenience,” the team can trust this as a likely real-world signal—even if the exact reasoning or phrasing varies.

• Example of misapplication: A team runs 20 AI simulations per concept but, instead of aggregating by majority answer, picks the response with the highest model probability (or the “best-sounding” answer). This can let through one-off errors or idiosyncratic mistakes, since model confidence doesn’t reliably distinguish truth from plausible-sounding errors—missing the filtering power of collective agreement and leading to overconfidence in spurious outputs.

🗺️ What are the Implications?

• Always collect multiple AI responses instead of just one: Instead of taking the first answer an AI persona gives, sample several different “reasoning paths” for each question and see which answer comes up most often. This simple change boosted accuracy by up to 18 percentage points in benchmark tests.

• Use “majority vote” to pick the final answer: When you have several AI responses, don’t just pick the one that sounds best—let the most common answer win. The majority approach consistently outperformed both picking at random and more complex ranking methods.

• Even a small number of samples helps: You don’t need to generate dozens of responses to see a benefit—sampling just five or ten reasoning paths per question captures most of the gains, so this is practical even for large-scale studies.

• Self-consistency works with any standard AI or prompt: This technique improved results across all tested language models, from open-source to the most advanced commercial AIs, and with both perfect and imperfect prompts, making it broadly applicable to any virtual audience study.

• Get a built-in confidence score for free: If your sampled AI responses mostly agree, you can be more confident in the result. If they’re split, the question itself or the scenario may be confusing or ambiguous—flag these cases for further review or human validation.

• Don’t rely on ensembling or prompt tweaking alone: The study found that simply shuffling prompts or combining answers from multiple models had much less impact than the self-consistency approach, so focus resources on sampling and aggregation, not just prompt engineering.

• Applies to both qualitative and quantitative research: Whether you’re running a brand survey, pricing study, or usability test, using self-consistency to aggregate AI persona responses will yield findings that better reflect real-world human diversity and judgment.

📄 Prompts

Prompt Explanation: The AI was prompted to generate a chain-of-thought style answer to arithmetic and commonsense reasoning questions, providing detailed step-by-step reasoning before delivering the final answer.

Q: If there are 3 cars in the parking lot and 2 more cars arrive, how many cars are in the parking lot?

A: There are 3 cars in the parking lot already. 2 more arrive. Now there are 3 + 2 = 5 cars. The answer is 5.

Q: Janet’s ducks lay 16 eggs per day. She eats three for breakfast every morning and bakes muffins for her friends every day with four. She sells the remainder for $2 per egg. How much does she make every day?

A:

She has 16 - 3 - 4 = 9 eggs left. So she makes $2 * 9 = $18 per day.

She eats 3 for breakfast, so she has 16 - 3 = 13 left. Then she bakes muffins, so she has 13 - 4 = 9 eggs left. So she has 9 eggs * $2 = $18.

This means she she sells the remainder for $2 * (16 - 4 - 3) = $26 per day.

The answer is $18.

The answer is $26.

The answer is $18.

The answer is $18.

Prompt Explanation: The AI was given a set of manually written chain-of-thought exemplars and then prompted to solve new questions by generating reasoning paths that mirror the style of the exemplars.

Q: There are 15 trees in the grove. Grove workers will plant trees in the grove today. After they are done, there will be 21 trees. How many trees did the grove workers plant today?

A: We start with 15 trees. Later we have 21 trees. The difference must be the number of trees they planted. So, they must have planted 21 - 15 = 6 trees. The answer is 6.

Q: Leah had 32 chocolates and her sister had 42. If they ate 35, how many pieces do they have left in total?

A: Leah had 32 chocolates and Leah’s sister had 42. That means there were originally 32 + 42 = 74 chocolates. 35 have been eaten. So in total they still have 74 - 35 = 39 chocolates. The answer is 39.

Q: Jason had 20 lollipops. He gave Denny some lollipops. Now Jason has 12 lollipops. How many lollipops did Jason give to Denny?

A: Jason had 20 lollipops. Since he only has 12 now, he must have given the rest to Denny. The number of lollipops he has given to Denny must have been 20 - 12 = 8 lollipops. The answer is 8.

Prompt Explanation: The AI was provided with multiple-choice commonsense or symbolic reasoning questions and instructed to output a chain-of-thought rationale followed by a final answer.

Q: The man laid on the soft moss and looked up at the trees, where was the man? (a) niagra falls (b) forest (c) waterfall (d) ground (e) tree

A: The answer must be a place where the man is laying down and looking up at trees. Of the above choices, only forest is a place where people lay down and look at trees. So the answer is (b).

Q: Which of these will most likely harm a habitat? (a) planting trees. (b) water pollution. (c) rainfall. (d) sunlight.

A: The answer is (b), water pollution, because it will most likely harm the habitat. The other answers are not likely to harm the habitat.

Prompt Explanation: The AI was asked to answer symbolic reasoning questions, such as string manipulation or logic puzzles, by generating a step-by-step rationale and a final answer.

Q: Which process in the carbon cycle takes the longest to complete? (a) emission of waste. (b) respiration in animals. (c) photosynthesis in plants. (d) formation of fossil fuels.

A: The process that takes the longest time to complete is the formation of fossil fuels, which happens over millions of years. So the answer is (d).

Prompt Explanation: The AI was prompted to answer closed-book and natural language inference questions by providing a rationale and selecting from multiple-choice options, simulating a thoughtful respondent.

Premise:

""A person on a horse jumps over a broken down airplane.""

Based on this premise, can we conclude the hypothesis ""A person is training his horse for a competition."" is true?

OPTIONS:

- yes

- no

- it is not possible to tell

A: The person is not necessarily training his horse. The answer is it is not possible to tell.

Q: does system of a down have 2 singers?

A: System of a Down currently consists of Serj Tankian, Daron Malakian, Shavo Odadjian and John Dolmayan. Serj and Daron do vocals, so the band does have two singers. The answer is yes.

⏰ When is this relevant?

A national bank wants to understand how different types of small business owners would react to a new low-interest loan product, especially in terms of perceived value, trust in the brand, and likelihood to apply. They want to use AI personas representing three key segments: first-time entrepreneurs, established local retailers, and tech startup founders.

🔢 Follow the Instructions:

1. Define persona segments: Write simple profiles for each audience segment reflecting real-world diversity and priorities.

• First-time entrepreneur: 29, recently started an online business, cautious about debt, values clear guidance.

• Established local retailer: 52, owns a neighborhood grocery, loyal to traditional banks, risk-averse, values relationships.

• Tech startup founder: 34, runs a SaaS company, comfortable with digital-first products, ambitious growth plans, values speed and flexibility.

2. Prepare the prompt template for each persona:

You are simulating a [persona description].

Here is the new product being tested: ""A business loan offering 2.9% fixed interest, no fees for early repayment, and same-day approval for amounts up to $250,000. Available to all small business owners nationwide.""

You are being interviewed by a market researcher.

Respond as yourself, in 3–5 sentences, sharing your honest thoughts, feelings, and likely next steps.

First question: What is your first impression of this loan offer?

3. Generate multiple AI responses per persona: For each persona, use an AI model (such as GPT-4) to generate 8–10 unique responses to the initial prompt. Instruct the technical team to set the model to sample (not just pick the top answer) so you get a variety of reasoning paths and tones.

4. Apply self-consistency aggregation: Review the responses for each persona and identify the most frequent or thematically consistent answers (e.g., ""would apply immediately,"" ""concerned about hidden fees,"" ""needs more info before deciding""). Optionally, tag responses by theme.

5. Ask one follow-up question per persona: Use a follow-up like: ""What concerns or questions would you want answered before deciding to apply?"" Repeat the sampling for each persona and again aggregate the most common or representative concerns.

6. Compare and summarize findings: For each segment, summarize the dominant reactions and top concerns, highlighting differences and overlaps. For example, first-time entrepreneurs may need more hand-holding, while tech founders might focus on approval speed.

🤔 What should I expect?

The team will see which business owner types are most receptive to the product, what drives trust or hesitation, and what information or messaging gaps exist before launch. This allows for targeted marketing, improved FAQ design, and more efficient follow-up research, all without needing to recruit actual participants for initial qualitative insights.