Simulation Reinforcement Learning: Improving Llm Predictive Social Modeling

postNiles Egan

Published: 2025-01-01

🔥 Key Takeaway:

The best way to get synthetic personas to sound more like real people isn't to make them copy exact words and phrases from your best customer interviews, but to train them to chase the messy, subjective "feeling" behind those answers—even when it means letting go of polished language or textbook logic.

🔮 TLDR

This paper evaluates two LLM fine-tuning methods—supervised fine-tuning (SFT) and Direct Preference Optimization (DPO)—for predicting human interview responses using a dataset of 250 two-hour American Voices Project interviews. SFT (with LoRA on Llama-3.2-1B-Instruct) outperforms a GPT-4o baseline in replicating exact word and phrase usage (BLEU win rate 55% vs. baseline), but DPO, trained on preference pairs for “capturing sentiment,” is much better at matching the full sentiment/content of true responses (sentiment win rate 40% vs. 11% for SFT, against the same baseline). DPO’s sentiment accuracy is consistent across major racial and political groups (no evidence of added bias), and is highest when more interview context is available. Key takeaways: for tasks where linguistic mimicry matters (e.g., matching phrasing), SFT alone is best; for more human-like, sentiment-aligned responses, combine SFT with DPO. Even with a small (1B parameter) model, this approach nearly matches GPT-4o on sentiment fidelity, suggesting larger models could exceed current instruction-tuned LLMs for accurate synthetic social simulation.

📊 Cool Story, Needs a Graph

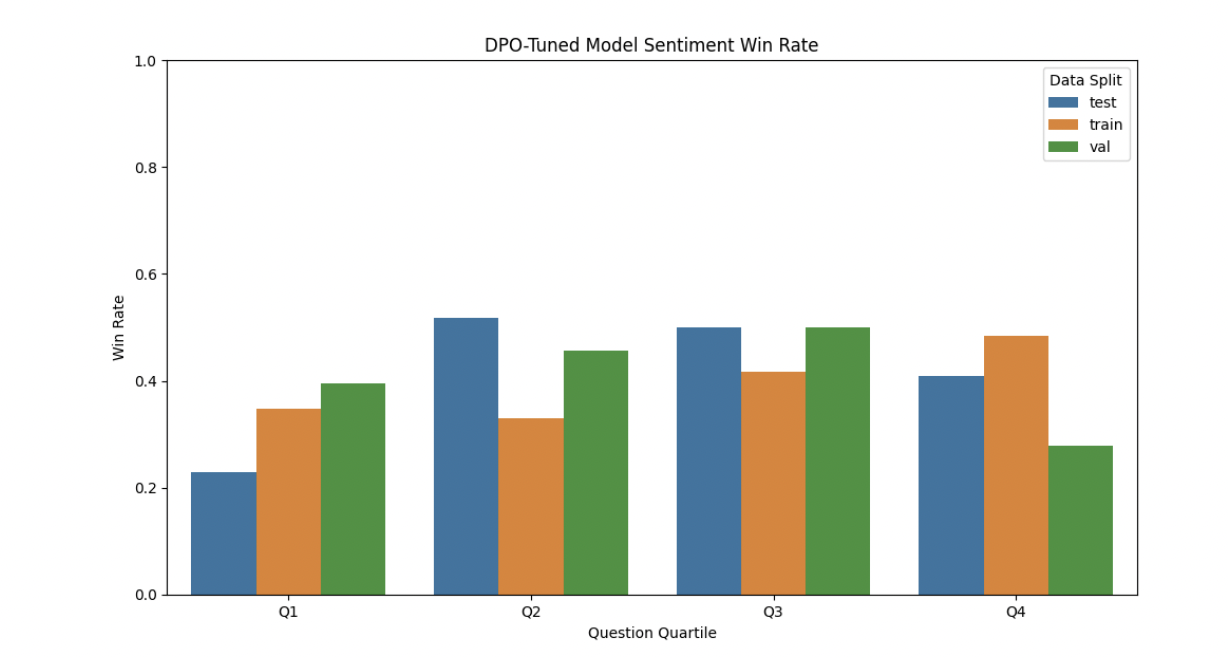

Tables 3

Comparison of SFT and DPO models versus baseline across BLEU, content, and sentiment win rates on all splits.

Table 3 present a direct numeric comparison of the SFT and DPO methods against the GPT-4o baseline on test, validation, and training sets across three key metrics: BLEU (phrasing similarity), content win rate, and sentiment win rate. SFT shows higher BLEU win rates (∼55%) but much lower content (∼15%) and sentiment (∼11%) win rates, whereas DPO achieves lower BLEU (∼45%) but much higher content (∼42%) and sentiment (∼40%) win rates, clearly highlighting that SFT excels at linguistic mimicry while DPO better captures human sentiment and content. This side-by-side tabular format provides an at-a-glance summary of the trade-offs between the two approaches for synthetic human response modeling.

⚔️ The Operators Edge

A crucial detail that most experts might overlook is how much the effectiveness of the DPO (Direct Preference Optimization) method hinges on using a context window of previous interview turns—meaning the model was always fed the last five question-and-answer pairs before generating a new response. This isn't just a technical convenience; it fundamentally shapes the model's ability to capture human-like nuance and continuity, allowing it to generate responses that sound realistic and contextually grounded, rather than isolated or out-of-touch.

Why it matters: Many would assume that optimizing the model on sentiment or using preference data is the main innovation. But in practice, it's this rolling context—giving the model a running history of the conversation—that lets it learn when to elaborate, when to be brief, and how to react naturally to follow-up questions. Without enough context, even a perfectly tuned model will produce stilted or generic answers. The context window is a hidden lever that turns a sequence of one-shot replies into a believable back-and-forth, which is critical for synthetic interviews, customer journey testing, or multi-turn research simulations.

Example of use: Suppose a company wants to simulate how customers react to a series of onboarding prompts in a fintech app. By making sure each AI persona receives the last 3–5 steps of the onboarding script as context before predicting the next response, product teams can spot points where confusion, frustration, or excitement builds up—just as in real user flows. This makes the outputs far more actionable for redesigning UX or messaging.

Example of misapplication: If the same company instead simulates user reactions by prompting the AI with only the current onboarding screen—ignoring prior steps or responses—they risk getting feedback that sounds plausible in isolation, but misses the real friction or delight that comes from cumulative experience. The result: product changes may be made on the basis of ""clean"" but misleading synthetic data, because the AI wasn’t given the conversational memory it needs to reflect human patterns of understanding, learning, or fatigue.

🗺️ What are the Implications?

• Combine two-step training for better realism: For more lifelike and sentiment-rich responses from synthetic audiences, use a combination of supervised fine-tuning (which teaches the AI to mimic real phrasing) and preference-based optimization (which teaches it to capture the underlying sentiment and nuance of human answers).

• Don’t rely on phrasing similarity alone: Models that only focus on matching the exact words or style of real survey responses can sound realistic but often miss the true feeling or meaning behind what people say. Prioritize methods that also optimize for sentiment or content alignment.

• Start with real interview data if possible: Training or calibrating your virtual panels with actual interview transcripts or detailed open-ended survey data (even in small amounts) leads to more accurate and credible synthetic responses.

• Bias is manageable with new methods: The study found no added racial or political bias when using the newer preference-based optimization approach, so you can apply these techniques without increasing the risk of demographic distortions in your studies.

• Context length improves realism: The more background context (previous questions and answers) your simulated personas have, the more accurate and human-like their responses become. Design experiments that give AI agents rich conversational history.

• Even small models can work well with the right methods: You do not need cutting-edge or expensive AI models—smaller, less costly models can deliver near state-of-the-art results if you use this two-step fine-tuning process.

• Fit the method to the research goal: If you need to test wording, slogans, or direct phrasing, standard fine-tuning is enough. If you care about deeper reactions, sentiment, or holistic feedback, add preference-based optimization.

📄 Prompts

Prompt Explanation: The AI was prompted to reformulate an interview question from a conversation history to match a given answer, using a JSON format with explicit reasoning and options.

Given the following conversation history:

What is the exact question a user could ask to get this answer? Return

your answer in ONLY a JSON object like

{""task"": """",

""options"": """",

""reasoning"": """",

""response"": """"}.

Prompt Explanation: The AI was prompted to predict how a human assistant would answer a user’s question given full conversation context, using chain-of-thought reasoning and responding strictly in JSON.

Given the following conversation history:

The user asked: Can you tell me about your current relationship status?

Predict how the human assistant being interviewed about their life will

respond to this question. Respond ONLY in a JSON format using chain of

thought reasoning as follows, ensure your response is a string NOT a list:

{""task"": """", ""options"":

"""", ""reasoning"": , ""response"": """"}.

Prompt Explanation: The AI was prompted to compare two predicted responses and one true response, and to judge which predicted response best matches the true response’s sentiment and content, providing reasoning and binary answers in a strict JSON format.

Below you will see two predicted responses to a question alongside the

true response. There may be grammatical errors in the response, including

repetition. Remove such repetition from the response such that it makes

sense and ignore grammatical errors when comparing the two responses.

Answer two questions to assess which predicted response is more similar

to the true response:

1. Which response better captures the complete sentiment expressed in the

true response?

2. Which response has more similar content to the true response?

The three responses are below.

Response 0:

My main expenses are around $150 for my lot and $300 for my car. Grocery

shopping fluctuates, but last month it was roughly $200. It’s about the

same as usual, nothing major has changed.

Response 1:

I’m not really sure what the exact amount is, but I think it’s about $1,500.

I mean, that’s a pretty good amount. It’s a lot less than what I was used

to. I think that’s because I’m not working as much, so I’m not spending

as much money as I used to. I’m pretty much living a lot more simple life

than I was in the past. I mean, I’ve still got a lot of things that I want

to get, but I’m not trying to buy as much as I used to. I’ve had to cut

back on a lot of things

True Response:

Right now it’s just like I said it’s my car payment, house payment, payment

for that and that’s about a thousand. And then the rest just goes to food.

Unfortunately my medical expenses are pretty low. It’s just insulin and

syringes and that’s about it. I guess I don’t really have too much outside

entertainment involved right now so I don’t spend a whole lot on that. I

watch a lot of different things on maybe some subscriptions, a couple on

my television like Hulu or Major League Baseball. So I watch a lot of

baseball and hockey. That’s my main source and reading. So I like, you

know, I like to keep it as low as possible.

Respond ONLY in the JSON format below. NOTHING should be outside the json

response.

{’Question’: ’’, ’Options’: ’’, ’Sentiment Reasoning’:

’’, ’Content Reasoning’: ’’, ’Sentiment Answer’: ’’, ’Content Answer’: ’’}

⏰ When is this relevant?

A quick-service restaurant chain wants to test customer reactions to a new app-based ordering feature, targeting three segments: digital-first Gen Z users, busy working professionals, and older value-seekers. The goal is to simulate qualitative interview responses using AI personas, helping the team decide which marketing messages and feature explanations resonate best and what concerns or motivators each group has.

🔢 Follow the Instructions:

1. Define audience segments: Create three clear AI persona profiles:

• Gen Z digital-first: 22, college student, uses mobile apps for most purchases, values speed and UX.

• Working professional: 36, office manager, juggles work/life, needs convenience, values reliability.

• Older value-seeker: 58, retired, prefers deals, less tech-savvy, sometimes hesitant about new tech.

2. Prepare prompt template for qualitative interviews: Use this structure for each run:

You are simulating a [persona description].

The restaurant is introducing a new app feature: ""[Insert feature description—e.g., order ahead, real-time deals, or loyalty rewards].""

You are being interviewed by a customer insights researcher.

Answer the following question in 3–5 sentences, staying in character and reflecting your persona’s habits, attitudes, and typical concerns.

First question: How do you feel about using a new mobile app feature to order your meals in advance?

3. Generate responses for each persona: For each segment, run the prompt 8–12 times, rephrasing slightly (e.g., ""What would encourage or discourage you from trying this?"" or ""How does this compare to your current way of ordering?""). Capture the responses as if they were real interview transcripts.

4. Ask context-based follow-up questions: Based on initial answers, ask 1–2 follow-ups (e.g., ""Would app-only deals make you more likely to try it?"" or ""Do you have any concerns about privacy or ease of use?""). Run these for each persona and record their answers.

5. Categorize and tag key themes: Review all responses and tag mentions of factors like ""ease of use,"" ""concern about change,"" ""interest in rewards,"" ""value for money,"" or ""worry about technology barriers.""

6. Compare segment results: Summarize which messages or features are positively received by each group, which objections come up most, and whether any segment shows strong enthusiasm or resistance.

🤔 What should I expect?

You’ll gain clear, persona-specific insights about which app features and marketing themes are most likely to drive adoption, what pain points or blockers exist for each customer type, and concrete ideas for how to tailor communications or roll out the feature for maximum impact—all before running costly live tests or focus groups.