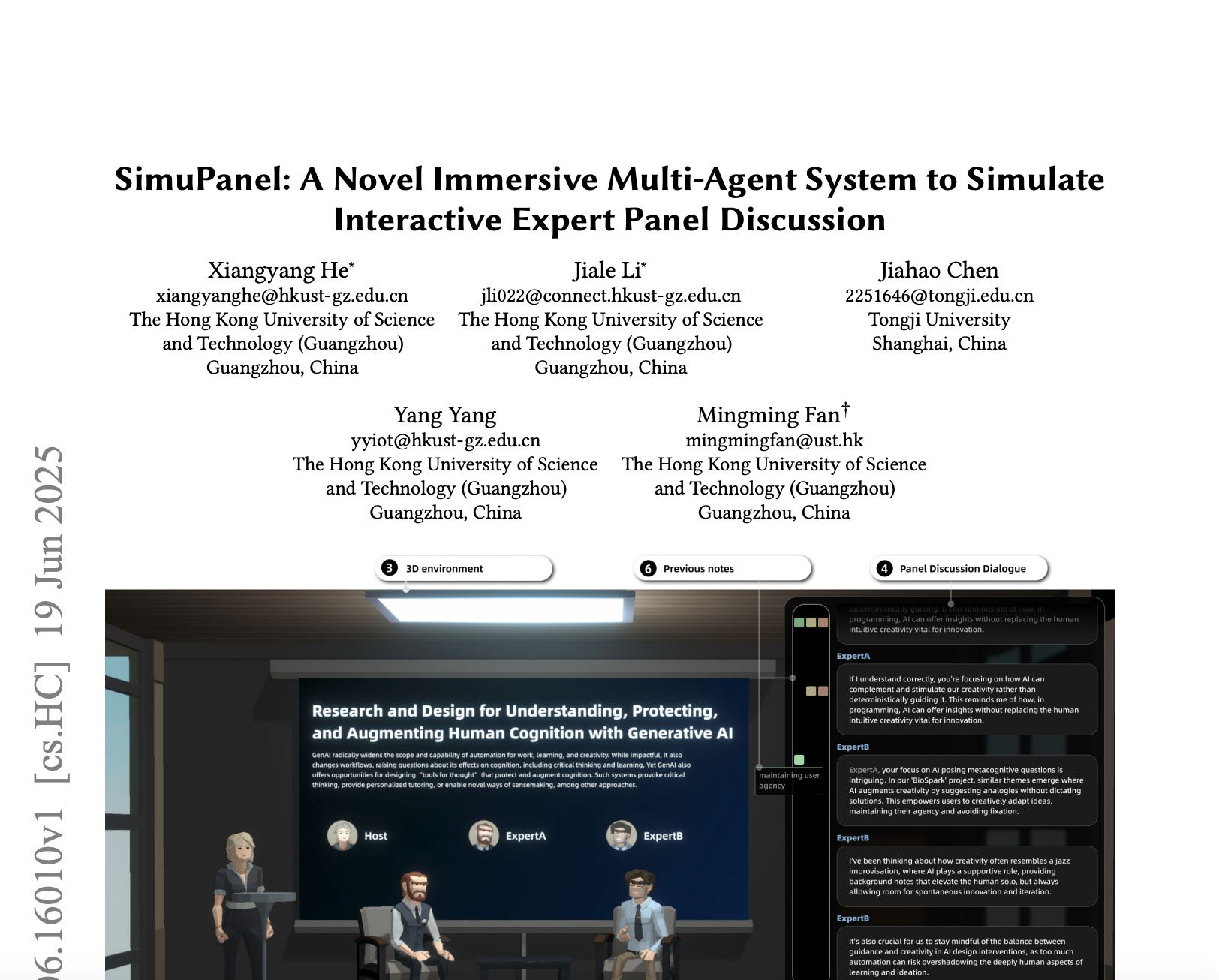

SimuPanel: A Novel Immersive Multi-Agent System to Simulate Interactive Expert Panel Discussion

Published: 2025-06-19

🔥 Key Takeaway:

If you want your AI personas to generate the most authentic, nuanced feedback, don't just make them "smarter" or pile on more data—force them to argue, critique, and disagree like real people in a panel; it's the friction, not the facts, that unlocks real-world insight.

🔮 TLDR

SimuPanel is a system that simulates academic panel discussions using LLM-based multi-agent interactions, where each expert agent is built with a two-layer persona (data-driven domain knowledge plus model-generated research interests) and modular reasoning processes (recall, analysis, evaluation, inference).

Technical evaluation (Table 1, page 7) shows that panels using the full reasoning chain (all modules combined) outperform simpler setups by 7–31 points across six dimensions (specificity, relevance, flexibility, coherence, informativeness, depth of analysis), with especially large gains in informativeness and analytical depth. A user study with 10 graduate students found that 8 of 10 showed clear learning gains and that the system scored highly on usability (mean SUS = 88), but some participants noted that the simulated conversations could become repetitive or overly abstract, especially for users lacking prior knowledge.

Actionable insights: to improve realism and informativeness in simulated group discussions, use integrated multi-stage reasoning (not just recall or demographics), ground personas in both factual and epistemic traits, and structure the dialogue flow with a host agent that actively moderates for balance, depth, and engagement. Systems like this are best for users with some background knowledge seeking new perspectives or inspiration, and less suited to those needing concrete step-by-step problem-solving.

📊 Cool Story, Needs a Graph

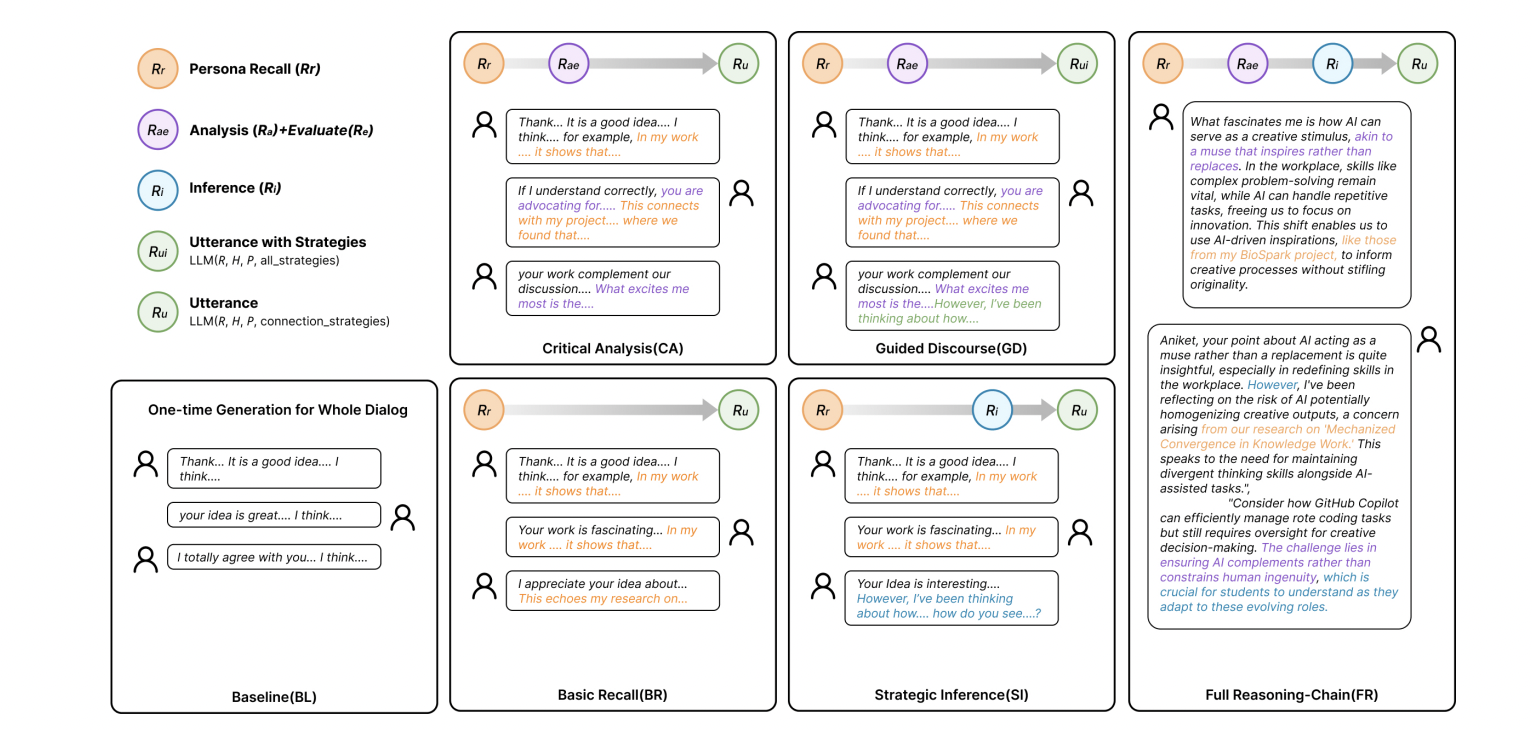

Figure 2: "The figure illustrates the reasoning workflows of the six strategies evaluated in our ablation study."

Visual comparison of reasoning strategies and dialogue outputs for all baselines and the proposed method.

Figure 2 on page 5 presents a side-by-side diagram of all six panel simulation strategies, from the simplest baseline to the proposed full reasoning-chain method. Each box shows the workflow components (e.g., recall, analysis, inference) together with representative dialogue samples, with color-coded highlights marking each module's contribution. The layout makes it easy to see at a glance how the stepwise addition of reasoning modules (recall, analysis, evaluation, inference) separates the proposed approach from the baselines, and how those differences show up as richer, more interactive dialogue.

⚔️ The Operators Edge

A detail that’s easy to miss but crucial to why SimuPanel works is the way it combines a multi-stage reasoning pipeline with “discussion strategies” that are actively selected and varied at each turn—not just having each agent answer from their own data, but having them explicitly choose whether to question, agree, critique, or synthesize in response to what others say (see Figure 2 and the ablation study, pages 5–7). This architecture forces agents to interact like real panelists, surfacing disagreements, consensus, or new directions, rather than drifting into repetitive, generic answers.

Why it matters: Most AI-driven market research or user testing simulations focus on persona accuracy or knowledge recall, but this study shows the real leap in realism and insight comes from modeling the “moves” of conversation itself. By making the agents dynamically pick strategies—sometimes challenging, sometimes building on others—they generate the kind of friction, convergence, and emergent ideas that real groups produce and that often lead to actionable, non-obvious findings for businesses.

Example of use: In a simulated feedback session for a new consumer electronics product, you could set up AI personas to not only give their own opinions but also react to each other’s statements using a set of explicit strategies (e.g., “raise a concern about privacy,” “agree and add a personal anecdote,” “challenge a claim about price”). This can reveal whether certain objections are robust, which ideas quickly gain consensus, or what messaging triggers debate—all crucial for refining product positioning or anticipating market pushback.

Example of misapplication: If you instead prompt each persona independently, asking for their feedback in isolation without any inter-agent interaction or strategy selection, you’ll get lots of agreeable, surface-level responses that sound plausible but miss the group dynamics that drive real decision-making. This can lead stakeholders to overestimate support or miss hidden objections, because the simulation never surfaces the tension points or persuasive cascades that often make or break products in the market.
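The per-turn strategy selection described in this section can be sketched as a round-robin loop in which every agent sees the shared transcript and must declare one discourse strategy before speaking. This is a minimal sketch under stated assumptions, not SimuPanel's implementation: the `llm` callable, the `STRATEGY:` reply format, and the names `build_turn_prompt`, `parse_reply`, and `run_discussion` are hypothetical, and a fuller version would run the recall and analysis steps before the strategy choice.

```python
from dataclasses import dataclass
from typing import Callable

# The five discourse strategies named in the prompt templates of this post.
STRATEGIES = ("question", "answer", "scholarly agreement", "constructive critique", "synthesis")


@dataclass
class Turn:
    speaker: str
    strategy: str
    text: str


def build_turn_prompt(persona: str, topic: str, history: list[Turn]) -> str:
    """Show the agent the discussion so far and require an explicit strategy choice."""
    transcript = "\n".join(f"{t.speaker} ({t.strategy}): {t.text}" for t in history)
    return (
        f"Persona: {persona}\nTopic: {topic}\n"
        f"Discussion so far:\n{transcript or '(no turns yet)'}\n"
        f"Reply to the previous speaker using one strategy from: {', '.join(STRATEGIES)}.\n"
        "Put 'STRATEGY: <name>' on the first line, then your utterance."
    )


def parse_reply(speaker: str, reply: str) -> Turn:
    """Split a reply into its declared strategy and utterance; reject unknown strategies."""
    first, _, rest = reply.partition("\n")
    strategy = first.removeprefix("STRATEGY:").strip().lower()
    if strategy not in STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return Turn(speaker, strategy, rest.strip())


def run_discussion(personas: dict[str, str], topic: str, rounds: int,
                   llm: Callable[[str], str]) -> list[Turn]:
    """Round-robin panel: every agent reacts to the shared transcript, never in isolation."""
    history: list[Turn] = []
    for _ in range(rounds):
        for name, persona in personas.items():
            history.append(parse_reply(name, llm(build_turn_prompt(persona, topic, history))))
    return history
```

Replacing `run_discussion` with one independent call per persona reproduces the misapplication above: no agent ever sees another's claim, so there is nothing to question, critique, or synthesize.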

🗺️ What are the Implications?

• Build personas with layered backgrounds, not just demographics: Simulations that model both the detailed expertise (e.g., work history, research interests) and higher-level beliefs of synthetic participants resulted in richer, more realistic group responses than those using only surface-level traits.

• Use multi-step, reasoning-driven prompts for AI personas: The most accurate and human-like results were achieved when simulated agents followed a structured thought process—recalling relevant knowledge, analyzing, evaluating, and then responding—rather than just giving one-shot or shallow answers.

• Include a “moderator” or host agent to keep group feedback on track: Simulations with an active host that guides discussion, balances participation, and keeps focus on the topic produced more coherent and actionable insights, similar to well-run focus groups.

• Don’t rely solely on basic recall or randomized responses: Experiments using only generic recall of information or random persona assignment consistently underperformed, missing context and nuance needed for real-world prediction.

• Expect the best ROI in early-stage ideation, trend spotting, and open-ended research: These synthetic panels are strongest when used to surface new ideas, test broad reactions, or explore “what if” scenarios—especially when the target audience already has some foundational knowledge.

• For detailed step-by-step or highly technical studies, supplement with human data: The simulated approach was less effective for tasks needing granular, methodical breakdowns (like usability audits or technical walkthroughs), so use a hybrid strategy for these cases.

• Invest in structuring your synthetic audience and study design upfront: The architecture of your simulated panel—how you build personas, set up the dialogue, and moderate the flow—matters more for realism and accuracy than which specific AI model you use.
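The layered-persona and host-agent bullets can be sketched together. This is an illustration under assumptions, not the paper's design: the `LayeredPersona` field names, the `Host` class, and its "give the floor to whoever has spoken least" rule are hypothetical stand-ins for the balancing role the host plays.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class LayeredPersona:
    """Two layers: factual background plus higher-level beliefs (field names are illustrative)."""
    name: str
    expertise: list[str]   # factual layer, e.g. work history or publication topics
    beliefs: list[str]     # epistemic layer, e.g. research interests or stances

    def render(self) -> str:
        return (f"You are {self.name}. Background: {'; '.join(self.expertise)}. "
                f"You believe: {'; '.join(self.beliefs)}.")


class Host:
    """Hypothetical moderator that balances participation across the panel."""

    def __init__(self, panel: list[LayeredPersona]):
        self.panel = panel
        self.turns = Counter({p.name: 0 for p in panel})

    def next_speaker(self, last: Optional[str] = None) -> LayeredPersona:
        # Never give the floor to the same panelist twice in a row (unless they are alone),
        # then pick whoever has spoken least; ties go to panel order.
        candidates = [p for p in self.panel if p.name != last] or self.panel
        chosen = min(candidates, key=lambda p: self.turns[p.name])
        self.turns[chosen.name] += 1
        return chosen
```

Prepending `render()` output to a multi-step prompt like those in the Prompts section covers the second bullet; a fuller host would also judge topical drift and depth, which this sketch does not attempt.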

📄 Prompts

Prompt Explanation: Baseline — tests one-shot generation for the whole dialog without persona or reasoning workflow.

Persona: ... (persona description)

Generate a panel dialogue on {topic} as a single expert agent, producing the entire conversation in one step without using any reasoning or persona modules.

Prompt Explanation: Basic Recall — tests minimal reasoning by retrieving relevant knowledge before generating responses.

Persona: ... (persona description)

Given the following topic: {topic}

Step 1: Recall any relevant knowledge or experience from your background related to the topic.

Step 2: Generate your response to the panel discussion based only on this recalled knowledge.

Prompt Explanation: Critical Analysis — expands on Recall by adding analysis and evaluation before response.

Persona: ... (persona description)

Given the following topic: {topic}

Step 1: Recall relevant knowledge and experience from your background related to the topic.

Step 2: Analyze and evaluate the recalled information, highlighting strengths, weaknesses, and implications.

Step 3: Generate your response to the panel discussion based on your critical analysis.

Prompt Explanation: Guided Discourse — lets the agent choose from explicit communication strategies after critical analysis.

Persona: ... (persona description)

Given the following topic: {topic}

Step 1: Recall relevant knowledge and experience from your background related to the topic.

Step 2: Analyze and evaluate the recalled information.

Step 3: Choose a communication strategy for this turn: (a) question, (b) answer, (c) scholarly agreement, (d) constructive critique, or (e) synthesis.

Step 4: Generate your response to the panel discussion, clearly indicating the selected strategy.

Prompt Explanation: Strategic Inference — after recall, justifies and selects a discourse strategy based on expertise before responding.

Persona: ... (persona description)

Given the following topic: {topic}

Step 1: Recall relevant knowledge and experience from your background.

Step 2: Consider and select the most appropriate discourse strategy for this turn (question, answer, scholarly agreement, constructive critique, or synthesis), justifying your choice based on your expertise and beliefs.

Step 3: Generate your response to the panel discussion using the selected strategy.

Prompt Explanation: Full Reasoning-chain — combines all components: recall, analysis, evaluation, strategic inference, and explicit strategy-driven utterance generation.

Persona: ... (persona description)

Given the following topic: {topic}

Step 1: Recall relevant knowledge and experience from your background.

Step 2: Analyze and critically evaluate the recalled information, considering strengths, weaknesses, and implications.

Step 3: Select the most appropriate discourse strategy (question, answer, scholarly agreement, constructive critique, or synthesis), explaining your reasoning.

Step 4: Generate your response to the panel discussion using the selected strategy and incorporating insights from your analysis.
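The six templates differ mainly in which modules they chain together, so they can be generated from a single table of steps, which keeps an ablation like this one consistent. This sketch paraphrases the step wording above rather than reproducing every variant verbatim; the variant keys, `STEP_TEXT`, and `build_prompt` are hypothetical names, and the code illustrates the structure of the comparison rather than the paper's own code.

```python
# Step wording paraphrased from the templates above.
STEP_TEXT = {
    "recall": "Recall relevant knowledge and experience from your background.",
    "analyze": ("Analyze and critically evaluate the recalled information, "
                "considering strengths, weaknesses, and implications."),
    "choose": ("Choose a communication strategy for this turn: question, answer, "
               "scholarly agreement, constructive critique, or synthesis."),
    "justify": ("Select the most appropriate discourse strategy (question, answer, scholarly "
                "agreement, constructive critique, or synthesis), explaining your reasoning."),
    "respond": "Generate your response to the panel discussion.",
}

# Which modules each variant chains together, in order.
VARIANTS = {
    "basic_recall": ["recall", "respond"],
    "critical_analysis": ["recall", "analyze", "respond"],
    "guided_discourse": ["recall", "analyze", "choose", "respond"],
    "strategic_inference": ["recall", "justify", "respond"],
    "full_reasoning_chain": ["recall", "analyze", "justify", "respond"],
}


def build_prompt(variant: str, persona: str, topic: str) -> str:
    """Assemble the numbered-step prompt for one variant; the baseline is a single instruction."""
    if variant == "baseline":
        return (f"Persona: {persona}\n\nGenerate a panel dialogue on {topic} as a single expert "
                "agent, producing the entire conversation in one step without using any "
                "reasoning or persona modules.")
    lines = [f"Persona: {persona}", f"Given the following topic: {topic}"]
    lines += [f"Step {i}: {STEP_TEXT[s]}" for i, s in enumerate(VARIANTS[variant], start=1)]
    return "\n\n".join(lines)
```

Adding or removing a module then means editing one list in `VARIANTS` rather than six hand-written prompts.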

⏰ When is this relevant?

A financial services company wants to test how different small business owner segments would react to a new digital lending product. The goal is to simulate realistic panel-style feedback using AI personas—mirroring a group interview—to surface objections, positive reactions, and feature requests across three types: tech-savvy urban founders, cash-flow-focused rural shopkeepers, and growth-oriented franchisees.

🔢 Follow the Instructions:

1. Define audience segments: Draft concise persona profiles for each segment based on real-world customer research:

• Tech-savvy urban founder: 32, operates an e-commerce startup, values fast digital tools, open to new technology.

• Cash-flow-focused rural shopkeeper: 54, owns a hardware store in a small town, wary of debt, prefers face-to-face service.

• Growth-oriented franchisee: 42, runs several fast-food franchises, experienced with bank loans, seeks scalable solutions.

2. Prepare the group panel simulation prompt template: Use this template to simulate a roundtable discussion:

You are participating in a group panel as [insert persona: e.g., a tech-savvy urban founder, a cash-flow-focused rural shopkeeper, or a growth-oriented franchisee].

A new digital lending product is being introduced: "InstantFlex Loan", an online loan with same-day approval, customized repayment options, and no paperwork required. The product is designed for small businesses and can be managed entirely through a mobile app.

A moderator will ask for your honest reactions, concerns, and questions. Please respond in 2–4 sentences, staying true to your persona's priorities and style.

First question: "What is your initial reaction to this new lending product? What excites or worries you most about it?"

3. Run the prompt for each persona: Enter the prompt for each segment in a language model (like GPT-4), generating 3–5 responses per persona to capture a range of possible opinions and conversational styles.

4. Simulate panel discussion flow: For each segment, use the following follow-up prompts to deepen the discussion:

• "How would this product compare to other financing options you've used?"

• "What features would you want added or changed to make it more appealing?"

• "How likely would you be to recommend this to peers in your industry? Why or why not?"

Chain the responses so that each persona sees what the others have said, as if they were taking turns in a real group setting.

5. Tag and summarize key themes: Review all responses and tag them with simple labels such as "enthusiastic about speed," "distrusts digital process," "requests lower fees," or "concerned about support." Note any segment-specific objections or repeated positive points.

6. Compare and report: Create a short summary for business stakeholders showing which segments are most receptive, which features or messages resonate, and where resistance or misunderstandings are most common. Use example quotes for realism.
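Steps 1–6 can be strung together into one small script. This is a hedged sketch, not a validated research tool: the `llm` callable stands in for whatever model client you use (no specific API is assumed), the segment keys, keyword rules, and the positive/negative split of tags are hypothetical, and the net score is a crude heuristic rather than an established metric.

```python
import re
from collections import Counter, defaultdict
from string import Template
from typing import Callable

# Step 1: segment profiles, abbreviated from the list above.
SEGMENTS = {
    "urban": "a tech-savvy urban founder (32, e-commerce startup, values fast digital tools)",
    "rural": "a cash-flow-focused rural shopkeeper (54, small-town hardware store, wary of debt)",
    "franchise": "a growth-oriented franchisee (42, several fast-food franchises, seeks scalable solutions)",
}

# Step 2: the template above, extended with a $history slot so later speakers see earlier ones.
PROMPT = Template(
    "You are participating in a group panel as $persona.\n"
    'A new digital lending product is being introduced: "InstantFlex Loan", an online loan with '
    "same-day approval, customized repayment options, and no paperwork required, managed "
    "entirely through a mobile app.\n"
    "Earlier in the panel:\n$history\n"
    "Respond in 2–4 sentences, staying true to your persona's priorities and style.\n"
    'Moderator: "$question"'
)

# Steps 2 and 4: the opening question followed by the three follow-ups.
QUESTIONS = [
    "What is your initial reaction to this new lending product? What excites or worries you most about it?",
    "How would this product compare to other financing options you've used?",
    "What features would you want added or changed to make it more appealing?",
    "How likely would you be to recommend this to peers in your industry? Why or why not?",
]

# Step 5: hypothetical keyword rules for the example tags; refine them after reading real output.
TAG_RULES = {
    "enthusiastic about speed": ("same-day", "fast", "quick"),
    "distrusts digital process": ("don't trust", "in person", "face-to-face"),
    "requests lower fees": ("fee", "fees", "interest rate"),
    "concerned about support": ("support", "talk to someone"),
}
POSITIVE = {"enthusiastic about speed"}  # assumption: every other tag signals resistance


def run_panel(llm: Callable[[str], str]) -> list[tuple[str, str]]:
    """Steps 3-4: segments answer each question in turn, each seeing all earlier answers."""
    transcript: list[tuple[str, str]] = []
    for question in QUESTIONS:
        for key, persona in SEGMENTS.items():
            history = "\n".join(f"- {k}: {a}" for k, a in transcript) or "- (nothing yet)"
            prompt = PROMPT.substitute(persona=persona, history=history, question=question)
            transcript.append((key, llm(prompt)))
    return transcript


def tag(answer: str) -> set[str]:
    """Step 5: label an answer with every tag whose keywords appear as whole words."""
    text = answer.lower()
    return {label for label, words in TAG_RULES.items()
            if any(re.search(rf"\b{re.escape(w)}\b", text) for w in words)}


def summarize(transcript: list[tuple[str, str]]) -> dict[str, dict]:
    """Step 6: per-segment top tags plus a net score (positive minus negative tags per answer)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    answers: Counter = Counter()
    for key, answer in transcript:
        counts[key].update(tag(answer))
        answers[key] += 1
    report = {}
    for key, c in counts.items():
        pos = sum(n for label, n in c.items() if label in POSITIVE)
        neg = sum(n for label, n in c.items() if label not in POSITIVE)
        report[key] = {"top_tags": c.most_common(2), "net": (pos - neg) / answers[key]}
    return report
```

Calling `run_panel` three to five times with sampling enabled in your model client, then pooling the transcripts, gives the repeated responses per persona that step 3 asks for. Keyword tagging is only a first pass, so read the underlying quotes before reporting to stakeholders.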

🤔 What should I expect?

You will get a realistic, segment-by-segment readout of likely reactions to the new product, showing which audience is easiest to convert, which objections need to be addressed in future campaigns, and which feature or policy adjustments might improve uptake, all without running a live focus group.