DSPy Primer by Mike Taylor

Listen to this behind-the-scenes, impromptu training session between Mike and the Every.to team. Mike gives an introduction to the DSPy framework.

Good Innovation overcame the constraints of small budgets and limited sample sizes by integrating AskRally into their research process, scaling validation from a handful of interviews to 100+ participants. This hybrid approach has accelerated timelines, cut costs, and given UK charities more confident insights to guide innovation.

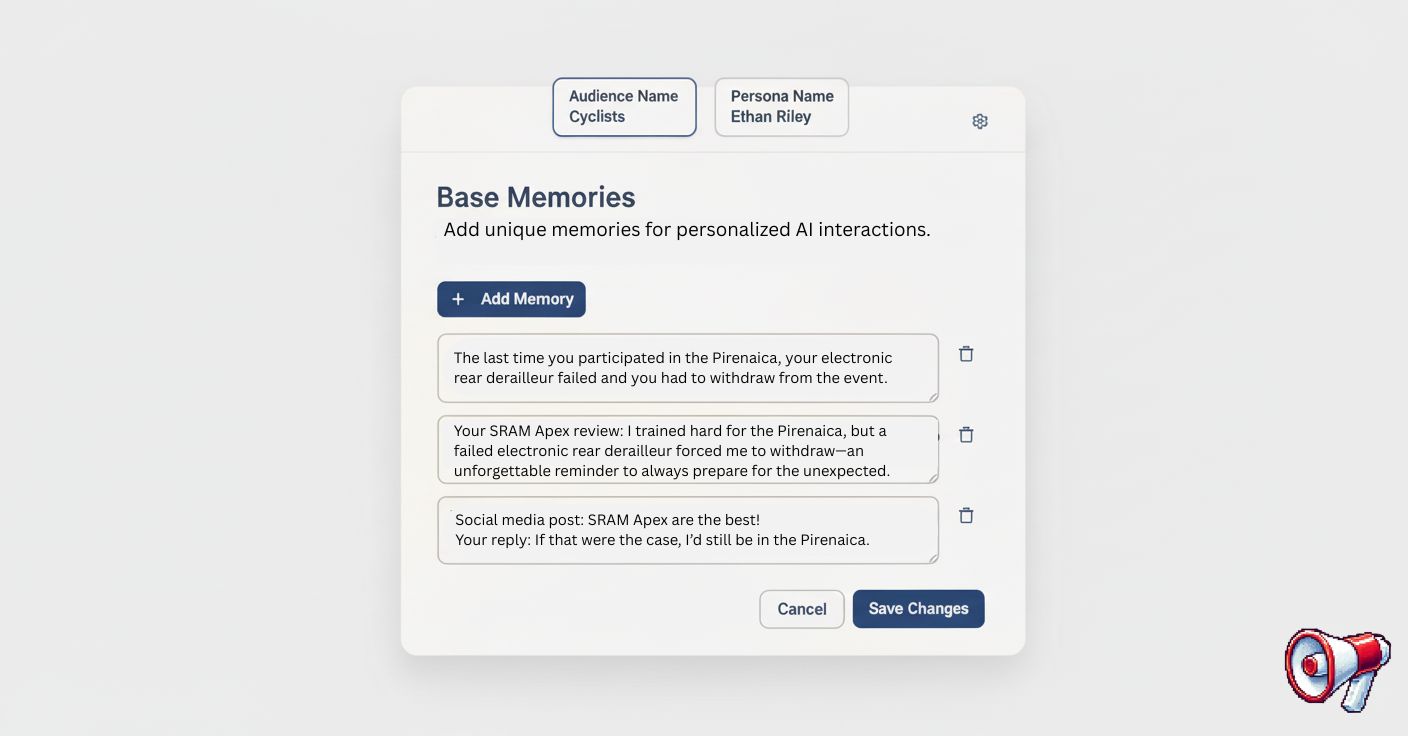

Give your AI personas personalized memory without the manual setup hassle.

Making AI assistants feel genuinely conversational isn't magic; it's methodical engineering.

Researchers often face the limitation that once a study ends, the insights are frozen in a static report. Study boosting solves this by transforming real survey data into living AI personas, allowing teams to extend research, test new scenarios, and localize messaging with authentic, data-grounded voices.

A strategic agency helped a client avoid costly missteps by using AskRally's synthetic testing to uncover hidden consumer segments and decision drivers before launch. By replacing assumptions with pre-launch intelligence, they reshaped pricing, messaging, and media strategy, expanding market reach and improving spend efficiency.

Following last week's behind-the-scenes look at our video reaction simulator, here are a few more examples that highlight how AI personas respond to different types of content. From tech commentary to TikTok clips, these simulated reactions offer a glimpse into the diverse ways people engage with Reels.

Most founders guess their pricing using intuition or competitor benchmarks, unknowingly leaving massive revenue on the table or scaring away customers. Rally uses AI to make professional-grade Van Westendorp pricing analysis accessible for just $50, helping startups price smarter, faster, and without the $5,000+ cost of traditional market research.

Before flying a paraglider 430 kilometers across the Pyrenees, I rehearsed the entire journey in my head using nothing but Google Earth on an iPhone. It permanently changed how I understand preparation. That same mindset led me to build a tool that lets you watch how people might react to your message before it matters, turning AI personas into a flight simulator for human empathy.